diff --git a/CHANGELOG.md b/CHANGELOG.md

index 542e20192a..7f58575ae1 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -2,7 +2,26 @@

## New Features:

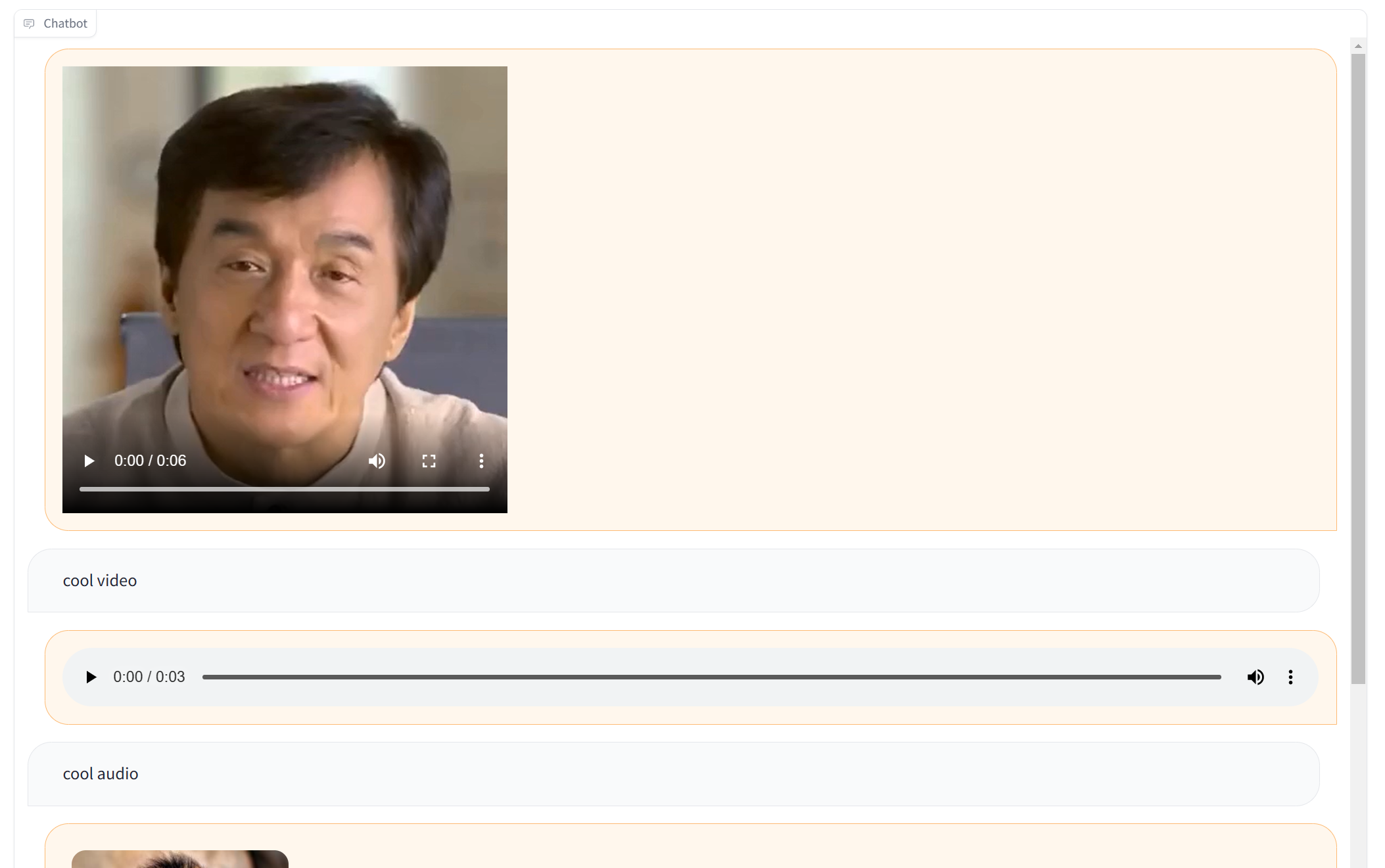

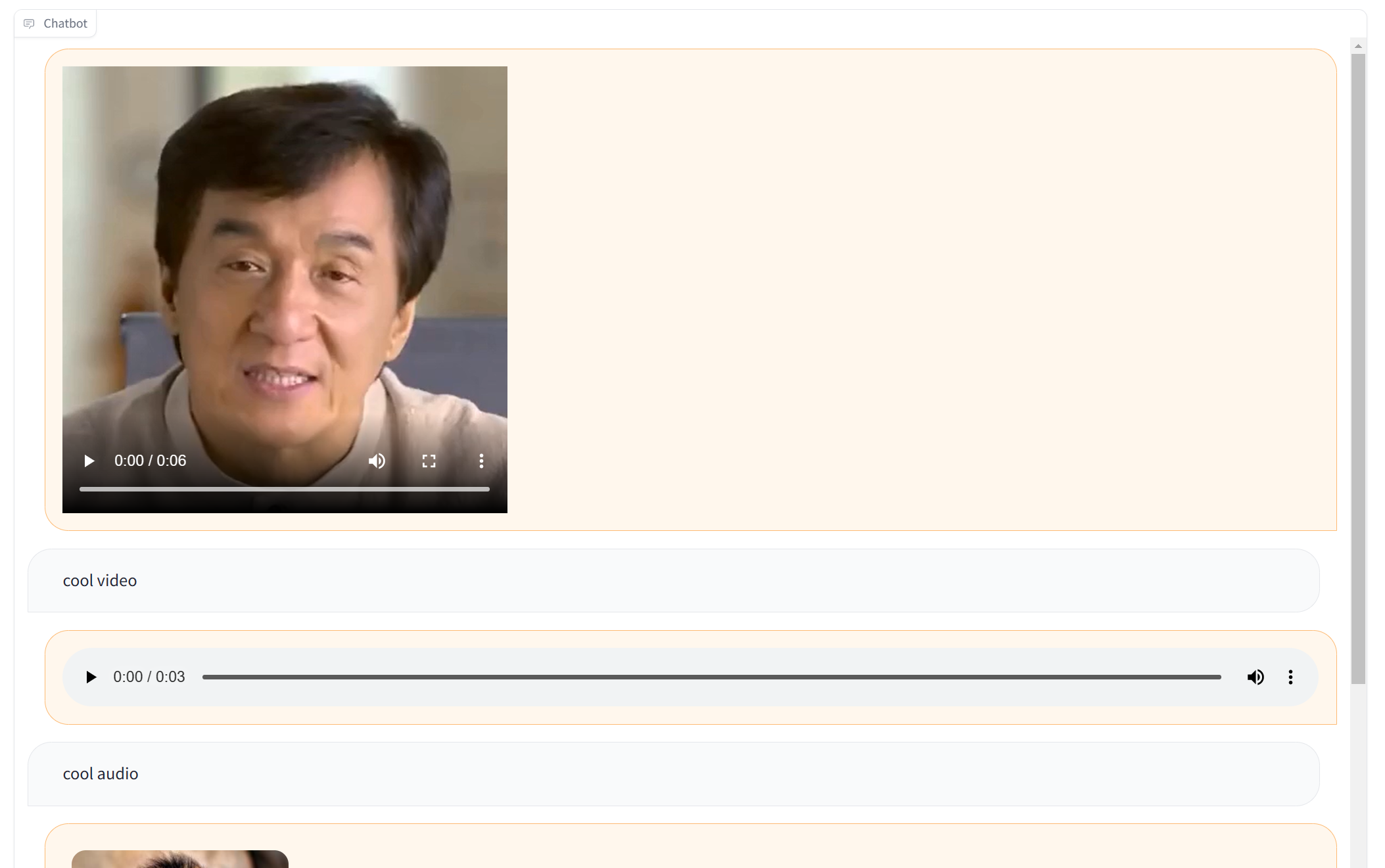

+### The `Chatbot` component now supports audio, video, and images

+

+The `Chatbot` component now supports audio, video, and images with a simple syntax:

+pass in a tuple with the URL or filepath (the optional second element of the tuple is alt text), and the image, audio, or video will be displayed:

+

+```python

+gr.Chatbot([

+ (("driving.mp4",), "cool video"),

+ (("cantina.wav",), "cool audio"),

+ (("lion.jpg", "A lion"), "cool pic"),

+]).style(height=800)

+```

+

+

+

+Note: images were previously supported via Markdown syntax, which is still supported for backwards compatibility. By [@dawoodkhan82](https://github.com/dawoodkhan82) in [PR 3413](https://github.com/gradio-app/gradio/pull/3413)

+

- Allow consecutive function triggers with `.then` and `.success` by [@aliabid94](https://github.com/aliabid94) in [PR 3430](https://github.com/gradio-app/gradio/pull/3430)

+

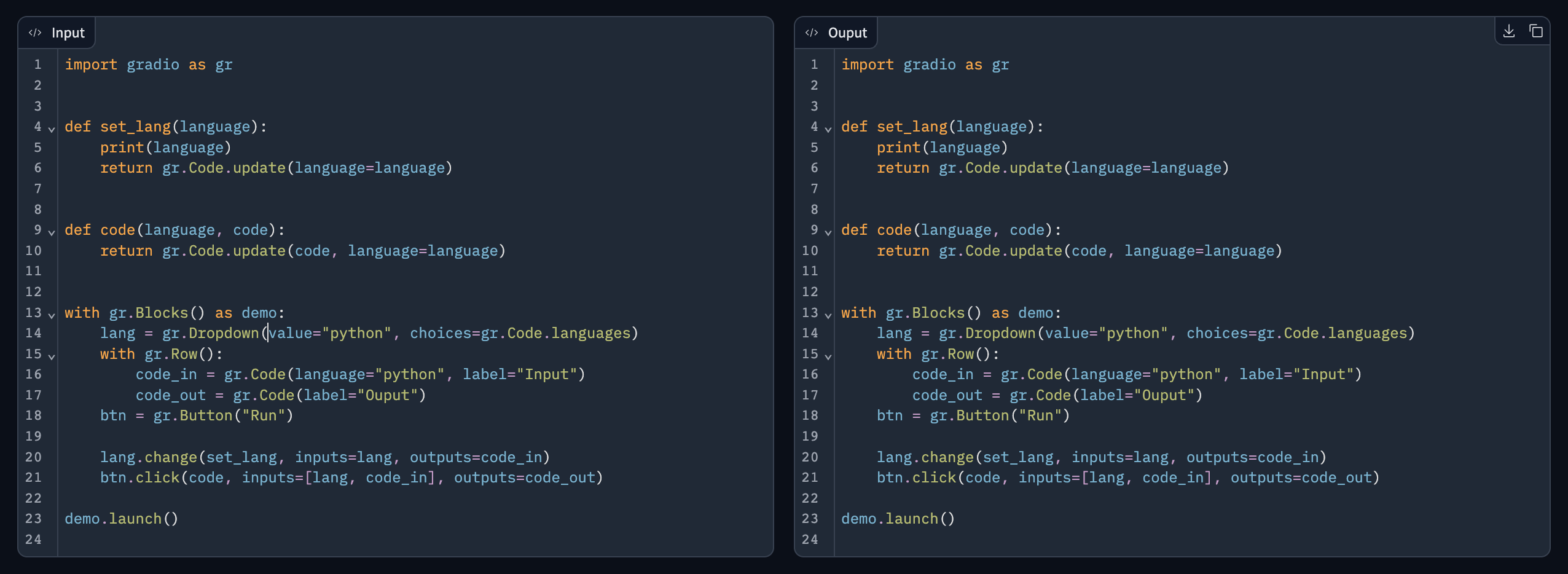

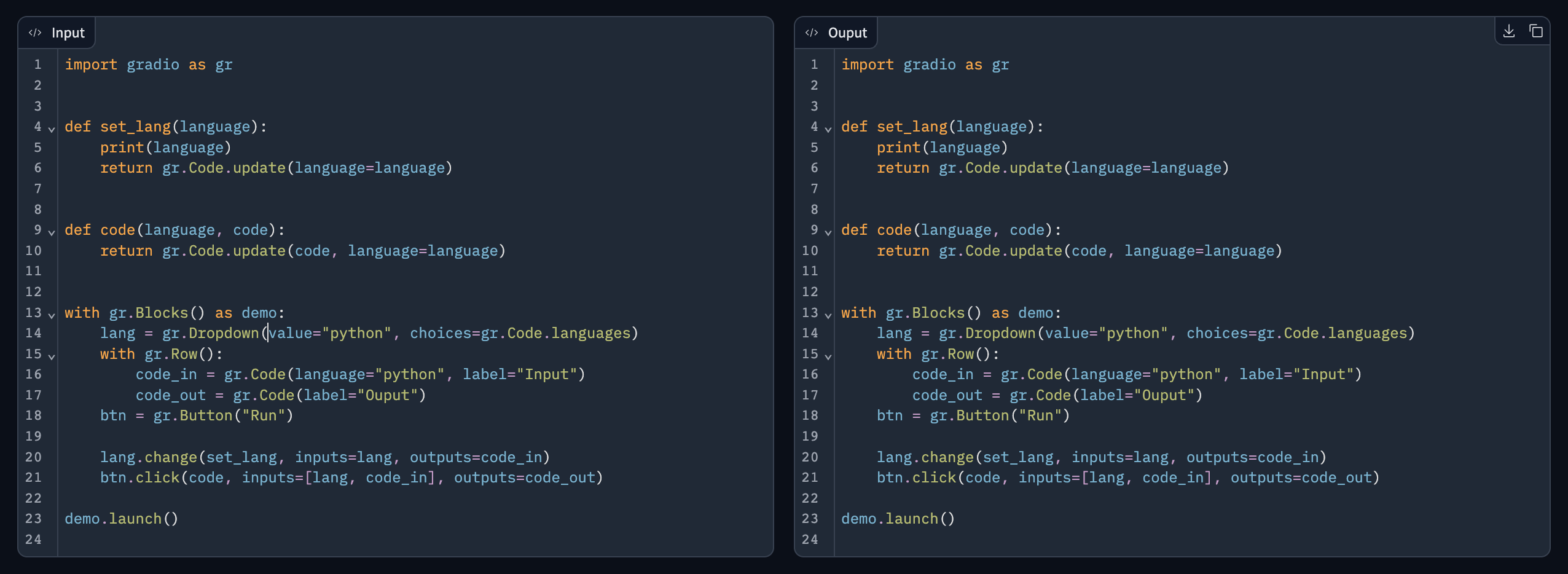

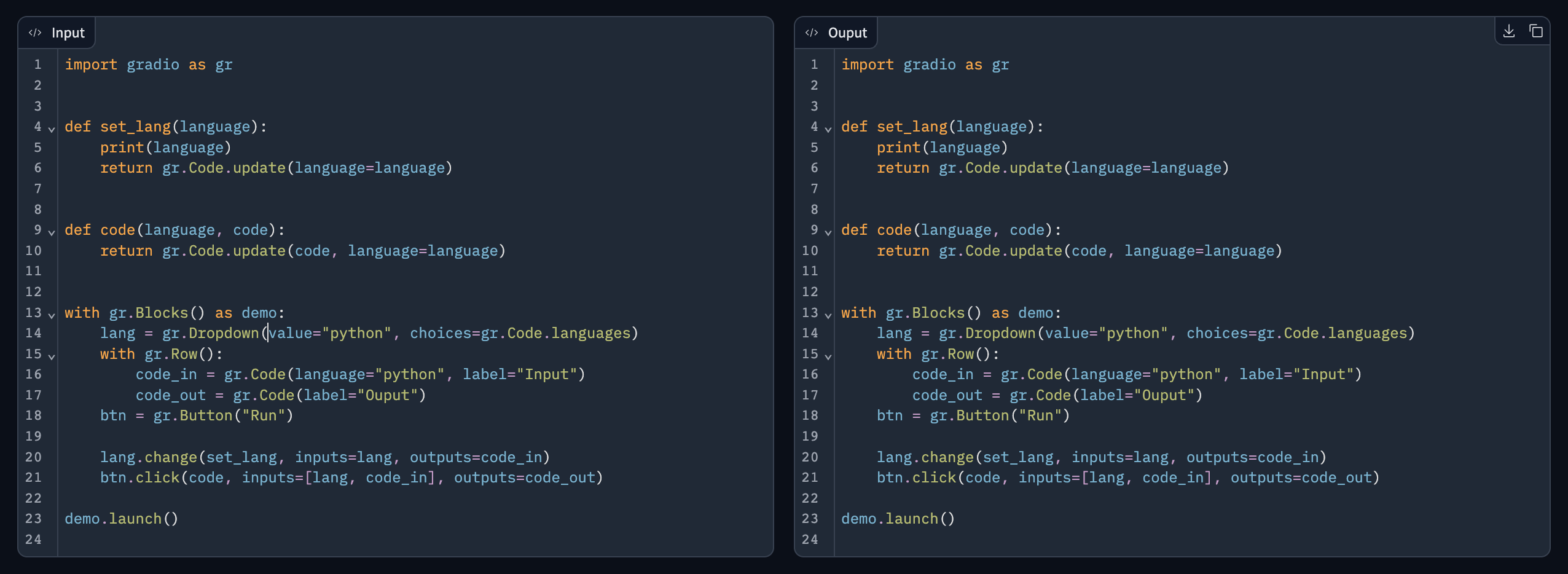

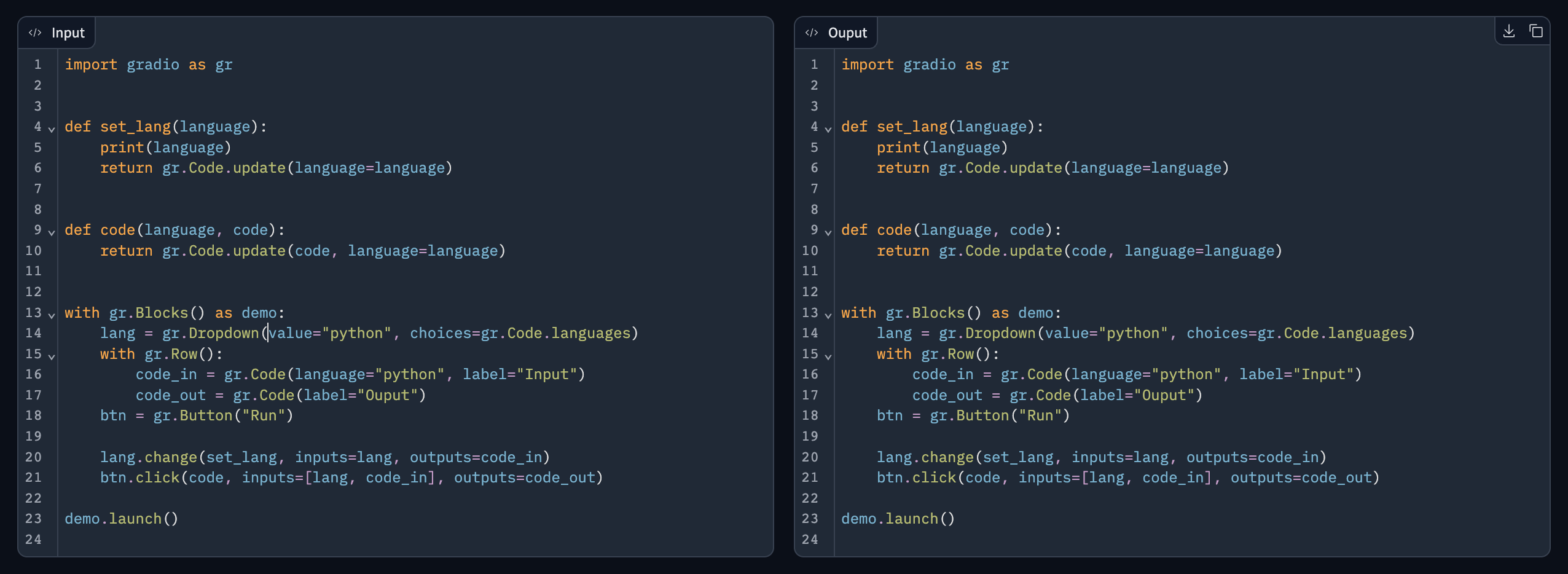

- New code component allows you to enter, edit and display code with full syntax highlighting by [@pngwn](https://github.com/pngwn) in [PR 3421](https://github.com/gradio-app/gradio/pull/3421)

diff --git a/demo/chatbot_demo/run.ipynb b/demo/chatbot_demo/run.ipynb

deleted file mode 100644

index 7c618e1d67..0000000000

--- a/demo/chatbot_demo/run.ipynb

+++ /dev/null

@@ -1 +0,0 @@

-{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_demo"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "from transformers import AutoModelForCausalLM, AutoTokenizer\n", "import torch\n", "\n", "tokenizer = AutoTokenizer.from_pretrained(\"microsoft/DialoGPT-medium\")\n", "model = AutoModelForCausalLM.from_pretrained(\"microsoft/DialoGPT-medium\")\n", "\n", "def predict(input, history=[]):\n", " # tokenize the new input sentence\n", " new_user_input_ids = tokenizer.encode(input + tokenizer.eos_token, return_tensors='pt')\n", "\n", " # append the new user input tokens to the chat history\n", " bot_input_ids = torch.cat([torch.LongTensor(history), new_user_input_ids], dim=-1)\n", "\n", " # generate a response \n", " history = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id).tolist()\n", "\n", " # convert the tokens to text, and then split the responses into lines\n", " response = tokenizer.decode(history[0]).split(\"<|endoftext|>\")\n", " response = [(response[i], response[i+1]) for i in range(0, len(response)-1, 2)] # convert to tuples of list\n", " return response, history\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot()\n", " state = gr.State([])\n", "\n", " with gr.Row():\n", " txt = gr.Textbox(show_label=False, placeholder=\"Enter text and press enter\").style(container=False)\n", "\n", " txt.submit(predict, [txt, state], [chatbot, state])\n", " \n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_dialogpt/run.ipynb b/demo/chatbot_dialogpt/run.ipynb

new file mode 100644

index 0000000000..58b5d3bf58

--- /dev/null

+++ b/demo/chatbot_dialogpt/run.ipynb

@@ -0,0 +1 @@

+{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_dialogpt"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "from transformers import AutoModelForCausalLM, AutoTokenizer\n", "import torch\n", "\n", "tokenizer = AutoTokenizer.from_pretrained(\"microsoft/DialoGPT-medium\")\n", "model = AutoModelForCausalLM.from_pretrained(\"microsoft/DialoGPT-medium\")\n", "\n", "def user(message, history):\n", " return \"\", history + [[message, None]]\n", "\n", "\n", "# bot_message = random.choice([\"Yes\", \"No\"])\n", "# history[-1][1] = bot_message\n", "# time.sleep(1)\n", "# return history\n", "\n", "# def predict(input, history=[]):\n", "# # tokenize the new input sentence\n", "\n", "def bot(history):\n", " user_message = history[-1][0]\n", " new_user_input_ids = tokenizer.encode(user_message + tokenizer.eos_token, return_tensors='pt')\n", "\n", " # append the new user input tokens to the chat history\n", " bot_input_ids = torch.cat([torch.LongTensor(history), new_user_input_ids], dim=-1)\n", "\n", " # generate a response \n", " history = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id).tolist()\n", "\n", " # convert the tokens to text, and then split the responses into lines\n", " response = tokenizer.decode(history[0]).split(\"<|endoftext|>\")\n", " response = [(response[i], response[i+1]) for i in range(0, len(response)-1, 2)] # convert to tuples of list\n", " return history\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot()\n", " msg = gr.Textbox()\n", " clear = gr.Button(\"Clear\")\n", "\n", " msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(\n", " bot, chatbot, chatbot\n", " )\n", " clear.click(lambda: None, None, chatbot, queue=False)\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_demo/run.py b/demo/chatbot_dialogpt/run.py

similarity index 56%

rename from demo/chatbot_demo/run.py

rename to demo/chatbot_dialogpt/run.py

index 937d901a67..18bbfdf190 100644

--- a/demo/chatbot_demo/run.py

+++ b/demo/chatbot_dialogpt/run.py

@@ -5,9 +5,21 @@ import torch

tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")

model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

-def predict(input, history=[]):

- # tokenize the new input sentence

- new_user_input_ids = tokenizer.encode(input + tokenizer.eos_token, return_tensors='pt')

+def user(message, history):

+ return "", history + [[message, None]]

+

+

+# bot_message = random.choice(["Yes", "No"])

+# history[-1][1] = bot_message

+# time.sleep(1)

+# return history

+

+# def predict(input, history=[]):

+# # tokenize the new input sentence

+

+def bot(history):

+ user_message = history[-1][0]

+ new_user_input_ids = tokenizer.encode(user_message + tokenizer.eos_token, return_tensors='pt')

# append the new user input tokens to the chat history

bot_input_ids = torch.cat([torch.LongTensor(history), new_user_input_ids], dim=-1)

@@ -18,16 +30,17 @@ def predict(input, history=[]):

# convert the tokens to text, and then split the responses into lines

response = tokenizer.decode(history[0]).split("<|endoftext|>")

response = [(response[i], response[i+1]) for i in range(0, len(response)-1, 2)] # convert to tuples of list

- return response, history

+ return history

with gr.Blocks() as demo:

chatbot = gr.Chatbot()

- state = gr.State([])

+ msg = gr.Textbox()

+ clear = gr.Button("Clear")

- with gr.Row():

- txt = gr.Textbox(show_label=False, placeholder="Enter text and press enter").style(container=False)

+ msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(

+ bot, chatbot, chatbot

+ )

+ clear.click(lambda: None, None, chatbot, queue=False)

- txt.submit(predict, [txt, state], [chatbot, state])

-

if __name__ == "__main__":

demo.launch()

diff --git a/demo/chatbot_demo/screenshot.gif b/demo/chatbot_dialogpt/screenshot.gif

similarity index 100%

rename from demo/chatbot_demo/screenshot.gif

rename to demo/chatbot_dialogpt/screenshot.gif

diff --git a/demo/chatbot_demo/screenshot.png b/demo/chatbot_dialogpt/screenshot.png

similarity index 100%

rename from demo/chatbot_demo/screenshot.png

rename to demo/chatbot_dialogpt/screenshot.png

diff --git a/demo/chatbot_multimodal/run.ipynb b/demo/chatbot_multimodal/run.ipynb

index 77b8201e19..450ac4c23a 100644

--- a/demo/chatbot_multimodal/run.ipynb

+++ b/demo/chatbot_multimodal/run.ipynb

@@ -1 +1 @@

-{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_multimodal"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "from urllib.parse import quote\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot(elem_id=\"chatbot\").style(height=500)\n", "\n", " with gr.Row():\n", " with gr.Column(scale=0.85):\n", " txt = gr.Textbox(\n", " show_label=False,\n", " placeholder=\"Enter text and press enter, or upload an image\",\n", " ).style(container=False)\n", " with gr.Column(scale=0.15, min_width=0):\n", " btn = gr.UploadButton(\"\ud83d\uddbc\ufe0f\", file_types=[\"image\"])\n", "\n", " def add_text(history, text):\n", " history = history + [(text, None)]\n", " return history, \"\"\n", "\n", " def add_image(history, image):\n", " history = history + [(f\"})\", None)]\n", " return history\n", "\n", " def bot_response(history):\n", " response = \"Cool!\"\n", " history[-1][1] = response\n", " return history\n", "\n", " txt.submit(add_text, [chatbot, txt], [chatbot, txt]).then(\n", " bot_response, chatbot, chatbot\n", " )\n", " btn.upload(add_image, [chatbot, btn], [chatbot]).then(\n", " bot_response, chatbot, chatbot\n", " )\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

+{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_multimodal"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "\n", "def add_text(history, text):\n", " history = history + [(text, None)]\n", " return history, \"\"\n", "\n", "def add_file(history, file):\n", " history = history + [((file.name,), None)]\n", " return history\n", "\n", "def bot(history):\n", " response = \"**That's cool!**\"\n", " history[-1][1] = response\n", " return history\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot([], elem_id=\"chatbot\").style(height=750)\n", " \n", " with gr.Row():\n", " with gr.Column(scale=0.85):\n", " txt = gr.Textbox(\n", " show_label=False,\n", " placeholder=\"Enter text and press enter, or upload an image\",\n", " ).style(container=False)\n", " with gr.Column(scale=0.15, min_width=0):\n", " btn = gr.UploadButton(\"\ud83d\udcc1\", file_types=[\"image\", \"video\", \"audio\"])\n", " \n", " txt.submit(add_text, [chatbot, txt], [chatbot, txt]).then(\n", " bot, chatbot, chatbot\n", " )\n", " btn.upload(add_file, [chatbot, btn], [chatbot]).then(\n", " bot, chatbot, chatbot\n", " )\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_multimodal/run.py b/demo/chatbot_multimodal/run.py

index 6a5344db45..1bc15a67fb 100644

--- a/demo/chatbot_multimodal/run.py

+++ b/demo/chatbot_multimodal/run.py

@@ -1,9 +1,21 @@

import gradio as gr

-from urllib.parse import quote

+

+def add_text(history, text):

+ history = history + [(text, None)]

+ return history, ""

+

+def add_file(history, file):

+ history = history + [((file.name,), None)]

+ return history

+

+def bot(history):

+ response = "**That's cool!**"

+ history[-1][1] = response

+ return history

with gr.Blocks() as demo:

- chatbot = gr.Chatbot(elem_id="chatbot").style(height=500)

-

+ chatbot = gr.Chatbot([], elem_id="chatbot").style(height=750)

+

with gr.Row():

with gr.Column(scale=0.85):

txt = gr.Textbox(

@@ -11,26 +23,13 @@ with gr.Blocks() as demo:

placeholder="Enter text and press enter, or upload an image",

).style(container=False)

with gr.Column(scale=0.15, min_width=0):

- btn = gr.UploadButton("🖼️", file_types=["image"])

-

- def add_text(history, text):

- history = history + [(text, None)]

- return history, ""

-

- def add_image(history, image):

- history = history + [(f"})", None)]

- return history

-

- def bot_response(history):

- response = "Cool!"

- history[-1][1] = response

- return history

-

+ btn = gr.UploadButton("📁", file_types=["image", "video", "audio"])

+

txt.submit(add_text, [chatbot, txt], [chatbot, txt]).then(

- bot_response, chatbot, chatbot

+ bot, chatbot, chatbot

)

- btn.upload(add_image, [chatbot, btn], [chatbot]).then(

- bot_response, chatbot, chatbot

+ btn.upload(add_file, [chatbot, btn], [chatbot]).then(

+ bot, chatbot, chatbot

)

if __name__ == "__main__":

diff --git a/demo/chatbot_simple/run.ipynb b/demo/chatbot_simple/run.ipynb

new file mode 100644

index 0000000000..78ec25d29a

--- /dev/null

+++ b/demo/chatbot_simple/run.ipynb

@@ -0,0 +1 @@

+{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_simple"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "import random\n", "import time\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot()\n", " msg = gr.Textbox()\n", " clear = gr.Button(\"Clear\")\n", "\n", " def user(user_message, history):\n", " return \"\", history + [[user_message, None]]\n", "\n", " def bot(history):\n", " bot_message = random.choice([\"Yes\", \"No\"])\n", " history[-1][1] = bot_message\n", " time.sleep(1)\n", " return history\n", "\n", " msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(\n", " bot, chatbot, chatbot\n", " )\n", " clear.click(lambda: None, None, chatbot, queue=False)\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_simple/run.py b/demo/chatbot_simple/run.py

new file mode 100644

index 0000000000..2057b311e0

--- /dev/null

+++ b/demo/chatbot_simple/run.py

@@ -0,0 +1,25 @@

+import gradio as gr

+import random

+import time

+

+with gr.Blocks() as demo:

+ chatbot = gr.Chatbot()

+ msg = gr.Textbox()

+ clear = gr.Button("Clear")

+

+ def user(user_message, history):

+ return "", history + [[user_message, None]]

+

+ def bot(history):

+ bot_message = random.choice(["Yes", "No"])

+ history[-1][1] = bot_message

+ time.sleep(1)

+ return history

+

+ msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(

+ bot, chatbot, chatbot

+ )

+ clear.click(lambda: None, None, chatbot, queue=False)

+

+if __name__ == "__main__":

+ demo.launch()

diff --git a/demo/chatbot_simple_demo/run.ipynb b/demo/chatbot_simple_demo/run.ipynb

deleted file mode 100644

index 55365909ed..0000000000

--- a/demo/chatbot_simple_demo/run.ipynb

+++ /dev/null

@@ -1 +0,0 @@

-{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_simple_demo"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "import random\n", "import time\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot()\n", " msg = gr.Textbox()\n", " clear = gr.Button(\"Clear\")\n", "\n", " def user_message(message, history):\n", " return \"\", history + [[message, None]]\n", "\n", " def bot_message(history):\n", " response = random.choice([\"Yes\", \"No\"])\n", " history[-1][1] = response\n", " time.sleep(1)\n", " return history\n", "\n", " msg.submit(user_message, [msg, chatbot], [msg, chatbot], queue=False).then(\n", " bot_message, chatbot, chatbot\n", " )\n", " clear.click(lambda: None, None, chatbot, queue=False)\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_simple_demo/run.py b/demo/chatbot_simple_demo/run.py

deleted file mode 100644

index c01cfa03ea..0000000000

--- a/demo/chatbot_simple_demo/run.py

+++ /dev/null

@@ -1,25 +0,0 @@

-import gradio as gr

-import random

-import time

-

-with gr.Blocks() as demo:

- chatbot = gr.Chatbot()

- msg = gr.Textbox()

- clear = gr.Button("Clear")

-

- def user_message(message, history):

- return "", history + [[message, None]]

-

- def bot_message(history):

- response = random.choice(["Yes", "No"])

- history[-1][1] = response

- time.sleep(1)

- return history

-

- msg.submit(user_message, [msg, chatbot], [msg, chatbot], queue=False).then(

- bot_message, chatbot, chatbot

- )

- clear.click(lambda: None, None, chatbot, queue=False)

-

-if __name__ == "__main__":

- demo.launch()

diff --git a/gradio/components.py b/gradio/components.py

index 92532ce390..3860c65d5e 100644

--- a/gradio/components.py

+++ b/gradio/components.py

@@ -2901,7 +2901,7 @@ class State(IOComponent, SimpleSerializable):

Preprocessing: No preprocessing is performed

Postprocessing: No postprocessing is performed

- Demos: chatbot_demo, blocks_simple_squares

+ Demos: blocks_simple_squares

Guides: creating_a_chatbot, real_time_speech_recognition

"""

@@ -3928,9 +3928,9 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

"""

Displays a chatbot output showing both user submitted messages and responses. Supports a subset of Markdown including bold, italics, code, and images.

Preprocessing: this component does *not* accept input.

- Postprocessing: expects function to return a {List[Tuple[str | None, str | None]]}, a list of tuples with user inputs and responses as strings of HTML or Nones. Messages that are `None` are not displayed.

+ Postprocessing: expects function to return a {List[Tuple[str | None | Tuple, str | None | Tuple]]}, a list of tuples with user message and response messages. Messages should be strings, tuples, or Nones. If the message is a string, it can include Markdown. If it is a tuple, it should consist of (string filepath to image/video/audio, [optional string alt text]). Messages that are `None` are not displayed.

- Demos: chatbot_demo, chatbot_multimodal

+ Demos: chatbot_simple, chatbot_multimodal

"""

def __init__(

@@ -3956,11 +3956,9 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

"""

if color_map is not None:

warnings.warn(

- "The 'color_map' parameter has been moved from the constructor to `Chatbot.style()` ",

+ "The 'color_map' parameter has been deprecated.",

)

- self.color_map = color_map

self.md = utils.get_markdown_parser()

-

IOComponent.__init__(

self,

label=label,

@@ -3975,20 +3973,17 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

def get_config(self):

return {

"value": self.value,

- "color_map": self.color_map,

**IOComponent.get_config(self),

}

@staticmethod

def update(

value: Any | Literal[_Keywords.NO_VALUE] | None = _Keywords.NO_VALUE,

- color_map: Tuple[str, str] | None = None,

label: str | None = None,

show_label: bool | None = None,

visible: bool | None = None,

):

updated_config = {

- "color_map": color_map,

"label": label,

"show_label": show_label,

"visible": visible,

@@ -3997,23 +3992,58 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

}

return updated_config

+ def _process_chat_messages(

+ self, chat_message: str | Tuple | List | Dict | None

+ ) -> str | Dict | None:

+ if chat_message is None:

+ return None

+ elif isinstance(chat_message, (tuple, list)):

+ mime_type = processing_utils.get_mimetype(chat_message[0])

+ return {

+ "name": chat_message[0],

+ "mime_type": mime_type,

+ "alt_text": chat_message[1] if len(chat_message) > 1 else None,

+ "data": None, # These last two fields are filled in by the frontend

+ "is_file": True,

+ }

+ elif isinstance(

+ chat_message, dict

+ ): # This happens for previously processed messages

+ return chat_message

+ elif isinstance(chat_message, str):

+ return self.md.renderInline(chat_message)

+ else:

+ raise ValueError(f"Invalid message for Chatbot component: {chat_message}")

+

def postprocess(

- self, y: List[Tuple[str | None, str | None]]

- ) -> List[Tuple[str | None, str | None]]:

+ self,

+ y: List[

+ Tuple[str | Tuple | List | Dict | None, str | Tuple | List | Dict | None]

+ ],

+ ) -> List[Tuple[str | Dict | None, str | Dict | None]]:

"""

Parameters:

- y: List of tuples representing the message and response pairs. Each message and response should be a string, which may be in Markdown format.

+ y: List of tuples representing the message and response pairs. Each message and response should be a string, which may be in Markdown format. It can also be a tuple whose first element is a string filepath or URL to an image/video/audio, and second (optional) element is the alt text, in which case the media file is displayed. It can also be None, in which case that message is not displayed.

Returns:

- List of tuples representing the message and response. Each message and response will be a string of HTML.

+ List of tuples representing the message and response. Each message and response will be a string of HTML, or a dictionary with media information.

"""

if y is None:

return []

- for i, (message, response) in enumerate(y):

- y[i] = (

- None if message is None else self.md.renderInline(message),

- None if response is None else self.md.renderInline(response),

+ processed_messages = []

+ for message_pair in y:

+ assert isinstance(

+ message_pair, (tuple, list)

+ ), f"Expected a list of lists or list of tuples. Received: {message_pair}"

+ assert (

+ len(message_pair) == 2

+ ), f"Expected a list of lists of length 2 or list of tuples of length 2. Received: {message_pair}"

+ processed_messages.append(

+ (

+ self._process_chat_messages(message_pair[0]),

+ self._process_chat_messages(message_pair[1]),

+ )

)

- return y

+ return processed_messages

def style(self, height: int | None = None, **kwargs):

"""

diff --git a/guides/02_building-interfaces/01_interface-state.md b/guides/02_building-interfaces/01_interface-state.md

index 64fced4e5a..9f3c44df51 100644

--- a/guides/02_building-interfaces/01_interface-state.md

+++ b/guides/02_building-interfaces/01_interface-state.md

@@ -20,8 +20,8 @@ Another type of data persistence Gradio supports is session **state**, where dat

A chatbot is an example where you would need session state - you want access to a users previous submissions, but you cannot store chat history in a global variable, because then chat history would get jumbled between different users.

-$code_chatbot_demo

-$demo_chatbot_demo

+$code_chatbot_dialogpt

+$demo_chatbot_dialogpt

Notice how the state persists across submits within each page, but if you load this demo in another tab (or refresh the page), the demos will not share chat history.

diff --git a/guides/03_building-with-blocks/01_blocks-and-event-listeners.md b/guides/03_building-with-blocks/01_blocks-and-event-listeners.md

index fe9cb8c705..b3cd43bed9 100644

--- a/guides/03_building-with-blocks/01_blocks-and-event-listeners.md

+++ b/guides/03_building-with-blocks/01_blocks-and-event-listeners.md

@@ -129,8 +129,8 @@ You can also run events consecutively by using the `then` method of an event lis

For example, in the chatbot example below, we first update the chatbot with the user message immediately, and then update the chatbot with the computer response after a simulated delay.

-$code_chatbot_simple_demo

-$demo_chatbot_simple_demo

+$code_chatbot_simple

+$demo_chatbot_simple

The `.then()` method of an event listener executes the subsequent event regardless of whether the previous event raised any errors. If you'd like to only run subsequent events if the previous event executed successfully, use the `.success()` method, which takes the same arguments as `.then()`.

diff --git a/guides/06_other-tutorials/creating-a-chatbot.md b/guides/06_other-tutorials/creating-a-chatbot.md

index d06d6770f1..b93e110310 100644

--- a/guides/06_other-tutorials/creating-a-chatbot.md

+++ b/guides/06_other-tutorials/creating-a-chatbot.md

@@ -1,177 +1,67 @@

# How to Create a Chatbot

-Related spaces: https://huggingface.co/spaces/dawood/chatbot-guide, https://huggingface.co/spaces/dawood/chatbot-guide-multimodal, https://huggingface.co/spaces/ThomasSimonini/Chat-with-Gandalf-GPT-J6B, https://huggingface.co/spaces/gorkemgoknar/moviechatbot, https://huggingface.co/spaces/Kirili4ik/chat-with-Kirill

-Tags: NLP, TEXT, HTML

+Tags: NLP, TEXT, CHAT

## Introduction

-Chatbots are widely studied in natural language processing (NLP) research and are a common use case of NLP in industry. Because chatbots are designed to be used directly by customers and end users, it is important to validate that chatbots are behaving as expected when confronted with a wide variety of input prompts.

+Chatbots are widely used in natural language processing (NLP) research and industry. Because chatbots are designed to be used directly by customers and end users, it is important to validate that chatbots are behaving as expected when confronted with a wide variety of input prompts.

Using `gradio`, you can easily build a demo of your chatbot model and share that with a testing team, or test it yourself using an intuitive chatbot GUI.

-This tutorial will show how to take a pretrained chatbot model and deploy it with a Gradio interface in 4 steps. The live chatbot interface that we create will look something like this (try it!):

+This tutorial will show how to make two kinds of chatbot UIs with Gradio: a simple one to display text and a more sophisticated one that can handle media files as well. The simple chatbot interface that we create will look something like this:

-

+$demo_chatbot_simple

+

+**Prerequisite**: We'll be using the `gradio.Blocks` class to build our Chatbot demo.

+You can [read the Guide to Blocks first](https://gradio.app/quickstart/#blocks-more-flexibility-and-control) if you are not already familiar with it.

## A Simple Chatbot Demo

-Let's start with a simple demo, with no actual model. Our bot will randomly respond "yes" or "no" to any input.

+Let's start by recreating the simple demo above. As you may have noticed, our bot simply responds "Yes" or "No" at random to any input. Here's the code to create this with Gradio:

-$code_chatbot_simple_demo

-$demo_chatbot_simple_demo

+$code_chatbot_simple

-The chatbot value stores the entire history of the conversation, as a list of response pairs between the user and bot. Note that we chain two event event listeners with `.then` after a user triggers a submit:

+There are three Gradio components here:

+* A `Chatbot`, whose value stores the entire history of the conversation as a list of message pairs between the user and the bot.

+* A `Textbox` where the user can type their message and then press Enter to submit it, which triggers the chatbot response.

+* A `Clear` button to clear the entire chatbot history.

-1. The first method updates the chatbot with the user message and clears the input field. Because we want this to happen instantly, we set `queue=False`, which would skip any queue if it had been enabled.

-2. The second method waits for the bot to respond, and then updates the chatbot with the bot response.

+Note that when a user submits their message, we chain two events with `.then`:

-The reason we split these events is so that the user can see their message appear in the chatbot before the bot responds, which can take time to process.

+1. The first function, `user()`, updates the chatbot with the user's message and clears the input field. Because we want this to happen instantly, we set `queue=False`, which skips the queue if one has been enabled. The new message is appended to the chatbot's history as `[user_message, None]`, where `None` signifies that the bot has not responded yet.

+

+2. The second function, `bot()`, generates the bot's response and updates the chatbot with it. Instead of creating a new message, it replaces the `None` placeholder in the most recent pair with the response. We add a `time.sleep` to simulate the bot's processing time.

+

+We split these events so that the user's message appears in the chatbot immediately, before the bot responds, which can take some time.

Note we pass the entire history of the chatbot to these functions and back to the component. To clear the chatbot, we pass it `None`.
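To make this data flow concrete, here is a minimal sketch that calls the two functions by hand, in the order `.then()` runs them, without launching a Gradio app. It mirrors `user()` and `bot()` from the demo but drops `time.sleep` so it runs instantly. Calling the functions directly like this is only an approximation of what Gradio does when the events fire.

```python
import random


def user(user_message, history):
    # Return "" to clear the Textbox, and append the new message with a
    # None placeholder for the bot's not-yet-generated reply.
    return "", history + [[user_message, None]]


def bot(history):
    # Replace the None placeholder in the most recent pair with the reply.
    history[-1][1] = random.choice(["Yes", "No"])
    return history


# Simulate one submit: user() runs first, then bot() (as chained with .then()).
history = []
textbox_value, history = user("Hello!", history)
print(history)  # [['Hello!', None]]
history = bot(history)
print(history)
```

Because `user()` returns a new list and `bot()` only fills in the last pair, the history always holds exactly one pair per user message.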

-### Using a Model

+Of course, in practice, you would replace `bot()` with your own more complex function, which might call a pretrained model or an API, to generate a response.

-Chatbots are *stateful*, meaning we need to track how the user has previously interacted with the model. So, in this tutorial, we will also cover how to use **state** with Gradio demos.

-### Prerequisites

+## Adding Markdown, Images, Audio, or Videos

-Make sure you have the `gradio` Python package already [installed](/quickstart). To use a pretrained chatbot model, also install `transformers` and `torch`.

+The `gradio.Chatbot` component supports a subset of Markdown, including bold, italics, and code. For example, we could write a function that responds to a user's message with a bold **That's cool!**, like this:

-Let's get started! Here's how to build your own chatbot:

-

- [1. Set up the Chatbot Model](#1-set-up-the-chatbot-model)

- [2. Define a `predict` function](#2-define-a-predict-function)

- [3. Create a Gradio Demo using Blocks](#3-create-a-gradio-demo-using-blocks)

- [4. Chatbot Markdown Support](#4-chatbot-markdown-support)

-

-## 1. Set up the Chatbot Model

-

-First, you will need to have a chatbot model that you have either trained yourself or you will need to download a pretrained model. In this tutorial, we will use a pretrained chatbot model, `DialoGPT`, and its tokenizer from the [Hugging Face Hub](https://huggingface.co/microsoft/DialoGPT-medium), but you can replace this with your own model.

-

-Here is the code to load `DialoGPT` from Hugging Face `transformers`.

-

-```python

-from transformers import AutoModelForCausalLM, AutoTokenizer

-import torch

-

-tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")

-model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

+```python

+def bot(history):

+ response = "**That's cool!**"

+ history[-1][1] = response

+ return history

```

-## 2. Define a `predict` function

-

-Next, you will need to define a function that takes in the *user input* as well as the previous *chat history* to generate a response.

-

-In the case of our pretrained model, it will look like this:

+In addition, it can display media files such as images, audio, and video. To pass in a media file, pass it as a tuple of two strings, `(filepath, alt_text)`. The `alt_text` is optional, so you can also pass in a one-element tuple, `(filepath,)`, like this:

```python

-def predict(input, history=[]):

- # tokenize the new input sentence

- new_user_input_ids = tokenizer.encode(input + tokenizer.eos_token, return_tensors='pt')

-

- # append the new user input tokens to the chat history

- bot_input_ids = torch.cat([torch.LongTensor(history), new_user_input_ids], dim=-1)

-

- # generate a response

- history = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id).tolist()

-

- # convert the tokens to text, and then split the responses into lines

- response = tokenizer.decode(history[0]).split("<|endoftext|>")

- response = [(response[i], response[i+1]) for i in range(0, len(response)-1, 2)] # convert to tuples of list

- return response, history

+def add_file(history, file):
+    history = history + [((file.name,), None)]
+    return history

```
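To see how these handlers cooperate once they are chained with `.then`, the sketch below drives `add_text`, `add_file`, and `bot` (copied from the `chatbot_multimodal` demo) by hand, with no Gradio server. Two assumptions keep it runnable: a `types.SimpleNamespace` with a `.name` attribute stands in for the uploaded file (the demo only reads `file.name`), and each pair is converted to a list before `bot` runs, because `bot` assigns into `history[-1]` and the tuples appended by `add_text` and `add_file` do not support item assignment. In the running app, the history passed to `bot` comes back from the browser rather than being the same tuple objects, which is presumably why the demo works there. `as_lists` is a hypothetical helper.

```python
from types import SimpleNamespace


# The three handlers below are copied from the chatbot_multimodal demo;
# only the driver code at the bottom is new.
def add_text(history, text):
    history = history + [(text, None)]
    return history, ""


def add_file(history, file):
    history = history + [((file.name,), None)]
    return history


def bot(history):
    response = "**That's cool!**"
    history[-1][1] = response
    return history


def as_lists(history):
    # Hypothetical helper: turn each (user, bot) tuple into a mutable list,
    # approximating the history that bot() receives back from the browser.
    return [list(pair) for pair in history]


# Stand-in for the uploaded file object; the demo only reads its .name.
uploaded = SimpleNamespace(name="/tmp/lion.jpg")

history, textbox = add_text([], "Look at this:")  # second output clears the textbox
history = bot(as_lists(history))
history = add_file(history, uploaded)
history = bot(as_lists(history))
```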

-Let's break this down. The function takes two parameters:

-

-* `input`: which is what the user enters (through the Gradio GUI) in a particular step of the conversation.

-* `history`: which represents the **state**, consisting of the list of user and bot responses. To create a stateful Gradio demo, we *must* pass in a parameter to represent the state, and we set the default value of this parameter to be the initial value of the state (in this case, the empty list since this is what we would like the chat history to be at the start).

-

-Then, the function tokenizes the input and concatenates it with the tokens corresponding to the previous user and bot responses. Then, this is fed into the pretrained model to get a prediction. Finally, we do some cleaning up so that we can return two values from our function:

-

-* `response`: which is a list of tuples of strings corresponding to all of the user and bot responses. This will be rendered as the output in the Gradio demo.

-* `history` variable, which is the token representation of all of the user and bot responses. In stateful Gradio demos, we *must* return the updated state at the end of the function.

-

-## 3. Create a Gradio Demo using Blocks

-

-Now that we have our predictive function set up, we can create a Gradio demo around it.

-

-In this case, our function takes in two values, a text input and a state input. The corresponding input components in `gradio` are `"text"` and `"state"`.

-

-The function also returns two values. We will display the list of responses using the dedicated `"chatbot"` component and use the `"state"` output component type for the second return value.

-

-Note that the `"state"` input and output components are not displayed.

-

-```python

-with gr.Blocks() as demo:

- chatbot = gr.Chatbot()

- state = gr.State([])

-

- with gr.Row():

- txt = gr.Textbox(show_label=False, placeholder="Enter text and press enter").style(container=False)

-

- txt.submit(predict, [txt, state], [chatbot, state])

-

-demo.launch()

-```

-

-This produces the following demo, which you can try right here in your browser (try typing in some simple greetings like "Hi!" to get started):

-

-

+Putting this together, we can create a *multimodal* chatbot with a textbox for a user to submit text and a file upload button to submit image, audio, or video files. The rest of the code looks much the same as before:

+$code_chatbot_multimodal

+$demo_chatbot_multimodal

-## 4. Chatbot Markdown Support

-

-

-The `gr.Chatbot` also supports a subset of markdown including bold, italics, code, and images. Let's take a look at how we can use the markdown support to allow a user to submit images to the chatbot component.

-

-```python

-def add_image(state, image):

- state = state + [(f"", "Cool pic!")]

- return state, state

-```

-

-

-Notice the `add_image` function takes in both the `state` and `image` and appends the user submitted image to `state` by using markdown.

-

-

-```python

-import gradio as gr

-

-def add_text(state, text):

- state = state + [(text, text + "?")]

- return state, state

-

-def add_image(state, image):

- state = state + [(f"", "Cool pic!")]

- return state, state

-

-

-with gr.Blocks(css="#chatbot .overflow-y-auto{height:500px}") as demo:

- chatbot = gr.Chatbot(elem_id="chatbot")

- state = gr.State([])

-

- with gr.Row():

- with gr.Column(scale=0.85):

- txt = gr.Textbox(show_label=False, placeholder="Enter text and press enter, or upload an image").style(container=False)

- with gr.Column(scale=0.15, min_width=0):

- btn = gr.UploadButton("🖼️", file_types=["image"])

-

- txt.submit(add_text, [state, txt], [state, chatbot])

- txt.submit(lambda :"", None, txt)

- btn.upload(add_image, [state, btn], [state, chatbot])

-

-demo.launch()

-```

-

-This is the code for a chatbot with a textbox for a user to submit text and an image upload button to submit images. The rest of the demo code is creating an interface using blocks; basically adding a couple more components compared to section 3.

-

-This code will produce a demo like the one below:

-

-

-

-And you're done! That's all the code you need to build an interface for your chatbot model. Here are some references that you may find useful:

-

-* Gradio's [Quickstart guide](https://gradio.app/quickstart/)

-* The first chatbot demo [chatbot demo](https://huggingface.co/spaces/dawood/chatbot-guide) and [complete code](https://huggingface.co/spaces/dawood/chatbot-guide/blob/main/app.py) (on Hugging Face Spaces)

-* The final chatbot with markdown support [chatbot demo](https://huggingface.co/spaces/dawood/chatbot-guide-multimodal) and [complete code](https://huggingface.co/spaces/dawood/chatbot-guide-multimodal/blob/main/app.py) (on Hugging Face Spaces)

+And you're done! That's all the code you need to build an interface for your chatbot model.

\ No newline at end of file

diff --git a/test/test_components.py b/test/test_components.py

index fb7b280a4b..d977c3aebf 100644

--- a/test/test_components.py

+++ b/test/test_components.py

@@ -1687,9 +1687,48 @@ class TestChatbot:

assert chatbot.postprocess([("You are **cool**", "so are *you*")]) == [

("You are cool", "so are you")

]

+

+ multimodal_msg = [

+ (("driving.mp4",), "cool video"),

+ (("cantina.wav",), "cool audio"),

+ (("lion.jpg", "A lion"), "cool pic"),

+ ]

+ processed_multimodal_msg = [

+ (

+ {

+ "name": "driving.mp4",

+ "mime_type": "video/mp4",

+ "alt_text": None,

+ "data": None,

+ "is_file": True,

+ },

+ "cool video",

+ ),

+ (

+ {

+ "name": "cantina.wav",

+ "mime_type": "audio/wav",

+ "alt_text": None,

+ "data": None,

+ "is_file": True,

+ },

+ "cool audio",

+ ),

+ (

+ {

+ "name": "lion.jpg",

+ "mime_type": "image/jpeg",

+ "alt_text": "A lion",

+ "data": None,

+ "is_file": True,

+ },

+ "cool pic",

+ ),

+ ]

+

+ assert chatbot.postprocess(multimodal_msg) == processed_multimodal_msg

assert chatbot.get_config() == {

"value": [],

- "color_map": None,

"label": None,

"show_label": True,

"interactive": None,

diff --git a/ui/packages/app/src/components/Chatbot/Chatbot.svelte b/ui/packages/app/src/components/Chatbot/Chatbot.svelte

index 6f73943e8e..26b6b84472 100644

--- a/ui/packages/app/src/components/Chatbot/Chatbot.svelte

+++ b/ui/packages/app/src/components/Chatbot/Chatbot.svelte

@@ -4,21 +4,35 @@

import type { LoadingStatus } from "../StatusTracker/types";

import type { Styles } from "@gradio/utils";

import { Chat } from "@gradio/icons";

+ import type { FileData } from "@gradio/upload";

+ import { normalise_file } from "@gradio/upload";

export let elem_id: string = "";

export let visible: boolean = true;

- export let value: Array<[string | null, string | null]> = [];

+ export let value: Array<

+ [string | FileData | null, string | FileData | null]

+ > = [];

+ let _value: Array<[string | FileData | null, string | FileData | null]>;

export let style: Styles = {};

export let label: string;

export let show_label: boolean = true;

- export let color_map: Record = {};

export let root: string;

+ export let root_url: null | string;

- $: if (!style.color_map && Object.keys(color_map).length) {

- style.color_map = color_map;

- }

+ const redirect_src_url = (src: string) =>

+ src.replace('src="/file', `src="${root}file`);

- export let loading_status: LoadingStatus | undefined;

+ $: _value = value

+ ? value.map(([user_msg, bot_msg]) => [

+ typeof user_msg === "string"

+ ? redirect_src_url(user_msg)

+ : normalise_file(user_msg, root, root_url),

+ typeof bot_msg === "string"

+ ? redirect_src_url(bot_msg)

+ : normalise_file(bot_msg, root, root_url)

+ ])

+ : [];

+ export let loading_status: LoadingStatus | undefined = undefined;

@@ -33,8 +47,7 @@

{/if}
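The `redirect_src_url` helper in `Chatbot.svelte` rewrites relative `src="/file...` attributes in HTML messages so they point at the app's `root`. A Python rendering of the same string operation makes one detail easy to check: JavaScript's `String.prototype.replace` with a string pattern replaces only the first match, so `count=1` is passed here to reproduce that. The `root` value and file names are hypothetical, and `root` is assumed to end with `/`, as the template `${root}file` suggests.

```python
def redirect_src_url(src: str, root: str) -> str:
    # Mirrors: src.replace('src="/file', `src="${root}file`)
    # A JS string-pattern replace rewrites only the FIRST occurrence,
    # so count=1 keeps the Python version faithful to it.
    return src.replace('src="/file', f'src="{root}file', 1)


root = "http://localhost:7860/"  # hypothetical server root, assumed to end with "/"
one = redirect_src_url('<img src="/file=lion.jpg">', root)
two = redirect_src_url('<img src="/file=a.png"><img src="/file=b.png">', root)
```

If a single message can contain several such images, only the first would be redirected; a global replacement (for example `replaceAll` on the TypeScript side) would presumably be needed for the rest.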

diff --git a/ui/packages/app/src/components/Chatbot/Chatbot.test.ts b/ui/packages/app/src/components/Chatbot/Chatbot.test.ts

index 959224256d..e810cda37c 100644

--- a/ui/packages/app/src/components/Chatbot/Chatbot.test.ts

+++ b/ui/packages/app/src/components/Chatbot/Chatbot.test.ts

@@ -4,11 +4,11 @@ import { cleanup, render, get_text } from "@gradio/tootils";

import Chatbot from "./Chatbot.svelte";

import type { LoadingStatus } from "../StatusTracker/types";

-const loading_status = {

+const loading_status: LoadingStatus = {

eta: 0,

queue_position: 1,

queue_size: 1,

- status: "complete" as LoadingStatus["status"],

+ status: "complete",

scroll_to_output: false,

visible: true,

fn_index: 0

@@ -22,7 +22,8 @@ describe("Chatbot", () => {

loading_status,

label: "hello",

value: [["user message one", "bot message one"]],

- root: ""

+ root: "",

+ root_url: ""

});

const bot = getAllByTestId("user")[0];

@@ -37,7 +38,8 @@ describe("Chatbot", () => {

loading_status,

label: "hello",

value: [["user message one", "bot message one"]],

- root: ""

+ root: "",

+ root_url: ""

});

const bot = getAllByTestId("user");

diff --git a/ui/packages/chatbot/package.json b/ui/packages/chatbot/package.json

index 762cdbfb8c..5db42768d5 100644

--- a/ui/packages/chatbot/package.json

+++ b/ui/packages/chatbot/package.json

@@ -9,6 +9,7 @@

"private": true,

"dependencies": {

"@gradio/theme": "workspace:^0.0.1",

- "@gradio/utils": "workspace:^0.0.1"

+ "@gradio/utils": "workspace:^0.0.1",

+ "@gradio/upload": "workspace:^0.0.1"

}

}

diff --git a/ui/packages/chatbot/src/ChatBot.svelte b/ui/packages/chatbot/src/ChatBot.svelte

index 0ad3299df9..dfb0a61cfe 100644

--- a/ui/packages/chatbot/src/ChatBot.svelte

+++ b/ui/packages/chatbot/src/ChatBot.svelte

@@ -1,11 +1,15 @@



diff --git a/demo/chatbot_dialogpt/run.ipynb b/demo/chatbot_dialogpt/run.ipynb

new file mode 100644

index 0000000000..58b5d3bf58

--- /dev/null

+++ b/demo/chatbot_dialogpt/run.ipynb

@@ -0,0 +1 @@

+{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_dialogpt"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "from transformers import AutoModelForCausalLM, AutoTokenizer\n", "import torch\n", "\n", "tokenizer = AutoTokenizer.from_pretrained(\"microsoft/DialoGPT-medium\")\n", "model = AutoModelForCausalLM.from_pretrained(\"microsoft/DialoGPT-medium\")\n", "\n", "def user(message, history):\n", " return \"\", history + [[message, None]]\n", "\n", "\n", "# bot_message = random.choice([\"Yes\", \"No\"])\n", "# history[-1][1] = bot_message\n", "# time.sleep(1)\n", "# return history\n", "\n", "# def predict(input, history=[]):\n", "# # tokenize the new input sentence\n", "\n", "def bot(history):\n", " user_message = history[-1][0]\n", " new_user_input_ids = tokenizer.encode(user_message + tokenizer.eos_token, return_tensors='pt')\n", "\n", " # append the new user input tokens to the chat history\n", " bot_input_ids = torch.cat([torch.LongTensor(history), new_user_input_ids], dim=-1)\n", "\n", " # generate a response \n", " history = model.generate(bot_input_ids, max_length=1000, pad_token_id=tokenizer.eos_token_id).tolist()\n", "\n", " # convert the tokens to text, and then split the responses into lines\n", " response = tokenizer.decode(history[0]).split(\"<|endoftext|>\")\n", " response = [(response[i], response[i+1]) for i in range(0, len(response)-1, 2)] # convert to tuples of list\n", " return history\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot()\n", " msg = gr.Textbox()\n", " clear = gr.Button(\"Clear\")\n", "\n", " msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(\n", " bot, chatbot, chatbot\n", " )\n", " clear.click(lambda: None, None, chatbot, queue=False)\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_demo/run.py b/demo/chatbot_dialogpt/run.py

similarity index 56%

rename from demo/chatbot_demo/run.py

rename to demo/chatbot_dialogpt/run.py

index 937d901a67..18bbfdf190 100644

--- a/demo/chatbot_demo/run.py

+++ b/demo/chatbot_dialogpt/run.py

@@ -5,9 +5,21 @@ import torch

tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")

model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

-def predict(input, history=[]):

- # tokenize the new input sentence

- new_user_input_ids = tokenizer.encode(input + tokenizer.eos_token, return_tensors='pt')

+def user(message, history):

+ return "", history + [[message, None]]

+

+

+# bot_message = random.choice(["Yes", "No"])

+# history[-1][1] = bot_message

+# time.sleep(1)

+# return history

+

+# def predict(input, history=[]):

+# # tokenize the new input sentence

+

+def bot(history):

+ user_message = history[-1][0]

+ new_user_input_ids = tokenizer.encode(user_message + tokenizer.eos_token, return_tensors='pt')

# append the new user input tokens to the chat history

bot_input_ids = torch.cat([torch.LongTensor(history), new_user_input_ids], dim=-1)

@@ -18,16 +30,17 @@ def predict(input, history=[]):

# convert the tokens to text, and then split the responses into lines

response = tokenizer.decode(history[0]).split("<|endoftext|>")

response = [(response[i], response[i+1]) for i in range(0, len(response)-1, 2)] # convert to tuples of list

- return response, history

+ return history

with gr.Blocks() as demo:

chatbot = gr.Chatbot()

- state = gr.State([])

+ msg = gr.Textbox()

+ clear = gr.Button("Clear")

- with gr.Row():

- txt = gr.Textbox(show_label=False, placeholder="Enter text and press enter").style(container=False)

+ msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(

+ bot, chatbot, chatbot

+ )

+ clear.click(lambda: None, None, chatbot, queue=False)

- txt.submit(predict, [txt, state], [chatbot, state])

-

if __name__ == "__main__":

demo.launch()
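In the renamed `chatbot_dialogpt` demo, `bot(history)` now receives the Chatbot's value, a list of `[user_message, bot_message]` string pairs. However, it still passes that list to `torch.LongTensor(...)` as though it held token ids, and it returns the generated token ids rather than chat pairs. One way to reconcile the two, sketched here under our own assumptions, is to rebuild the conversation as a single `<|endoftext|>`-separated string (the separator the demo already splits on). That string would be encoded with `tokenizer.encode(prompt, return_tensors="pt")` before calling `model.generate`, and the decoded output turned back into pairs. Only the string handling is shown, so the sketch runs without `transformers` or `torch`; `history_to_prompt` and `transcript_to_pairs` are hypothetical helper names.

```python
EOS = "<|endoftext|>"  # the separator the demo splits decoded text on


def history_to_prompt(history, eos=EOS):
    """Flatten Chatbot pairs into one eos-terminated transcript string.

    The pending turn [user_message, None] contributes only the user message.
    """
    return "".join(
        message + eos
        for pair in history
        for message in pair
        if message is not None
    )


def transcript_to_pairs(decoded, eos=EOS):
    """The demo's pairing step, returning lists (not tuples) so later
    handlers can still assign into history[-1]."""
    turns = decoded.split(eos)
    return [[turns[i], turns[i + 1]] for i in range(0, len(turns) - 1, 2)]


history = [["Hi", "Hello!"], ["How are you?", None]]
prompt = history_to_prompt(history)
# Stand-in for tokenizer.decode(model.generate(...)[0]); no model is run here.
decoded = prompt + "Good, thanks." + EOS
new_history = transcript_to_pairs(decoded)
```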

diff --git a/demo/chatbot_demo/screenshot.gif b/demo/chatbot_dialogpt/screenshot.gif

similarity index 100%

rename from demo/chatbot_demo/screenshot.gif

rename to demo/chatbot_dialogpt/screenshot.gif

diff --git a/demo/chatbot_demo/screenshot.png b/demo/chatbot_dialogpt/screenshot.png

similarity index 100%

rename from demo/chatbot_demo/screenshot.png

rename to demo/chatbot_dialogpt/screenshot.png

diff --git a/demo/chatbot_multimodal/run.ipynb b/demo/chatbot_multimodal/run.ipynb

index 77b8201e19..450ac4c23a 100644

--- a/demo/chatbot_multimodal/run.ipynb

+++ b/demo/chatbot_multimodal/run.ipynb

@@ -1 +1 @@

-{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_multimodal"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "from urllib.parse import quote\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot(elem_id=\"chatbot\").style(height=500)\n", "\n", " with gr.Row():\n", " with gr.Column(scale=0.85):\n", " txt = gr.Textbox(\n", " show_label=False,\n", " placeholder=\"Enter text and press enter, or upload an image\",\n", " ).style(container=False)\n", " with gr.Column(scale=0.15, min_width=0):\n", " btn = gr.UploadButton(\"\ud83d\uddbc\ufe0f\", file_types=[\"image\"])\n", "\n", " def add_text(history, text):\n", " history = history + [(text, None)]\n", " return history, \"\"\n", "\n", " def add_image(history, image):\n", " history = history + [(f\"})\", None)]\n", " return history\n", "\n", " def bot_response(history):\n", " response = \"Cool!\"\n", " history[-1][1] = response\n", " return history\n", "\n", " txt.submit(add_text, [chatbot, txt], [chatbot, txt]).then(\n", " bot_response, chatbot, chatbot\n", " )\n", " btn.upload(add_image, [chatbot, btn], [chatbot]).then(\n", " bot_response, chatbot, chatbot\n", " )\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

+{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_multimodal"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "\n", "def add_text(history, text):\n", " history = history + [(text, None)]\n", " return history, \"\"\n", "\n", "def add_file(history, file):\n", " history = history + [((file.name,), None)]\n", " return history\n", "\n", "def bot(history):\n", " response = \"**That's cool!**\"\n", " history[-1][1] = response\n", " return history\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot([], elem_id=\"chatbot\").style(height=750)\n", " \n", " with gr.Row():\n", " with gr.Column(scale=0.85):\n", " txt = gr.Textbox(\n", " show_label=False,\n", " placeholder=\"Enter text and press enter, or upload an image\",\n", " ).style(container=False)\n", " with gr.Column(scale=0.15, min_width=0):\n", " btn = gr.UploadButton(\"\ud83d\udcc1\", file_types=[\"image\", \"video\", \"audio\"])\n", " \n", " txt.submit(add_text, [chatbot, txt], [chatbot, txt]).then(\n", " bot, chatbot, chatbot\n", " )\n", " btn.upload(add_file, [chatbot, btn], [chatbot]).then(\n", " bot, chatbot, chatbot\n", " )\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_multimodal/run.py b/demo/chatbot_multimodal/run.py

index 6a5344db45..1bc15a67fb 100644

--- a/demo/chatbot_multimodal/run.py

+++ b/demo/chatbot_multimodal/run.py

@@ -1,9 +1,21 @@

import gradio as gr

-from urllib.parse import quote

+

+def add_text(history, text):

+ history = history + [(text, None)]

+ return history, ""

+

+def add_file(history, file):

+ history = history + [((file.name,), None)]

+ return history

+

+def bot(history):

+ response = "**That's cool!**"

+ history[-1][1] = response

+ return history

with gr.Blocks() as demo:

- chatbot = gr.Chatbot(elem_id="chatbot").style(height=500)

-

+ chatbot = gr.Chatbot([], elem_id="chatbot").style(height=750)

+

with gr.Row():

with gr.Column(scale=0.85):

txt = gr.Textbox(

@@ -11,26 +23,13 @@ with gr.Blocks() as demo:

placeholder="Enter text and press enter, or upload an image",

).style(container=False)

with gr.Column(scale=0.15, min_width=0):

- btn = gr.UploadButton("🖼️", file_types=["image"])

-

- def add_text(history, text):

- history = history + [(text, None)]

- return history, ""

-

- def add_image(history, image):

- history = history + [(f"})", None)]

- return history

-

- def bot_response(history):

- response = "Cool!"

- history[-1][1] = response

- return history

-

+ btn = gr.UploadButton("📁", file_types=["image", "video", "audio"])

+

txt.submit(add_text, [chatbot, txt], [chatbot, txt]).then(

- bot_response, chatbot, chatbot

+ bot, chatbot, chatbot

)

- btn.upload(add_image, [chatbot, btn], [chatbot]).then(

- bot_response, chatbot, chatbot

+ btn.upload(add_file, [chatbot, btn], [chatbot]).then(

+ bot, chatbot, chatbot

)

if __name__ == "__main__":

diff --git a/demo/chatbot_simple/run.ipynb b/demo/chatbot_simple/run.ipynb

new file mode 100644

index 0000000000..78ec25d29a

--- /dev/null

+++ b/demo/chatbot_simple/run.ipynb

@@ -0,0 +1 @@

+{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_simple"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "import random\n", "import time\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot()\n", " msg = gr.Textbox()\n", " clear = gr.Button(\"Clear\")\n", "\n", " def user(user_message, history):\n", " return \"\", history + [[user_message, None]]\n", "\n", " def bot(history):\n", " bot_message = random.choice([\"Yes\", \"No\"])\n", " history[-1][1] = bot_message\n", " time.sleep(1)\n", " return history\n", "\n", " msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(\n", " bot, chatbot, chatbot\n", " )\n", " clear.click(lambda: None, None, chatbot, queue=False)\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_simple/run.py b/demo/chatbot_simple/run.py

new file mode 100644

index 0000000000..2057b311e0

--- /dev/null

+++ b/demo/chatbot_simple/run.py

@@ -0,0 +1,25 @@

+import gradio as gr
+import random
+import time
+
+with gr.Blocks() as demo:
+    chatbot = gr.Chatbot()
+    msg = gr.Textbox()
+    clear = gr.Button("Clear")
+
+    def user(user_message, history):
+        return "", history + [[user_message, None]]
+
+    def bot(history):
+        bot_message = random.choice(["Yes", "No"])
+        history[-1][1] = bot_message
+        time.sleep(1)
+        return history
+
+    msg.submit(user, [msg, chatbot], [msg, chatbot], queue=False).then(
+        bot, chatbot, chatbot
+    )
+    clear.click(lambda: None, None, chatbot, queue=False)
+
+if __name__ == "__main__":
+    demo.launch()

diff --git a/demo/chatbot_simple_demo/run.ipynb b/demo/chatbot_simple_demo/run.ipynb

deleted file mode 100644

index 55365909ed..0000000000

--- a/demo/chatbot_simple_demo/run.ipynb

+++ /dev/null

@@ -1 +0,0 @@

-{"cells": [{"cell_type": "markdown", "id": 302934307671667531413257853548643485645, "metadata": {}, "source": ["# Gradio Demo: chatbot_simple_demo"]}, {"cell_type": "code", "execution_count": null, "id": 272996653310673477252411125948039410165, "metadata": {}, "outputs": [], "source": ["!pip install -q gradio "]}, {"cell_type": "code", "execution_count": null, "id": 288918539441861185822528903084949547379, "metadata": {}, "outputs": [], "source": ["import gradio as gr\n", "import random\n", "import time\n", "\n", "with gr.Blocks() as demo:\n", " chatbot = gr.Chatbot()\n", " msg = gr.Textbox()\n", " clear = gr.Button(\"Clear\")\n", "\n", " def user_message(message, history):\n", " return \"\", history + [[message, None]]\n", "\n", " def bot_message(history):\n", " response = random.choice([\"Yes\", \"No\"])\n", " history[-1][1] = response\n", " time.sleep(1)\n", " return history\n", "\n", " msg.submit(user_message, [msg, chatbot], [msg, chatbot], queue=False).then(\n", " bot_message, chatbot, chatbot\n", " )\n", " clear.click(lambda: None, None, chatbot, queue=False)\n", "\n", "if __name__ == \"__main__\":\n", " demo.launch()\n"]}], "metadata": {}, "nbformat": 4, "nbformat_minor": 5}

\ No newline at end of file

diff --git a/demo/chatbot_simple_demo/run.py b/demo/chatbot_simple_demo/run.py

deleted file mode 100644

index c01cfa03ea..0000000000

--- a/demo/chatbot_simple_demo/run.py

+++ /dev/null

@@ -1,25 +0,0 @@

-import gradio as gr

-import random

-import time

-

-with gr.Blocks() as demo:

- chatbot = gr.Chatbot()

- msg = gr.Textbox()

- clear = gr.Button("Clear")

-

- def user_message(message, history):

- return "", history + [[message, None]]

-

- def bot_message(history):

- response = random.choice(["Yes", "No"])

- history[-1][1] = response

- time.sleep(1)

- return history

-

- msg.submit(user_message, [msg, chatbot], [msg, chatbot], queue=False).then(

- bot_message, chatbot, chatbot

- )

- clear.click(lambda: None, None, chatbot, queue=False)

-

-if __name__ == "__main__":

- demo.launch()

diff --git a/gradio/components.py b/gradio/components.py

index 92532ce390..3860c65d5e 100644

--- a/gradio/components.py

+++ b/gradio/components.py

@@ -2901,7 +2901,7 @@ class State(IOComponent, SimpleSerializable):

Preprocessing: No preprocessing is performed

Postprocessing: No postprocessing is performed

- Demos: chatbot_demo, blocks_simple_squares

+ Demos: blocks_simple_squares

Guides: creating_a_chatbot, real_time_speech_recognition

"""

@@ -3928,9 +3928,9 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

"""

Displays a chatbot output showing both user submitted messages and responses. Supports a subset of Markdown including bold, italics, code, and images.

Preprocessing: this component does *not* accept input.

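+ Postprocessing: expects function to return a {List[Tuple[str | None | Tuple, str | None | Tuple]]}, a list of tuples of user messages and their responses. Each message should be a string, a tuple, or None. A string may include Markdown. A tuple should consist of (string filepath to image/video/audio, [optional string alt text]). Messages that are `None` are not displayed.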

+ Postprocessing: expects function to return a {List[Tuple[str | None | Tuple, str | None | Tuple]]}, a list of tuples with user message and response messages. Messages should be strings, tuples, or Nones. If the message is a string, it can include Markdown. If it is a tuple, it should consist of (string filepath to image/video/audio, [optional string alt text]). Messages that are `None` are not displayed.

- Demos: chatbot_demo, chatbot_multimodal

+ Demos: chatbot_simple, chatbot_multimodal

"""

def __init__(

@@ -3956,11 +3956,9 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

"""

if color_map is not None:

warnings.warn(

- "The 'color_map' parameter has been moved from the constructor to `Chatbot.style()` ",

+ "The 'color_map' parameter has been deprecated.",

)

- self.color_map = color_map

self.md = utils.get_markdown_parser()

-

IOComponent.__init__(

self,

label=label,

@@ -3975,20 +3973,17 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

def get_config(self):

return {

"value": self.value,

- "color_map": self.color_map,

**IOComponent.get_config(self),

}

@staticmethod

def update(

value: Any | Literal[_Keywords.NO_VALUE] | None = _Keywords.NO_VALUE,

- color_map: Tuple[str, str] | None = None,

label: str | None = None,

show_label: bool | None = None,

visible: bool | None = None,

):

updated_config = {

- "color_map": color_map,

"label": label,

"show_label": show_label,

"visible": visible,

@@ -3997,23 +3992,58 @@ class Chatbot(Changeable, IOComponent, JSONSerializable):

}

return updated_config

+    def _process_chat_messages(
+        self, chat_message: str | Tuple | List | Dict | None
+    ) -> str | Dict | None:
+        if chat_message is None:
+            return None
+        elif isinstance(chat_message, (tuple, list)):
+            mime_type = processing_utils.get_mimetype(chat_message[0])
+            return {
+                "name": chat_message[0],
+                "mime_type": mime_type,
+                "alt_text": chat_message[1] if len(chat_message) > 1 else None,
+                "data": None,  # These last two fields are filled in by the frontend
+                "is_file": True,
+            }
+        elif isinstance(
+            chat_message, dict
+        ):  # This happens for previously processed messages
+            return chat_message
+        elif isinstance(chat_message, str):
+            return self.md.renderInline(chat_message)
+        else:
+            raise ValueError(f"Invalid message for Chatbot component: {chat_message}")
+

def postprocess(

- self, y: List[Tuple[str | None, str | None]]

- ) -> List[Tuple[str | None, str | None]]:

+ self,

+ y: List[

+ Tuple[str | Tuple | List | Dict | None, str | Tuple | List | Dict | None]

+ ],

+ ) -> List[Tuple[str | Dict | None, str | Dict | None]]:

"""

Parameters:

- y: List of tuples representing the message and response pairs. Each message and response should be a string, which may be in Markdown format.

+ y: List of tuples representing the message and response pairs. Each message and response should be a string, which may be in Markdown format. It can also be a tuple whose first element is a string filepath or URL to an image/video/audio, and second (optional) element is the alt text, in which case the media file is displayed. It can also be None, in which case that message is not displayed.

Returns:

- List of tuples representing the message and response. Each message and response will be a string of HTML.

+ List of tuples representing the message and response. Each message and response will be a string of HTML, or a dictionary with media information.

"""

if y is None:

return []

- for i, (message, response) in enumerate(y):

- y[i] = (

- None if message is None else self.md.renderInline(message),

- None if response is None else self.md.renderInline(response),

+ processed_messages = []

+ for message_pair in y:

+ assert isinstance(

+ message_pair, (tuple, list)

+ ), f"Expected a list of lists or list of tuples. Received: {message_pair}"

+ assert (

+ len(message_pair) == 2

+ ), f"Expected a list of lists of length 2 or list of tuples of length 2. Received: {message_pair}"

+ processed_messages.append(

+ (

+ self._process_chat_messages(message_pair[0]),

+ self._process_chat_messages(message_pair[1]),

+ )

)

- return y

+ return processed_messages
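The new value format is easier to see outside the diff. Below is a minimal, standalone sketch of the normalization that `postprocess` and `_process_chat_messages` perform. It is not Gradio's code. It uses Python's standard `mimetypes.guess_type` in place of `processing_utils.get_mimetype`, and it replaces the Markdown renderer (`self.md.renderInline`) with a hypothetical `render_inline` helper that only HTML-escapes. It also raises `ValueError` where the diff uses `assert`.

```python
import html
import mimetypes
from typing import Any, Dict, List, Optional, Tuple, Union

# A chat message may be text, a (filepath_or_url, [alt_text]) tuple/list,
# an already-processed dict, or None.
Message = Union[str, Tuple, List, Dict, None]


def render_inline(text: str) -> str:
    # Hypothetical stand-in for the Markdown renderer; it only HTML-escapes.
    return html.escape(text)


def process_chat_message(message: Message) -> Optional[Union[str, Dict[str, Any]]]:
    if message is None:
        return None  # this side of the pair is not displayed
    if isinstance(message, (tuple, list)):
        # Media message: first element is a filepath or URL, second is optional alt text.
        mime_type, _encoding = mimetypes.guess_type(message[0])
        return {
            "name": message[0],
            "mime_type": mime_type,
            "alt_text": message[1] if len(message) > 1 else None,
            "data": None,
            "is_file": True,
        }
    if isinstance(message, dict):
        return message  # already processed on a previous pass
    if isinstance(message, str):
        return render_inline(message)
    raise ValueError(f"Invalid message for Chatbot component: {message}")


def postprocess(history: Optional[List[Any]]) -> List[Tuple[Any, Any]]:
    if history is None:
        return []
    processed = []
    for pair in history:
        # Each entry must be a (message, response) pair given as a tuple or list.
        if not isinstance(pair, (tuple, list)) or len(pair) != 2:
            raise ValueError(f"Expected a list of (message, response) pairs. Received: {pair}")
        processed.append((process_chat_message(pair[0]), process_chat_message(pair[1])))
    return processed


if __name__ == "__main__":
    value = [
        (("lion.jpg", "A lion"), "cool pic"),
        ("hello", None),
    ]
    for pair in postprocess(value):
        print(pair)
```

Returning dicts unchanged means a history that has already been processed once can be passed back in without being wrapped a second time.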

def style(self, height: int | None = None, **kwargs):

"""

diff --git a/guides/02_building-interfaces/01_interface-state.md b/guides/02_building-interfaces/01_interface-state.md

index 64fced4e5a..9f3c44df51 100644

--- a/guides/02_building-interfaces/01_interface-state.md

+++ b/guides/02_building-interfaces/01_interface-state.md

@@ -20,8 +20,8 @@ Another type of data persistence Gradio supports is session **state**, where dat

A chatbot is an example where you would need session state - you want access to a user's previous submissions, but you cannot store chat history in a global variable, because then chat history would get jumbled between different users.

-$code_chatbot_demo

-$demo_chatbot_demo

+$code_chatbot_dialogpt

+$demo_chatbot_dialogpt

Notice how the state persists across submits within each page, but if you load this demo in another tab (or refresh the page), the demos will not share chat history.
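The difference between a global variable and session state can be sketched without Gradio at all. In this hypothetical echo bot, `respond_global` keeps one module-level history that every visitor shares. By contrast, `respond_with_state` receives the history as an argument and returns it, which is the role a `gr.State` input and output plays in the demo. The function names and the echo reply are illustrative assumptions.

```python
from typing import List, Tuple

History = List[Tuple[str, str]]

# Anti-pattern: a single module-level history shared by every visitor.
GLOBAL_HISTORY: History = []


def respond_global(message: str) -> History:
    GLOBAL_HISTORY.append((message, f"echo: {message}"))
    return list(GLOBAL_HISTORY)


def respond_with_state(message: str, history: History) -> Tuple[History, History]:
    # Session-state pattern: history comes in as an argument and goes back out
    # (once for the chat display, once for the stored state), so each session
    # only ever sees its own list.
    history = history + [(message, f"echo: {message}")]
    return history, history


if __name__ == "__main__":
    respond_global("hi from alice")
    print(respond_global("hi from bob"))  # bob also sees alice's message

    alice_state: History = []
    bob_state: History = []
    _, alice_state = respond_with_state("hi from alice", alice_state)
    _, bob_state = respond_with_state("hi from bob", bob_state)
    print(bob_state)  # only bob's own message
```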

diff --git a/guides/03_building-with-blocks/01_blocks-and-event-listeners.md b/guides/03_building-with-blocks/01_blocks-and-event-listeners.md

index fe9cb8c705..b3cd43bed9 100644

--- a/guides/03_building-with-blocks/01_blocks-and-event-listeners.md

+++ b/guides/03_building-with-blocks/01_blocks-and-event-listeners.md

@@ -129,8 +129,8 @@ You can also run events consecutively by using the `then` method of an event lis

For example, in the chatbot example below, we first update the chatbot with the user message immediately, and then update the chatbot with the computer response after a simulated delay.

-$code_chatbot_simple_demo

-$demo_chatbot_simple_demo

+$code_chatbot_simple

+$demo_chatbot_simple

The `.then()` method of an event listener executes the subsequent event regardless of whether the previous event raised any errors. If you'd like to only run subsequent events if the previous event executed successfully, use the `.success()` method, which takes the same arguments as `.then()`.
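The difference between `.then()` and `.success()` can be modeled with a toy runner. This is a hypothetical sketch, not Gradio's scheduler. `run_chain` runs a first event and then each chained event in order. A `"then"` step runs whether or not the previous step raised, while a `"success"` step runs only if the previous step completed without an error. The sketch also assumes that a skipped step triggers nothing chained after it.

```python
from typing import Callable, List, Tuple

Step = Callable[[], None]


def run_chain(first: Step, chained: List[Tuple[str, Step]]) -> List[str]:
    """Toy model of consecutive events; returns a log of what ran."""
    log: List[str] = []

    def run(fn: Step) -> bool:
        try:
            fn()
        except Exception as exc:
            log.append(f"{fn.__name__}: error ({exc})")
            return False
        log.append(f"{fn.__name__}: ok")
        return True

    previous_ok = run(first)
    for kind, fn in chained:
        if kind not in ("then", "success"):
            raise ValueError(f"Unknown chaining method: {kind}")
        if kind == "success" and not previous_ok:
            # Assumption: a skipped event never completes, so nothing
            # chained after it is triggered either.
            log.append(f"{fn.__name__}: skipped")
            break
        previous_ok = run(fn)
    return log


if __name__ == "__main__":
    def fails() -> None:
        raise RuntimeError("boom")

    def cleanup() -> None:
        pass

    print(run_chain(fails, [("then", cleanup)]))     # cleanup still runs
    print(run_chain(fails, [("success", cleanup)]))  # cleanup is skipped
```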

diff --git a/guides/06_other-tutorials/creating-a-chatbot.md b/guides/06_other-tutorials/creating-a-chatbot.md

index d06d6770f1..b93e110310 100644

--- a/guides/06_other-tutorials/creating-a-chatbot.md

+++ b/guides/06_other-tutorials/creating-a-chatbot.md

@@ -1,177 +1,67 @@

# How to Create a Chatbot

-Related spaces: https://huggingface.co/spaces/dawood/chatbot-guide, https://huggingface.co/spaces/dawood/chatbot-guide-multimodal, https://huggingface.co/spaces/ThomasSimonini/Chat-with-Gandalf-GPT-J6B, https://huggingface.co/spaces/gorkemgoknar/moviechatbot, https://huggingface.co/spaces/Kirili4ik/chat-with-Kirill

-Tags: NLP, TEXT, HTML

+Tags: NLP, TEXT, CHAT

## Introduction

-Chatbots are widely studied in natural language processing (NLP) research and are a common use case of NLP in industry. Because chatbots are designed to be used directly by customers and end users, it is important to validate that chatbots are behaving as expected when confronted with a wide variety of input prompts.

+Chatbots are widely used in natural language processing (NLP) research and industry. Because chatbots are designed to be used directly by customers and end users, it is important to validate that chatbots are behaving as expected when confronted with a wide variety of input prompts.

Using `gradio`, you can easily build a demo of your chatbot model and share that with a testing team, or test it yourself using an intuitive chatbot GUI.

-This tutorial will show how to take a pretrained chatbot model and deploy it with a Gradio interface in 4 steps. The live chatbot interface that we create will look something like this (try it!):

+This tutorial will show how to make two kinds of chatbot UIs with Gradio: a simple one to display text and a more sophisticated one that can handle media files as well. The simple chatbot interface that we create will look something like this:

-

+$demo_chatbot_simple

+

+**Prerequisite**: We'll be using the `gradio.Blocks` class to build our Chatbot demo.

+You can [read the Guide to Blocks first](https://gradio.app/quickstart/#blocks-more-flexibility-and-control) if you are not already familiar with it.

## A Simple Chatbot Demo

-Let's start with a simple demo, with no actual model. Our bot will randomly respond "yes" or "no" to any input.

+Let's start by recreating the simple demo above. As you may have noticed, our bot simply responds "yes" or "no" at random to any input. Here's the code to create this with Gradio:

-$code_chatbot_simple_demo

-$demo_chatbot_simple_demo

+$code_chatbot_simple

-The chatbot value stores the entire history of the conversation, as a list of response pairs between the user and bot. Note that we chain two event event listeners with `.then` after a user triggers a submit:

+There are three Gradio components here:

+* A `Chatbot`, whose value stores the entire history of the conversation, as a list of response pairs between the user and bot.

+* A `Textbox` where the user can type their message, and then hit enter/submit to trigger the chatbot response.

+* A `Clear` button to clear the entire Chatbot history

-1. The first method updates the chatbot with the user message and clears the input field. Because we want this to happen instantly, we set `queue=False`, which would skip any queue if it had been enabled.

-2. The second method waits for the bot to respond, and then updates the chatbot with the bot response.

+Note that when a user submits their message, we chain two events with `.then`:

-The reason we split these events is so that the user can see their message appear in the chatbot before the bot responds, which can take time to process.

+1. The first method `user()` updates the chatbot with the user message and clears the input field. Because we want this to happen instantly, we set `queue=False`, which would skip any queue if it had been enabled. The chatbot's history is appended with `(user_message, None)`, the `None` signifying that the bot has not responded.

+

+2. The second method, `bot()`, waits for the bot to respond and then updates the chatbot with the bot's response. Instead of creating a new message, we simply replace the `None` placeholder from the previous step with the bot's response. We add a `time.sleep` to simulate the bot's processing time.

+

+The reason we split these events is so that the user can see their message appear in the chatbot immediately, before the bot responds, which can take some time to process.

Note we pass the entire history of the chatbot to these functions and back to the component. To clear the chatbot, we pass it `None`.
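Stripped of the Gradio wiring, the two handlers are plain Python functions that transform the history. The sketch below follows the description above. It assumes the history arrives as a list of `[user_message, bot_message]` lists, and the `delay` parameter is an illustrative addition so the simulated wait can be turned off.

```python
import random
import time
from typing import List, Optional, Tuple

History = List[List[Optional[str]]]


def user(user_message: str, history: History) -> Tuple[str, History]:
    # Clear the textbox ("") and append the user's turn, with None as a
    # placeholder for the bot's reply.
    return "", history + [[user_message, None]]


def bot(history: History, delay: float = 1.0) -> History:
    # Simulate processing time, then fill in the placeholder on the last turn.
    time.sleep(delay)
    history[-1][1] = random.choice(["Yes", "No"])
    return history


if __name__ == "__main__":
    textbox, history = user("Hello", [])
    print(repr(textbox), history)  # '' [['Hello', None]]
    print(bot(history, delay=0))   # [['Hello', 'Yes']] or [['Hello', 'No']]
```

Because `bot()` assigns to `history[-1][1]`, each pair must be a mutable list. The same assignment on a tuple raises `TypeError`.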

-### Using a Model

+Of course, in practice, you would replace `bot()` with your own more complex function, which might call a pretrained model or an API, to generate a response.

-Chatbots are *stateful*, meaning we need to track how the user has previously interacted with the model. So, in this tutorial, we will also cover how to use **state** with Gradio demos.

-### Prerequisites

+## Adding Markdown, Images, Audio, or Videos

-Make sure you have the `gradio` Python package already [installed](/quickstart). To use a pretrained chatbot model, also install `transformers` and `torch`.

+The `gradio.Chatbot` component supports a subset of Markdown, including bold, italics, and code. For example, we could write a function that responds to a user's message with a bold **That's cool!**, like this:

-Let's get started! Here's how to build your own chatbot:

-

- [1. Set up the Chatbot Model](#1-set-up-the-chatbot-model)

- [2. Define a `predict` function](#2-define-a-predict-function)

- [3. Create a Gradio Demo using Blocks](#3-create-a-gradio-demo-using-blocks)

- [4. Chatbot Markdown Support](#4-chatbot-markdown-support)

-

-## 1. Set up the Chatbot Model

-

-First, you will need to have a chatbot model that you have either trained yourself or you will need to download a pretrained model. In this tutorial, we will use a pretrained chatbot model, `DialoGPT`, and its tokenizer from the [Hugging Face Hub](https://huggingface.co/microsoft/DialoGPT-medium), but you can replace this with your own model.

-

-Here is the code to load `DialoGPT` from Hugging Face `transformers`.

-

-```python

-from transformers import AutoModelForCausalLM, AutoTokenizer

-import torch

-

-tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")

-model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")

+```python

+def bot(history):

+ response = "**That's cool!**"

+ history[-1][1] = response

+ return history

```

-## 2. Define a `predict` function

-

-Next, you will need to define a function that takes in the *user input* as well as the previous *chat history* to generate a response.

-

-In the case of our pretrained model, it will look like this:

+In addition, it can handle media files, such as images, audio, and video. To pass in a media file, we must pass in the file as a tuple of two strings, like this: `(filepath, alt_text)`. The `alt_text` is optional, so you can also just pass in a tuple with a single element `(filepath,)`, like this:

```python