Mirror of https://github.com/gradio-app/gradio.git, synced 2025-03-31 12:20:26 +08:00

generate docs json in ci, reimplement main vs release (#5092)

* fixup site * fix docs versions * test ci * test ci some more * test ci some more * test ci some more * asd * asd * asd * asd * asd * asd * asd * asd * asd * test * fix * add changeset * fix * fix * fix * test ci * test ci * test ci * test ci * test ci * test ci * test ci * test ci * test ci * notebook ci * notebook ci * more ci * more ci * update changeset * update changeset * update changeset * fix site * fix * fix * fix * fix * fix ci * render mising pages * remove changeset * fix path * fix workflows * fix workflows * fix workflows * fix comment * tweaks * tweaks --------- Co-authored-by: gradio-pr-bot <gradio-pr-bot@users.noreply.github.com>

parent 3c00f0fbfb

commit 643442e1a5

5

.changeset/mean-dragons-move.md

Normal file

@@ -0,0 +1,5 @@

---

"website": minor

---


feat:generate docs json in ci, reimplement main vs release

@@ -9,11 +9,10 @@

**/.svelte-kit/**

**/demo/**

**/gradio/**

**/website/**

**/.pnpm-store/**

**/.venv/**

**/.github/**

**/guides/**

/guides/**

**/.mypy_cache/**

!test-strategy.md

**/js/_space-test/**

@@ -22,4 +21,5 @@

**/gradio_cached_examples/**

**/storybook-static/**

**/.vscode/**

sweep.yaml

sweep.yaml

**/.vercel/**

@@ -3,5 +3,5 @@

"singleQuote": false,

"trailingComma": "none",

"printWidth": 80,

"plugins": ["prettier-plugin-svelte", "prettier-plugin-css-order"]

"plugins": ["prettier-plugin-svelte"]

}

5

.github/actions/install-all-deps/action.yml

vendored

@@ -53,3 +53,8 @@ runs:

node_auth_token: ${{ inputs.node_auth_token }}

npm_token: ${{ inputs.npm_token }}

skip_build: ${{ inputs.skip_build }}

- name: generate json

shell: bash

run: |

. venv/bin/activate

python js/_website/generate_jsons/generate.py

@@ -40,7 +40,7 @@ runs:

- name: Install deps

if: steps.frontend-cache.outputs.cache-hit != 'true' || inputs.always-install-pnpm == 'true'

shell: bash

run: pnpm i --frozen-lockfile

run: pnpm i --frozen-lockfile --ignore-scripts

- name: Build Css

if: inputs.always-install-pnpm == 'true'

shell: bash

4

.github/workflows/backend.yml

vendored

@@ -90,7 +90,7 @@ jobs:

- name: Build frontend

if: steps.frontend-cache.outputs.cache-hit != 'true'

run: |

pnpm i --frozen-lockfile

pnpm i --frozen-lockfile --ignore-scripts

pnpm build

- name: Install Test Requirements (Linux)

if: runner.os == 'Linux'

@@ -180,7 +180,7 @@ jobs:

- name: Build frontend

if: steps.frontend-cache.outputs.cache-hit != 'true'

run: |

pnpm i --frozen-lockfile

pnpm i --frozen-lockfile --ignore-scripts

pnpm build

- name: Install Gradio and Client Libraries Locally (Linux)

if: runner.os == 'Linux'

24

.github/workflows/build-pr.yml

vendored

@@ -6,6 +6,13 @@ on:

- main


jobs:

comment-spaces-start:

uses: "./.github/workflows/comment-queue.yml"

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ github.event.pull_request.number }}

message: spaces~pending~null

build_pr:

runs-on: ubuntu-latest

steps:

@@ -26,14 +33,15 @@ jobs:

- name: Install pip

run: python -m pip install build requests

- name: Get PR Number

id: get_pr_number

run: |

python -c "import os;print(os.environ['GITHUB_REF'].split('/')[2])" > pr_number.txt

echo "PR_NUMBER=$(cat pr_number.txt)" >> $GITHUB_ENV

echo "GRADIO_VERSION=$(python -c 'import requests;print(requests.get("https://pypi.org/pypi/gradio/json").json()["info"]["version"])')" >> $GITHUB_ENV

echo "PR_NUMBER=$(cat pr_number.txt)" >> $GITHUB_OUTPUT

echo "GRADIO_VERSION=$(python -c 'import requests;print(requests.get("https://pypi.org/pypi/gradio/json").json()["info"]["version"])')" >> $GITHUB_OUTPUT

- name: Build pr package

run: |

echo ${{ env.GRADIO_VERSION }} > gradio/version.txt

pnpm i --frozen-lockfile

echo ${{ steps.get_pr_number.outputs.GRADIO_VERSION }} > gradio/version.txt

pnpm i --frozen-lockfile --ignore-scripts

pnpm build

python3 -m build -w

env:
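The Get PR Number step now writes `PR_NUMBER` and `GRADIO_VERSION` to `$GITHUB_OUTPUT`, so later steps read them as `steps.get_pr_number.outputs.*` instead of `env.*`. A minimal Python sketch of the two mechanics involved, assuming the usual `refs/pull/<number>/merge` value of `GITHUB_REF` on `pull_request` events; `pr_number_from_ref` and `append_step_outputs` are hypothetical helpers, not part of the gradio scripts:

```python
def pr_number_from_ref(ref: str) -> str:
    """Return the PR number from a pull request ref such as "refs/pull/5092/merge".

    Equivalent to ref.split('/')[2] in the workflow, but raises instead of
    silently returning an unrelated segment (e.g. "main" for "refs/heads/main").
    """
    parts = ref.split("/")
    if len(parts) < 4 or parts[0] != "refs" or parts[1] != "pull":
        raise ValueError(f"not a pull request ref: {ref!r}")
    return parts[2]


def append_step_outputs(output_file: str, **outputs: str) -> None:
    """Append name=value lines to the file named by $GITHUB_OUTPUT.

    Only single-line values are handled here; multiline values need the
    name<<DELIMITER ... DELIMITER form instead.
    """
    with open(output_file, "a", encoding="utf-8") as fh:
        for name, value in outputs.items():
            if "\n" in value:
                raise ValueError(f"multiline value for {name!r} needs a delimiter")
            fh.write(f"{name}={value}\n")
```

Inside a step this would be called as `append_step_outputs(os.environ["GITHUB_OUTPUT"], PR_NUMBER=pr_number_from_ref(os.environ["GITHUB_REF"]))`. Step outputs are only visible within the same job and are addressed through the step `id`, which is why the step gains `id: get_pr_number`.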

@@ -41,11 +49,11 @@ jobs:

- name: Upload wheel

uses: actions/upload-artifact@v3

with:

name: gradio-${{ env.GRADIO_VERSION }}-py3-none-any.whl

path: dist/gradio-${{ env.GRADIO_VERSION }}-py3-none-any.whl

name: gradio-${{ steps.get_pr_number.outputs.GRADIO_VERSION }}-py3-none-any.whl

path: dist/gradio-${{ steps.get_pr_number.outputs.GRADIO_VERSION }}-py3-none-any.whl

- name: Set up Demos

run: |

python scripts/copy_demos.py https://gradio-builds.s3.amazonaws.com/${{ github.sha }}/gradio-${{ env.GRADIO_VERSION }}-py3-none-any.whl \

python scripts/copy_demos.py https://gradio-builds.s3.amazonaws.com/${{ github.sha }}/gradio-${{ steps.get_pr_number.outputs.GRADIO_VERSION }}-py3-none-any.whl \

"gradio-client @ git+https://github.com/gradio-app/gradio@${{ github.sha }}#subdirectory=client/python"

- name: Upload all_demos

uses: actions/upload-artifact@v3

@@ -54,7 +62,7 @@ jobs:

path: demo/all_demos

- name: Create metadata artifact

run: |

python -c "import json; json.dump({'gh_sha': '${{ github.sha }}', 'pr_number': ${{ env.PR_NUMBER }}, 'version': '${{ env.GRADIO_VERSION }}', 'wheel': 'gradio-${{ env.GRADIO_VERSION }}-py3-none-any.whl'}, open('metadata.json', 'w'))"

python -c "import json; json.dump({'gh_sha': '${{ github.sha }}', 'pr_number': ${{ steps.get_pr_number.outputs.pr_number }}, 'version': '${{ steps.get_pr_number.outputs.GRADIO_VERSION }}', 'wheel': 'gradio-${{ steps.get_pr_number.outputs.GRADIO_VERSION }}-py3-none-any.whl'}, open('metadata.json', 'w'))"

- name: Upload metadata

uses: actions/upload-artifact@v3

with:
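`metadata.json` is how the PR build hands its results to the `workflow_run` workflows (`deploy-pr-to-spaces.yml`, `report-notebook-status-pr.yml`), which download the artifact and read single fields back with `python -c`. A minimal sketch of that round trip, assuming only the four keys written in the Create metadata artifact step; `write_metadata`, `read_metadata` and `wheel_url` are hypothetical helpers, and the URL layout is taken from the `pip install https://gradio-builds.s3.amazonaws.com/...` line used in the PR comment:

```python
import json
from typing import Any, Dict


def wheel_name(version: str) -> str:
    # Same naming pattern as the upload-artifact step.
    return f"gradio-{version}-py3-none-any.whl"


def write_metadata(path: str, gh_sha: str, pr_number: int, version: str) -> None:
    # pr_number is interpolated unquoted in the workflow, so it is a JSON number.
    metadata = {
        "gh_sha": gh_sha,
        "pr_number": pr_number,
        "version": version,
        "wheel": wheel_name(version),
    }
    # A context manager guarantees the file is flushed and closed before upload.
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(metadata, fh)


def read_metadata(path: str) -> Dict[str, Any]:
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def wheel_url(metadata: Dict[str, Any]) -> str:
    # Layout used by the "Install Gradio from this PR" instructions.
    return f"https://gradio-builds.s3.amazonaws.com/{metadata['gh_sha']}/{metadata['wheel']}"
```

Keeping the wheel name derived from `version` in one place mirrors the workflow, where the same `gradio-<version>-py3-none-any.whl` pattern is repeated in the upload, metadata and install steps.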

7

.github/workflows/check-demo-notebooks.yml

vendored

@@ -9,6 +9,13 @@ on:

- 'demo/**'


jobs:

comment-notebook-start:

uses: "./.github/workflows/comment-queue.yml"

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ github.event.pull_request.number }}

message: notebooks~pending~null

check-notebooks:

name: Generate Notebooks and Check

runs-on: ubuntu-latest

44

.github/workflows/comment-pr.yml

vendored

@@ -1,44 +0,0 @@

on:

workflow_run:

workflows: [Check Demos Match Notebooks]

types: [completed]


jobs:

comment-on-failure:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

- name: Install Python

uses: actions/setup-python@v4

with:

python-version: '3.9'

- name: Install pip

run: python -m pip install requests

- name: Download metadata

run: python scripts/download_artifacts.py ${{github.event.workflow_run.id }} metadata.json ${{ secrets.COMMENT_TOKEN }} --owner ${{ github.repository_owner }}

- run: unzip metadata.json.zip

- name: Pipe metadata to env

run: echo "pr_number=$(python -c 'import json; print(json.load(open("metadata.json"))["pr_number"])')" >> $GITHUB_ENV

- name: Comment On Notebook check fail

if: ${{ github.event.workflow_run.conclusion == 'failure' && github.event.workflow_run.name == 'Check Demos Match Notebooks'}}

uses: thollander/actions-comment-pull-request@v2

with:

message: |

The demo notebooks don't match the run.py files. Please run this command from the root of the repo and then commit the changes:

```bash

pip install nbformat && cd demo && python generate_notebooks.py

```

comment_includes: The demo notebooks don't match the run.py files

GITHUB_TOKEN: ${{ secrets.COMMENT_TOKEN }}

pr_number: ${{ env.pr_number }}

comment_tag: notebook-check

- name: Comment On Notebook check fail

if: ${{ github.event.workflow_run.conclusion == 'success' && github.event.workflow_run.name == 'Check Demos Match Notebooks'}}

uses: thollander/actions-comment-pull-request@v2

with:

message: |

🎉 The demo notebooks match the run.py files! 🎉

comment_includes: The demo notebooks match the run.py files!

GITHUB_TOKEN: ${{ secrets.COMMENT_TOKEN }}

pr_number: ${{ env.pr_number }}

comment_tag: notebook-check

39

.github/workflows/comment-queue.yml

vendored

Normal file

@@ -0,0 +1,39 @@

name: Comment on pull request without race conditions


on:

workflow_call:

inputs:

pr_number:

type: string

message:

required: true

type: string

tag:

required: false

type: string

default: "previews"

additional_text:

required: false

type: string

default: ""

secrets:

gh_token:

required: true


concurrency:

group: 1


jobs:

comment:

concurrency:

group: ${{inputs.pr_number || inputs.tag}}

runs-on: ubuntu-latest

steps:

- name: comment on pr

uses: "gradio-app/github/actions/comment-pr@main"

with:

gh_token: ${{ secrets.gh_token }}

tag: ${{ inputs.tag }}

pr_number: ${{ inputs.pr_number}}

message: ${{ inputs.message }}

additional_text: ${{ inputs.additional_text }}
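Every caller of `comment-queue.yml` in this commit passes `message` as one or more lines of `~`-separated fields: a section name, a status (`pending`, `success`, `warning`, `failure`), then section-specific fields, with `null` standing for "no value" (for example `spaces~pending~null` or `visual~success~<changes>~<errors>~<build_url>`). The real parsing happens in `gradio-app/github/actions/comment-pr`, which is not part of this diff; the sketch below only shows one way to read the format as inferred from these messages, and `StatusLine` and `parse_status_message` are hypothetical:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StatusLine:
    section: str                 # e.g. "spaces", "storybook", "visual", "website", "changes"
    status: str                  # e.g. "pending", "success", "warning", "failure"
    fields: List[Optional[str]]  # remaining fields; the literal "null" becomes None


def parse_status_message(message: str) -> List[StatusLine]:
    """Split a comment-queue message into one StatusLine per non-empty line."""
    parsed = []
    for raw in message.splitlines():
        line = raw.strip()
        if not line:
            continue
        parts = line.split("~")
        if len(parts) < 2:
            raise ValueError(f"expected at least section~status, got {line!r}")
        section, status, *rest = parts
        parsed.append(StatusLine(section, status, [None if f == "null" else f for f in rest]))
    return parsed
```

Splitting on every `~` assumes that no field, such as a URL, itself contains a tilde.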

65

.github/workflows/deploy-chromatic.yml

vendored

@@ -12,27 +12,33 @@ on:

jobs:

get-current-pr:

runs-on: ubuntu-latest

steps:

- uses: 8BitJonny/gh-get-current-pr@2.2.0

id: get-pr

outputs:

pr_found: ${{ steps.get-pr.outputs.pr_found }}

pr_number: ${{ steps.get-pr.outputs.number }}

pr_labels: ${{ steps.get-pr.outputs.pr_labels }}

steps:

- uses: 8BitJonny/gh-get-current-pr@2.2.0

id: get-pr

comment-chromatic-start:

uses: "./.github/workflows/comment-queue.yml"

needs: get-current-pr

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.get-current-pr.outputs.pr_number }}

message: |

storybook~pending~null

visual~pending~0~0~null

chromatic-deployment:

needs: get-current-pr

runs-on: ubuntu-latest

outputs:

changes: ${{ steps.publish-chromatic.outputs.changeCount }}

errors: ${{ steps.publish-chromatic.outputs.errorCount }}

storybook_url: ${{ steps.publish-chromatic.outputs.storybookUrl }}

build_url: ${{ steps.publish-chromatic.outputs.buildUrl }}

if: ${{ github.repository == 'gradio-app/gradio' && !contains(needs.get-current-pr.outputs.pr_labels, 'no-visual-update') }}

steps:

- name: post pending deployment comment to PR

if: ${{ needs.get-current-pr.outputs.pr_found }} == 'true'

uses: thollander/actions-comment-pull-request@v2

with:

message: |

Chromatic build pending :hourglass:

comment_tag: chromatic-build

GITHUB_TOKEN: ${{ secrets.COMMENT_TOKEN }}

pr_number: ${{ needs.get-current-pr.outputs.pr_number }}

- uses: actions/checkout@v3

with:

fetch-depth: 0

@@ -54,17 +60,24 @@ jobs:

projectToken: ${{ secrets.CHROMATIC_PROJECT_TOKEN }}

token: ${{ secrets.GITHUB_TOKEN }}

exitOnceUploaded: true

- name: post deployment link to PR

if: ${{ needs.get-current-pr.outputs.pr_found }} == 'true'

uses: thollander/actions-comment-pull-request@v2

with:

message: |

:tada: Chromatic build completed!


There are ${{ steps.publish-chromatic.outputs.changeCount }} visual changes to review.

There are ${{ steps.publish-chromatic.outputs.errorCount }} failed tests to fix.

* [Storybook Preview](${{ steps.publish-chromatic.outputs.storybookUrl }})

* [Build Review](${{ steps.publish-chromatic.outputs.buildUrl }})

GITHUB_TOKEN: ${{ secrets.COMMENT_TOKEN }}

comment_tag: chromatic-build

pr_number: ${{ needs.get-current-pr.outputs.pr_number }}

comment-chromatic-end:

uses: "./.github/workflows/comment-queue.yml"

needs: [chromatic-deployment, get-current-pr]

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.get-current-pr.outputs.pr_number }}

message: |

storybook~success~${{ needs.chromatic-deployment.outputs.storybook_url }}

visual~success~${{ needs.chromatic-deployment.outputs.changes }}~${{ needs.chromatic-deployment.outputs.errors }}~${{ needs.chromatic-deployment.outputs.build_url }}

comment-chromatic-fail:

uses: "./.github/workflows/comment-queue.yml"

needs: [chromatic-deployment, get-current-pr]

if: always() && needs.chromatic-deployment.result == 'failure'

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.get-current-pr.outputs.pr_number }}

message: |

storybook~failure~https://github.com/${{github.action_repository}}/actions/runs/${{github.run_id}}/

visual~failure~0~0~https://github.com/${{github.action_repository}}/actions/runs/${{github.run_id}}/

78

.github/workflows/deploy-pr-to-spaces.yml

vendored

@@ -8,6 +8,11 @@ on:


jobs:

deploy-current-pr:

outputs:

pr_number: ${{ steps.set-outputs.outputs.pr_number }}

space_url: ${{ steps.upload-demo.outputs.SPACE_URL }}

sha: ${{ steps.set-outputs.outputs.gh_sha }}

gradio_version: ${{ steps.set-outputs.outputs.gradio_version }}

runs-on: ubuntu-latest

if: >

github.event.workflow_run.event == 'pull_request' &&

@@ -23,21 +28,22 @@ jobs:

- name: Download metadata

run: python scripts/download_artifacts.py ${{github.event.workflow_run.id }} metadata.json ${{ secrets.COMMENT_TOKEN }} --owner ${{ github.repository_owner }}

- run: unzip metadata.json.zip

- name: Pipe metadata to env

- name: set outputs

id: set-outputs

run: |

echo "wheel_name=$(python -c 'import json; print(json.load(open("metadata.json"))["wheel"])')" >> $GITHUB_ENV

echo "gh_sha=$(python -c 'import json; print(json.load(open("metadata.json"))["gh_sha"])')" >> $GITHUB_ENV

echo "gradio_version=$(python -c 'import json; print(json.load(open("metadata.json"))["version"])')" >> $GITHUB_ENV

echo "pr_number=$(python -c 'import json; print(json.load(open("metadata.json"))["pr_number"])')" >> $GITHUB_ENV

echo "wheel_name=$(python -c 'import json; print(json.load(open("metadata.json"))["wheel"])')" >> $GITHUB_OUTPUT

echo "gh_sha=$(python -c 'import json; print(json.load(open("metadata.json"))["gh_sha"])')" >> $GITHUB_OUTPUT

echo "gradio_version=$(python -c 'import json; print(json.load(open("metadata.json"))["version"])')" >> $GITHUB_OUTPUT

echo "pr_number=$(python -c 'import json; print(json.load(open("metadata.json"))["pr_number"])')" >> $GITHUB_OUTPUT

- name: 'Download wheel'

run: python scripts/download_artifacts.py ${{ github.event.workflow_run.id }} ${{ env.wheel_name }} ${{ secrets.COMMENT_TOKEN }} --owner ${{ github.repository_owner }}

- run: unzip ${{ env.wheel_name }}.zip

run: python scripts/download_artifacts.py ${{ github.event.workflow_run.id }} ${{ steps.set-outputs.outputs.wheel_name }} ${{ secrets.COMMENT_TOKEN }} --owner ${{ github.repository_owner }}

- run: unzip ${{ steps.set-outputs.outputs.wheel_name }}.zip

- name: Upload wheel

run: |

export AWS_ACCESS_KEY_ID=${{ secrets.PR_DEPLOY_KEY }}

export AWS_SECRET_ACCESS_KEY=${{ secrets.PR_DEPLOY_SECRET }}

export AWS_DEFAULT_REGION=us-east-1

aws s3 cp ${{ env.wheel_name }} s3://gradio-builds/${{ env.gh_sha }}/

aws s3 cp ${{ steps.set-outputs.outputs.wheel_name }} s3://gradio-builds/${{ steps.set-outputs.outputs.gh_sha }}/

- name: Install Hub Client Library

run: pip install huggingface-hub

- name: 'Download all_demos'

@@ -45,29 +51,41 @@ jobs:

- run: unzip all_demos.zip -d all_demos

- run: cp -R all_demos/* demo/all_demos

- name: Upload demo to spaces

id: upload-demo

run: |

python scripts/upload_demo_to_space.py all_demos \

gradio-pr-deploys/pr-${{ env.pr_number }}-all-demos \

gradio-pr-deploys/pr-${{ steps.set-outputs.outputs.pr_number }}-all-demos \

${{ secrets.SPACES_DEPLOY_TOKEN }} \

--gradio-version ${{ env.gradio_version }} > url.txt

echo "SPACE_URL=$(cat url.txt)" >> $GITHUB_ENV

- name: Comment On Release PR

uses: thollander/actions-comment-pull-request@v2

with:

message: |

All the demos for this PR have been deployed at ${{ env.SPACE_URL }}

--gradio-version ${{ steps.set-outputs.outputs.gradio_version }} > url.txt

echo "SPACE_URL=$(cat url.txt)" >> $GITHUB_OUTPUT

comment-spaces-success:

uses: "./.github/workflows/comment-queue.yml"

needs: [deploy-current-pr]

if: >

github.event.workflow_run.event == 'pull_request' &&

github.event.workflow_run.conclusion == 'success' &&

needs.deploy-current-pr.result == 'success'

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.deploy-current-pr.outputs.pr_number }}

message: spaces~success~${{ needs.deploy-current-pr.outputs.space_url }}

additional_text: |

**Install Gradio from this PR**

```bash

pip install https://gradio-builds.s3.amazonaws.com/${{ needs.deploy-current-pr.outputs.sha }}/gradio-${{ needs.deploy-current-pr.outputs.gradio_version }}-py3-none-any.whl

```


---

### Install Gradio from this PR:

```bash

pip install https://gradio-builds.s3.amazonaws.com/${{ env.gh_sha }}/gradio-${{ env.gradio_version }}-py3-none-any.whl

```


---

### Install Gradio Python Client from this PR

```bash

pip install "gradio-client @ git+https://github.com/gradio-app/gradio@${{ github.sha }}#subdirectory=client/python"

```

comment_tag: All the demos for this PR have been deployed at

GITHUB_TOKEN: ${{ secrets.COMMENT_TOKEN }}

pr_number: ${{ env.pr_number }}

**Install Gradio Python Client from this PR**

```bash

pip install "gradio-client @ git+https://github.com/gradio-app/gradio@${{ needs.deploy-current-pr.outputs.sha }}#subdirectory=client/python"

```

comment-spaces-failure:

uses: "./.github/workflows/comment-queue.yml"

needs: [deploy-current-pr]

if: always() && needs.deploy-current-pr.result == 'failure'

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.deploy-current-pr.outputs.pr_number }}

message: spaces~failure~https://github.com/${{github.action_repository}}/actions/runs/${{github.run_id}}/

109

.github/workflows/deploy-website.yml

vendored

Normal file

@@ -0,0 +1,109 @@

name: "deploy website"


on:

workflow_call:

inputs:

branch_name:

description: "The branch name"

type: string

pr_number:

description: "The PR number"

type: string

secrets:

vercel_token:

description: "Vercel API token"

gh_token:

description: "Github token"

required: true

vercel_org_id:

description: "Vercel organization ID"

required: true

vercel_project_id:

description: "Vercel project ID"

required: true


env:

VERCEL_ORG_ID: ${{ secrets.vercel_org_id }}

VERCEL_PROJECT_ID: ${{ secrets.vercel_project_id }}


jobs:

comment-deploy-start:

uses: "./.github/workflows/comment-queue.yml"

secrets:

gh_token: ${{ secrets.github_token }}

with:

pr_number: ${{ inputs.pr_number }}

message: website~pending~null

deploy:

name: "Deploy website"

runs-on: ubuntu-latest

outputs:

vercel_url: ${{ steps.output_url.outputs.vercel_url }}

steps:

- uses: actions/checkout@v3

- name: install dependencies

uses: "./.github/actions/install-frontend-deps"

with:

always-install-pnpm: true

skip_build: true

- name: download artifacts

uses: actions/download-artifact@v2

with:

name: website-json-${{ inputs.pr_number }}}

path: |

./js/_website/src/lib/json

- name: echo artifact path

shell: bash

run: ls ./js/_website/src/lib/json

- name: Install Vercel CLI

shell: bash

run: pnpm install --global vercel@latest

# preview

- name: Pull Vercel Environment Information

shell: bash

if: github.event_name == 'pull_request'

run: vercel pull --yes --environment=preview --token=${{ secrets.vercel_token }}

- name: Build Project Artifacts

if: github.event_name == 'pull_request'

shell: bash

run: vercel build --token=${{ secrets.vercel_token }}

- name: Deploy Project Artifacts to Vercel

if: github.event_name == 'pull_request'

id: output_url

shell: bash

run: echo "vercel_url=$(vercel deploy --prebuilt --token=${{ secrets.vercel_token }})" >> $GITHUB_OUTPUT

# production

- name: Pull Vercel Environment Information

if: github.event_name == 'push' && inputs.branch_name == 'main'

shell: bash

run: vercel pull --yes --environment=production --token=${{ secrets.vercel_token }}

- name: Build Project Artifacts

if: github.event_name == 'push' && inputs.branch_name == 'main'

shell: bash

run: vercel build --prod --token=${{ secrets.vercel_token }}

- name: Deploy Project Artifacts to Vercel

if: github.event_name == 'push' && inputs.branch_name == 'main'

shell: bash

run: echo "deploying production"

# run: echo "VERCEL_URL=$(vercel deploy --prebuilt --prod --token=${{ inputs.vercel_token }})" >> $GITHUB_ENV

- name: echo vercel url

shell: bash

run: echo $VERCEL_URL #add to comment

comment-deploy-success:

uses: "./.github/workflows/comment-queue.yml"

needs: deploy

if: needs.deploy.result == 'success'

secrets:

gh_token: ${{ secrets.gh_token }}

with:

pr_number: ${{ inputs.pr_number }}

message: website~success~${{needs.deploy.outputs.vercel_url}}

comment-deploy-failure:

uses: "./.github/workflows/comment-queue.yml"

needs: deploy

if: always() && needs.deploy.result == 'failure'

secrets:

gh_token: ${{ secrets.gh_token }}

with:

pr_number: ${{ inputs.pr_number }}

message: website~failure~https://github.com/${{github.action_repository}}/actions/runs/${{github.run_id}}/
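In `deploy-website.yml` the preview/production split is expressed entirely through step-level `if:` conditions: a `pull_request` event runs the preview `vercel pull`/`build`/`deploy` steps, while a `push` with `branch_name == 'main'` runs the production steps (whose deploy command is still stubbed out as `echo "deploying production"` in this commit). A small sketch of that decision table as a plain function, with a hypothetical name, makes it explicit that any other combination deploys nothing:

```python
from typing import Optional


def vercel_environment(event_name: str, branch_name: Optional[str]) -> Optional[str]:
    """Mirror the step-level `if:` conditions in deploy-website.yml.

    Returns "preview" or "production", or None when no deployment step would run.
    """
    if event_name == "pull_request":
        return "preview"
    if event_name == "push" and branch_name == "main":
        return "production"
    return None
```

Because the conditions sit on individual steps rather than on separate jobs, the `deploy` job still succeeds when every deployment step is skipped, so `comment-deploy-success` can run in that case too.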

67

.github/workflows/generate-changeset.yml

vendored

@@ -13,27 +13,74 @@ concurrency:

group: ${{ github.event.workflow_run.head_repository.full_name }}::${{ github.event.workflow_run.head_branch }}


jobs:

get-pr:

runs-on: ubuntu-latest

outputs:

found_pr: ${{ steps.pr_details.outputs.found_pr }}

pr_number: ${{ steps.pr_details.outputs.pr_number }}

source_repo: ${{ steps.pr_details.outputs.source_repo }}

source_branch: ${{ steps.pr_details.outputs.source_branch }}

steps:

- name: get pr details

id: pr_details

uses: gradio-app/github/actions/find-pr@main

with:

github_token: ${{ secrets.GITHUB_TOKEN }}

comment-chromatic-start:

uses: "./.github/workflows/comment-queue.yml"

needs: get-pr

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.get-pr.outputs.pr_number }}

message: changes~pending~null

version:

permissions: write-all

name: static checks

needs: get-pr

runs-on: ubuntu-22.04

if: (github.event.workflow_run.head_repository.full_name == 'gradio-app/gradio' && github.event.workflow_run.head_branch != 'main') || github.event.workflow_run.head_repository.full_name != 'gradio-app/gradio'

if: needs.get-pr.outputs.found_pr == 'true'

outputs:

skipped: ${{ steps.version.outputs.skipper }}

comment_url: ${{ steps.version.outputs.comment_url }}

steps:

- id: 'get-pr'

uses: "gradio-app/github/actions/get-pr-branch@main"

with:

github_token: ${{ secrets.GITHUB_TOKEN }}

- name: get pr number

run: echo "PR number is ${{ steps.get-pr.outputs.pr_number }}"

- uses: actions/checkout@v3

with:

repository: ${{ github.event.workflow_run.head_repository.full_name }}

ref: ${{ github.event.workflow_run.head_branch }}

repository: ${{ needs.get-pr.outputs.source_repo }}

ref: ${{ needs.get-pr.outputs.source_branch }}

fetch-depth: 0

token: ${{ secrets.COMMENT_TOKEN }}

- name: generate changeset

id: version

uses: "gradio-app/github/actions/generate-changeset@main"

with:

github_token: ${{ secrets.COMMENT_TOKEN }}

main_pkg: gradio

pr_number: ${{ steps.get-pr.outputs.pr_number }}

pr_number: ${{ needs.get-pr.outputs.pr_number }}

comment-changes-skipper:

uses: "./.github/workflows/comment-queue.yml"

needs: [get-pr, version]

if: needs.version.result == 'success' && needs.version.outputs.skipped == 'true'

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.get-pr.outputs.pr_number }}

message: changes~warning~https://github.com/${{github.action_repository}}/actions/runs/${{github.run_id}}/

comment-changes-success:

uses: "./.github/workflows/comment-queue.yml"

needs: [get-pr, version]

if: needs.version.result == 'success' && needs.version.outputs.skipped == 'false'

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.get-pr.outputs.pr_number }}

message: changes~success~${{ needs.version.outputs.comment_url }}

comment-changes-failure:

uses: "./.github/workflows/comment-queue.yml"

needs: [get-pr, version]

if: always() && needs.version.result == 'failure'

secrets:

gh_token: ${{ secrets.COMMENT_TOKEN }}

with:

pr_number: ${{ needs.get-pr.outputs.pr_number }}

message: changes~failure~https://github.com/${{github.action_repository}}/actions/runs/${{github.run_id}}/
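The three `comment-changes-*` jobs are mutually exclusive: which one runs depends on the `version` job's result and on its `skipped` output (mapped from the action's `skipper` output). A sketch of the same selection as one function; the function and its parameters are hypothetical, and `run_url` stands for the `https://github.com/.../actions/runs/<run_id>/` link the workflow builds:

```python
from typing import Optional


def changes_message(result: str, skipped: str, comment_url: str, run_url: str) -> Optional[str]:
    """Return the comment-queue message the workflow would post, or None if no job runs.

    `result` is needs.version.result; `skipped` is the string output "true" or "false".
    """
    if result == "failure":
        return f"changes~failure~{run_url}"
    if result == "success" and skipped == "true":
        return f"changes~warning~{run_url}"
    if result == "success" and skipped == "false":
        return f"changes~success~{comment_url}"
    # e.g. "skipped" (no PR found) or "cancelled": none of the three if: conditions match.
    return None
```

Only `comment-changes-failure` uses `always()`; the two success-path jobs keep the implicit `success()` check, so they never run after a failed `version` job.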

|

||||

47

.github/workflows/report-notebook-status-pr.yml

vendored

Normal file

47

.github/workflows/report-notebook-status-pr.yml

vendored

Normal file

@ -0,0 +1,47 @@

|

||||

on:

|

||||

workflow_run:

|

||||

workflows: [Check Demos Match Notebooks]

|

||||

types: [completed]

jobs:
get-pr-number:
runs-on: ubuntu-latest
outputs:
pr_number: ${{ steps.pr_number.outputs.pr_number }}
steps:
- uses: actions/checkout@v3
- name: Install Python
uses: actions/setup-python@v4
with:
python-version: '3.9'
- name: Install pip
run: python -m pip install requests
- name: Download metadata
run: python scripts/download_artifacts.py ${{github.event.workflow_run.id }} metadata.json ${{ secrets.COMMENT_TOKEN }} --owner ${{ github.repository_owner }}
- run: unzip metadata.json.zip
- name: Pipe metadata to env
id: pr_number
run: echo "pr_number=$(python -c 'import json; print(json.load(open("metadata.json"))["pr_number"])')" >> $GITHUB_OUTPUT
comment-success:
uses: "./.github/workflows/comment-queue.yml"
if: ${{ github.event.workflow_run.conclusion == 'success' && github.event.workflow_run.name == 'Check Demos Match Notebooks'}}
needs: get-pr-number
secrets:
gh_token: ${{ secrets.GITHUB_TOKEN }}
with:
pr_number: ${{ needs.get-pr-number.outputs.pr_number }}
message: notebooks~success~null
comment-failure:
uses: "./.github/workflows/comment-queue.yml"
if: ${{ github.event.workflow_run.conclusion == 'failure' && github.event.workflow_run.name == 'Check Demos Match Notebooks'}}
needs: get-pr-number
secrets:
gh_token: ${{ secrets.GITHUB_TOKEN }}
with:
pr_number: ${{ needs.get-pr-number.outputs.pr_number }}
message: notebooks~failure~https://github.com/${{github.action_repository}}/actions/runs/${{github.run_id}}/
additional_text: |
The demo notebooks don't match the run.py files. Please run this command from the root of the repo and then commit the changes:
```bash
pip install nbformat && cd demo && python generate_notebooks.py
```
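The `Pipe metadata to env` step reads `pr_number` from `metadata.json` with an inline `python -c` one-liner and appends a `pr_number=<value>` line to the file named by `$GITHUB_OUTPUT`; that is what makes `steps.pr_number.outputs.pr_number` available to the job's `outputs`. A minimal standalone sketch of the same logic follows, assuming (as the one-liner implies) that `metadata.json` has a top-level `pr_number` key. The function name `write_pr_number_output` is hypothetical and not part of the repository.

```python
import json
import os
import tempfile


def write_pr_number_output(metadata_path: str, output_path: str) -> str:
    """Append `pr_number=<value>` from metadata_path to output_path; return the value."""
    # Same lookup as the workflow one-liner: json.load(open("metadata.json"))["pr_number"]
    with open(metadata_path) as f:
        pr_number = str(json.load(f)["pr_number"])
    # Actions reads each single-line `name=value` entry in the $GITHUB_OUTPUT file as a step output.
    with open(output_path, "a") as out:
        out.write(f"pr_number={pr_number}\n")
    return pr_number


if __name__ == "__main__":
    # Local dry run with a fake metadata.json and a fake $GITHUB_OUTPUT file.
    with tempfile.TemporaryDirectory() as tmp:
        metadata = os.path.join(tmp, "metadata.json")
        github_output = os.path.join(tmp, "github_output")
        with open(metadata, "w") as f:
            json.dump({"pr_number": 1234}, f)
        write_pr_number_output(metadata, github_output)
        with open(github_output) as f:
            print(f.read().strip())
```

Inside a workflow step, `output_path` would be `os.environ["GITHUB_OUTPUT"]`. Opening the file in append mode matches the shell's `>>`, so outputs written earlier in the same step are preserved.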

38

.github/workflows/ui.yml

vendored


@ -10,7 +10,8 @@ env:
CI: true
PLAYWRIGHT_SKIP_BROWSER_DOWNLOAD: "1"
NODE_OPTIONS: "--max-old-space-size=4096"

VERCEL_ORG_ID: ${{ secrets.VERCEL_ORG_ID }}
VERCEL_PROJECT_ID: ${{ secrets.VERCEL_PROJECT_ID }}
concurrency:
group: deploy-${{ github.ref }}-${{ github.event_name == 'push' || github.event.inputs.fire != null }}
cancel-in-progress: true

@ -37,12 +38,29 @@ jobs:
run: pnpm test:run
functional-test:
runs-on: ubuntu-latest
outputs:
source_branch: ${{ steps.pr_details.outputs.source_branch }}
pr_number: ${{ steps.pr_details.outputs.pr_number }}
steps:
- uses: actions/checkout@v3
- name: install dependencies
id: install_deps
uses: "./.github/actions/install-all-deps"
with:
always-install-pnpm: true
- name: get pr details
id: pr_details
uses: gradio-app/github/actions/find-pr@main
with:
github_token: ${{ secrets.GITHUB_TOKEN }}
- name: deploy json to aws
if: steps.pr_details.outputs.source_branch == 'changeset-release/main'
run: |
export AWS_ACCESS_KEY_ID=${{ secrets.AWSACCESSKEYID }}
export AWS_SECRET_ACCESS_KEY=${{ secrets.AWSSECRETKEY }}
export AWS_DEFAULT_REGION=us-west-2
version=$(sed -nr 's/{ "version": "([0-9\.]+)" }/\1/p' ./js/_website/src/lib/json/version.json)
aws s3 cp ./js/_website/src/lib/json/ s3://gradio-docs-json/$version/ --recursive
- name: install outbreak_forecast dependencies
run: |
. venv/bin/activate

@ -63,3 +81,21 @@ jobs:
run: |
. venv/bin/activate
pnpm run test:ct
- name: save artifacts
uses: actions/upload-artifact@v2
with:

name: website-json-${{ steps.pr_details.outputs.pr_number }}

path: |
./js/_website/src/lib/json
deploy_to_vercel:
uses: "./.github/workflows/deploy-website.yml"
needs: functional-test
if: always()
secrets:
gh_token: ${{ secrets.COMMENT_TOKEN }}
vercel_token: ${{ secrets.VERCEL_TOKEN }}
vercel_org_id: ${{ secrets.VERCEL_ORG_ID }}
vercel_project_id: ${{ secrets.VERCEL_PROJECT_ID }}
with:
branch_name: ${{ needs.functional-test.outputs.source_branch }}
pr_number: ${{ needs.functional-test.outputs.pr_number }}
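The `deploy json to aws` step extracts the version with `sed`, and that pattern only matches when `version.json` is laid out exactly as `{ "version": "X.Y.Z" }` on one line. The sketch below is an illustration, not code from the repository (the helper names and the `3.40.0` value are hypothetical); it contrasts the `sed` pattern with reading the same field through a JSON parser.

```python
import json
import re
from typing import Optional

# The workflow's sed pattern, `{ "version": "([0-9\.]+)" }`, written as a Python regex.
SED_PATTERN = re.compile(r'\{ "version": "([0-9.]+)" \}')


def version_via_regex(text: str) -> Optional[str]:
    """Roughly what the sed command extracts; None if the exact layout is not found."""
    match = SED_PATTERN.search(text)
    return match.group(1) if match else None


def version_via_json(text: str) -> str:
    """Read the same field by parsing the file as JSON, independent of spacing."""
    return json.loads(text)["version"]


def s3_destination(version: str) -> str:
    # Mirrors the target of `aws s3 cp ... s3://gradio-docs-json/$version/ --recursive`.
    return f"s3://gradio-docs-json/{version}/"


compact = '{"version": "3.40.0"}'  # hypothetical version.json contents without inner spaces
print(version_via_regex(compact), version_via_json(compact))  # None 3.40.0
```

If the file's formatting ever drifted, `sed -n` would print nothing, `$version` would be empty, and the copy target would collapse to `s3://gradio-docs-json//`; parsing the JSON avoids that failure mode.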

@ -207,7 +207,7 @@ export function api_factory(fetch_implementation: typeof fetch): Client {
const chunkSize = 1000;
const uploadResponses = [];
for (let i = 0; i < files.length; i += chunkSize) {
const chunk = files.slice(i, i + chunkSize);
const formData = new FormData();
chunk.forEach((file) => {
formData.append("files", file);
@ -222,7 +222,7 @@ export function api_factory(fetch_implementation: typeof fetch): Client {
return { error: BROKEN_CONNECTION_MSG };
}
const output: UploadResponse["files"] = await response.json();
uploadResponses.push(...output);
}
return { files: uploadResponses };
}
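The upload loop above splits `files` into slices of `chunkSize` (1000), posts each slice as its own `FormData` request, bails out with an error object if a request fails, and otherwise concatenates every response into one `files` list. The same control flow is sketched here in Python, with a hypothetical `send` callback standing in for the HTTP request so it can run without a server.

```python
from typing import Callable, Dict, List, Optional, Sequence

# Placeholder text; the real BROKEN_CONNECTION_MSG value is not shown in this diff.
BROKEN_CONNECTION_MSG = "<broken connection placeholder>"


def upload_in_chunks(
    files: Sequence[str],
    send: Callable[[Sequence[str]], Optional[List[str]]],
    chunk_size: int = 1000,
) -> Dict[str, object]:
    """Send files in slices of at most chunk_size and concatenate the per-slice results.

    `send` is a hypothetical stand-in for one POST of a FormData batch;
    returning None models a failed request.
    """
    upload_responses: List[str] = []
    for i in range(0, len(files), chunk_size):
        chunk = files[i : i + chunk_size]  # like files.slice(i, i + chunkSize)
        output = send(chunk)
        if output is None:
            # Like the early `return { error: BROKEN_CONNECTION_MSG }`.
            return {"error": BROKEN_CONNECTION_MSG}
        upload_responses.extend(output)  # like uploadResponses.push(...output)
    return {"files": upload_responses}
```

Because results are appended in slice order, the returned list lines up with the input order, and a failure on any slice discards the partial results just as the early `return` does in the TypeScript.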

1

client/js/src/globals.d.ts

vendored


@ -3,6 +3,7 @@ declare global {
__gradio_mode__: "app" | "website";
gradio_config: Config;
__is_colab__: boolean;
__gradio_space__: string | null;
}
}

@ -1,31 +0,0 @@
"""Writes the config file for any of the demos to an output file

Usage: python write_config.py <demo_name> <output_file>
Example: python write_config.py calculator output.json

Assumes:
- The demo_name is a folder in this directory
- The demo_name folder contains a run.py file
- The run.py file defines a Gradio Interface/Blocks instance called `demo`
"""

from __future__ import annotations

import argparse
import importlib
import json

import gradio as gr

parser = argparse.ArgumentParser()
parser.add_argument("demo_name", help="the name of the demo whose config to write")
parser.add_argument("file_path", help="the path at which to write the config file")
args = parser.parse_args()

# import the run.py file from inside the directory specified by args.demo_name
run = importlib.import_module(f"{args.demo_name}.run")

demo: gr.Blocks = run.demo
config = demo.get_config_file()

json.dump(config, open(args.file_path, "w"), indent=2)

1

globals.d.ts

vendored


@ -1,6 +1,7 @@
declare global {
interface Window {
__gradio_mode__: "app" | "website";
__gradio_space__: string | null;
launchGradio: Function;
launchGradioFromSpaces: Function;
gradio_config: Config;

@ -4,7 +4,7 @@

## What Does Gradio Do?

One of the *best ways to share* your machine learning model, API, or data science workflow with others is to create an **interactive app** that allows your users or colleagues to try out the demo in their browsers.

One of the _best ways to share_ your machine learning model, API, or data science workflow with others is to create an **interactive app** that allows your users or colleagues to try out the demo in their browsers.

Gradio allows you to **build demos and share them, all in Python.** And usually in just a few lines of code! So let's get started.

@ -89,8 +89,8 @@ You can read more about the many components and how to use them in the [Gradio d

Gradio includes a high-level class, `gr.ChatInterface`, which is similar to `gr.Interface`, but is specifically designed for chatbot UIs. The `gr.ChatInterface` class also wraps a function, but this function must have a specific signature. The function should take two arguments: `message` and then `history` (the arguments can be named anything, but must be in this order).

* `message`: a `str` representing the user's input
* `history`: a `list` of `list` representing the conversation up until that point. Each inner list consists of two `str` representing a pair: `[user input, bot response]`.
- `message`: a `str` representing the user's input
- `history`: a `list` of `list` representing the conversation up until that point. Each inner list consists of two `str` representing a pair: `[user input, bot response]`.

Your function should return a single string response, which is the bot's response to the particular user input `message`.
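Below is a toy function with the required `(message, history)` signature, written against the list-of-pairs `history` format described above. It is only a sketch (the name `respond` and its replies are made up) and runs without Gradio; the loop at the end simulates how the pairs accumulate across turns.

```python
from typing import List


def respond(message: str, history: List[List[str]]) -> str:
    """A toy chat function with the (message, history) signature.

    `history` holds one [user input, bot response] pair per earlier turn.
    """
    turn = len(history) + 1
    if not history:
        return f"Turn {turn}: you said {message!r}."
    last_user_input = history[-1][0]  # first element of the most recent pair
    return f"Turn {turn}: you said {message!r}; before that, {last_user_input!r}."


history: List[List[str]] = []
for user_input in ["hello", "how are you?"]:
    reply = respond(user_input, history)
    history.append([user_input, reply])  # the pair a chat UI would record
    print(reply)
```

To serve it as a chatbot UI, the function would be passed to `gr.ChatInterface(respond)` and launched with `.launch()`.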

@ -16,7 +16,7 @@ Let's go through some of the most popular features of Gradio! Here are Gradio's

## Example Inputs

You can provide example data that a user can easily load into `Interface`. This can be helpful to demonstrate the types of inputs the model expects, as well as to provide a way to explore your dataset in conjunction with your model. To load example data, you can provide a **nested list** to the `examples=` keyword argument of the Interface constructor. Each sublist within the outer list represents a data sample, and each element within the sublist represents an input for each input component. The format of example data for each component is specified in the [Docs](https://gradio.app/docs#components).

$code_calculator
$demo_calculator
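Consider a three-input calculator, assumed here to take two numbers and an operation name (the demo's source is only referenced by the `$code_calculator` placeholder). The nested list passed to `examples=` has one inner list per sample and one value per input component, in order. The `check_examples` helper is hypothetical and is included only to make the shape requirement explicit.

```python
from typing import Any, List

# Hypothetical input layout for a calculator-style Interface: number, operation, number.
INPUT_NAMES = ["num1", "operation", "num2"]

# Outer list: one entry per sample. Inner list: one value per input component, in order.
examples: List[List[Any]] = [
    [5, "add", 3],
    [4, "divide", 2],
    [-4, "multiply", 2.5],
]


def check_examples(rows: List[List[Any]], n_inputs: int) -> None:
    """Hypothetical helper: raise ValueError if a sample does not supply one value per input."""
    for i, row in enumerate(rows):
        if len(row) != n_inputs:
            raise ValueError(f"example {i} has {len(row)} values, expected {n_inputs}")


check_examples(examples, len(INPUT_NAMES))
```

The validated list would then be passed unchanged as `gr.Interface(..., examples=examples)`.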

@ -27,9 +27,9 @@ Continue learning about examples in the [More On Examples](https://gradio.app/mo

## Alerts

You may wish to pass custom error messages to the user. To do so, raise a `gr.Error("custom message")` to display an error message. If you try to divide by zero in the calculator demo above, a popup modal will display the custom error message. Learn more about Error in the [docs](https://gradio.app/docs#error).

You can also issue `gr.Warning("message")` and `gr.Info("message")` by having them as standalone lines in your function, which will immediately display modals while continuing the execution of your function. Queueing needs to be enabled for this to work.

Note below how the `gr.Error` has to be raised, while the `gr.Warning` and `gr.Info` are single lines.

@ -42,16 +42,16 @@ def start_process(name):
if success == False:
raise gr.Error("Process failed")
```

## Descriptive Content

In the previous example, you may have noticed the `title=` and `description=` keyword arguments in the `Interface` constructor that help users understand your app.

There are three arguments in the `Interface` constructor to specify where this content should go:

* `title`: which accepts text and can display it at the very top of the interface, and also becomes the page title.
* `description`: which accepts text, markdown or HTML and places it right under the title.
* `article`: which also accepts text, markdown or HTML and places it below the interface.
- `title`: which accepts text and can display it at the very top of the interface, and also becomes the page title.
- `description`: which accepts text, markdown or HTML and places it right under the title.
- `article`: which also accepts text, markdown or HTML and places it below the interface.

@ -65,7 +65,7 @@ gr.Number(label='Age', info='In years, must be greater than 0')

## Flagging

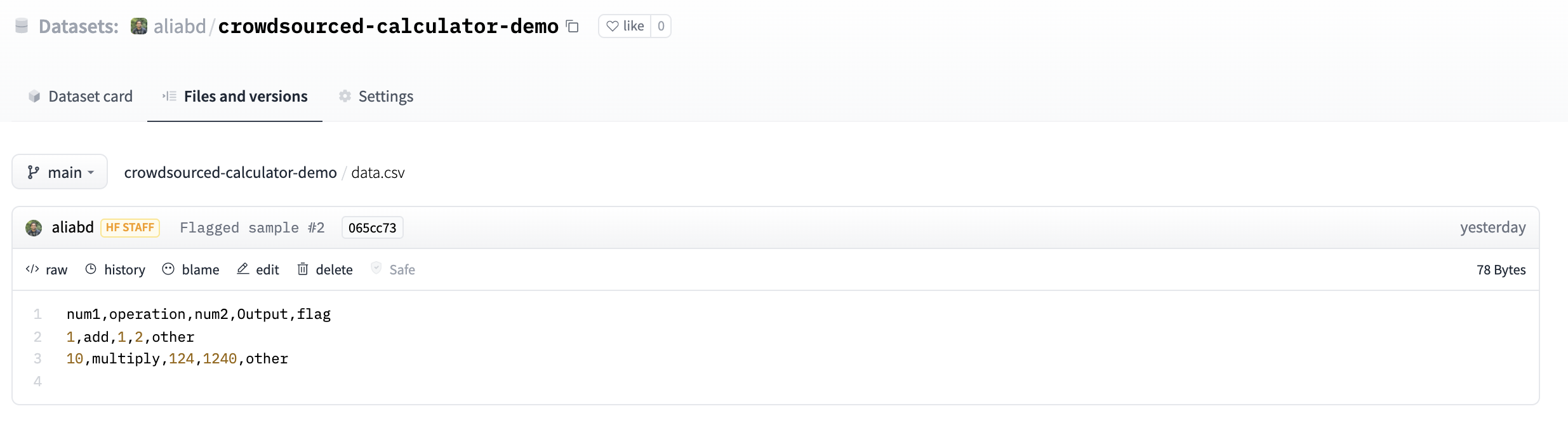

By default, an `Interface` will have a "Flag" button. When a user testing your `Interface` sees input with interesting output, such as erroneous or unexpected model behaviour, they can flag the input for you to review. Within the directory provided by the `flagging_dir=` argument to the `Interface` constructor, a CSV file will log the flagged inputs. If the interface involves file data, such as for Image and Audio components, folders will be created to store those flagged data as well.

For example, with the calculator interface shown above, we would have the flagged data stored in the flagged directory shown below:

@ -75,7 +75,7 @@ For example, with the calculator interface shown above, we would have the flagge
| +-- logs.csv
```

*flagged/logs.csv*

_flagged/logs.csv_

```csv
num1,operation,num2,Output

@ -97,7 +97,7 @@ With the sepia interface shown earlier, we would have the flagged data stored in
| | +-- 1.png
```
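Because the flagged `logs.csv` files are ordinary CSV with a header row, they can be reviewed with Python's standard `csv` module. A minimal sketch follows; the header matches the calculator example above, while the data row is invented for illustration.

```python
import csv
import io
from typing import Dict, List, TextIO

# Stand-in for flagged/logs.csv: the header matches the calculator example,
# the data row is made up for illustration.
SAMPLE_LOG = "num1,operation,num2,Output\n5,add,7,12\n"


def load_flags(f: TextIO) -> List[Dict[str, str]]:
    """Return one dict per flagged sample, keyed by the CSV header row."""
    return list(csv.DictReader(f))


for row in load_flags(io.StringIO(SAMPLE_LOG)):
    print(row["num1"], row["operation"], row["num2"], "->", row["Output"])
```

For a real run, open the file with `open("flagged/logs.csv", newline="")` and pass the handle to `load_flags`. Because `csv.DictReader` keys each value by the header, the code does not depend on column order.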

*flagged/logs.csv*

_flagged/logs.csv_

```csv
im,Output

@ -113,11 +113,11 @@ If you wish for the user to provide a reason for flagging, you can pass a list o

As you've seen, Gradio includes components that can handle a variety of different data types, such as images, audio, and video. Most components can be used both as inputs and as outputs.

When a component is used as an input, Gradio automatically handles the *preprocessing* needed to convert the data from a type sent by the user's browser (such as a base64 representation of a webcam snapshot) to a form that can be accepted by your function (such as a `numpy` array).

When a component is used as an input, Gradio automatically handles the _preprocessing_ needed to convert the data from a type sent by the user's browser (such as a base64 representation of a webcam snapshot) to a form that can be accepted by your function (such as a `numpy` array).

Similarly, when a component is used as an output, Gradio automatically handles the *postprocessing* needed to convert the data from what is returned by your function (such as a list of image paths) to a form that can be displayed in the user's browser (such as a `Gallery` of images in base64 format).

Similarly, when a component is used as an output, Gradio automatically handles the _postprocessing_ needed to convert the data from what is returned by your function (such as a list of image paths) to a form that can be displayed in the user's browser (such as a `Gallery` of images in base64 format).

You can control the *preprocessing* using the parameters when constructing the image component. For example, here if you instantiate the `Image` component with the following parameters, it will convert the image to the `PIL` type and reshape it to be `(100, 100)` no matter the original size that it was submitted as:

You can control the _preprocessing_ using the parameters when constructing the image component. For example, here if you instantiate the `Image` component with the following parameters, it will convert the image to the `PIL` type and reshape it to be `(100, 100)` no matter the original size that it was submitted as:

```py
img = gr.Image(shape=(100, 100), type="pil")

@ -229,11 +229,11 @@ Gradio supports the ability to create a custom Progress Bars so that you have cu
$code_progress_simple
$demo_progress_simple

If you use the `tqdm` library, you can even report progress updates automatically from any `tqdm.tqdm` that already exists within your function by setting the default argument as `gr.Progress(track_tqdm=True)`!

## Batch Functions

Gradio supports the ability to pass *batch* functions. Batch functions are just
Gradio supports the ability to pass _batch_ functions. Batch functions are just
functions which take in a list of inputs and return a list of predictions.

For example, here is a batched function that takes in two lists of inputs (a list of

@ -246,12 +246,12 @@ def trim_words(words, lens):
trimmed_words = []
time.sleep(5)
for w, l in zip(words, lens):
trimmed_words.append(w[:int(l)])
return [trimmed_words]
```

The advantage of using batched functions is that if you enable queuing, the Gradio
server can automatically *batch* incoming requests and process them in parallel,
server can automatically _batch_ incoming requests and process them in parallel,
potentially speeding up your demo. Here's what the Gradio code looks like (notice
the `batch=True` and `max_batch_size=16` -- both of these parameters can be passed
into event triggers or into the `Interface` class)
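To make the batch contract concrete: each argument arrives as a list with one entry per queued request, and the return value holds one list per output component. The sketch below calls `trim_words` directly (without the `time.sleep(5)`), then uses a hypothetical `run_in_batches` helper to group requests into batches of at most `max_batch_size`. It illustrates the idea only and is not how Gradio's queue is implemented.

```python
from typing import Callable, List, Sequence, Tuple


def trim_words(words: List[str], lens: List[float]) -> List[List[str]]:
    """The guide's batched function, minus the artificial time.sleep(5)."""
    trimmed_words = []
    for w, l in zip(words, lens):
        trimmed_words.append(w[: int(l)])
    # One inner list per output component; this demo has a single output.
    return [trimmed_words]


def run_in_batches(
    fn: Callable[[List[str], List[float]], List[List[str]]],
    requests: Sequence[Tuple[str, float]],
    max_batch_size: int = 16,
) -> List[str]:
    """Hypothetical helper: call fn once per group of up to max_batch_size requests."""
    results: List[str] = []
    for start in range(0, len(requests), max_batch_size):
        batch = requests[start : start + max_batch_size]
        # Transpose [(word, length), ...] into one list per input argument.
        words = [word for word, _ in batch]
        lens = [length for _, length in batch]
        (outputs,) = fn(words, lens)  # unpack the single output component
        results.extend(outputs)
    return results


print(trim_words(["hello", "world"], [2, 3]))  # [['he', 'wor']]
```

With 20 pending requests and `max_batch_size=16`, the helper calls the function twice (16 requests, then 4). That kind of amortization is what makes batching worthwhile when each call carries fixed overhead, such as a model forward pass.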

@ -259,7 +259,7 @@ into event triggers or into the `Interface` class)

With `Interface`:

```python
demo = gr.Interface(trim_words, ["textbox", "number"], ["output"],
batch=True, max_batch_size=16)
demo.queue()
demo.launch()

@ -292,8 +292,6 @@ generate images in batches](https://github.com/gradio-app/gradio/blob/main/demo/

Note: using batch functions with Gradio **requires** you to enable queuing in the underlying Interface or Blocks (see the queuing section above).

## Colab Notebooks

Gradio is able to run anywhere you run Python, including local Jupyter notebooks as well as collaborative notebooks, such as [Google Colab](https://colab.research.google.com/). In the case of local Jupyter notebooks and Google Colab notebooks, Gradio runs on a local server which you can interact with in your browser. (Note: for Google Colab, this is accomplished by [service worker tunneling](https://github.com/tensorflow/tensorboard/blob/master/docs/design/colab_integration.md), which requires cookies to be enabled in your browser.) For other remote notebooks, Gradio will also run on a server, but you will need to use [SSH tunneling](https://coderwall.com/p/ohk6cg/remote-access-to-ipython-notebooks-via-ssh) to view the app in your local browser. Often a simpler option is to use Gradio's built-in public links, [discussed in the next Guide](https://gradio.app/guides/sharing-your-app/#sharing-demos).

@ -1,6 +1,6 @@
# Sharing Your App

How to share your Gradio app:

1. [Sharing demos with the share parameter](#sharing-demos)
2. [Hosting on HF Spaces](#hosting-on-hf-spaces)

@ -20,9 +20,9 @@ Gradio demos can be easily shared publicly by setting `share=True` in the `launc
demo.launch(share=True)
```

This generates a public, shareable link that you can send to anybody! When you send this link, the user on the other side can try out the model in their browser. Because the processing happens on your device (as long as your device stays on!), you don't have to worry about packaging any dependencies. A share link usually looks something like this: **XXXXX.gradio.app**. Although the link is served through a Gradio URL, we are only a proxy for your local server, and do not store any data sent through your app.

Keep in mind, however, that these links are publicly accessible, meaning that anyone can use your model for prediction! Therefore, make sure not to expose any sensitive information through the functions you write, or allow any critical changes to occur on your device. If you set `share=False` (the default, except in Colab notebooks), only a local link is created, which can be shared by [port-forwarding](https://www.ssh.com/ssh/tunneling/example) with specific users.

@ -30,7 +30,7 @@ Share links expire after 72 hours.

## Hosting on HF Spaces

If you'd like to have a permanent link to your Gradio demo on the internet, use Hugging Face Spaces. [Hugging Face Spaces](http://huggingface.co/spaces/) provides the infrastructure to permanently host your machine learning model for free!

After you have [created a free Hugging Face account](https://huggingface.co/join), you have three methods to deploy your Gradio app to Hugging Face Spaces:

@ -38,13 +38,13 @@ After you have [created a free Hugging Face account](https://huggingface.co/join

2. From your browser: Drag and drop a folder containing your Gradio model and all related files [here](https://huggingface.co/new-space).

3. Connect Spaces with your Git repository and Spaces will pull the Gradio app from there. See [this guide on how to host on Hugging Face Spaces](https://huggingface.co/blog/gradio-spaces) for more information.

<video autoplay muted loop>
<source src="https://github.com/gradio-app/gradio/blob/main/guides/assets/hf_demo.mp4?raw=true" type="video/mp4" />
</video>

Note: Some components, like `gr.Image`, will display a "Share" button only on Spaces, so that users can share the generated output to the Discussions page of the Space easily. You can disable this with `show_share_button`, such as `gr.Image(show_share_button=False)`.

@ -58,28 +58,31 @@ There are two ways to embed your Gradio demos. You can find quick links to both

### Embedding with Web Components

Web components typically offer a better experience to users than IFrames. Web components load lazily, meaning that they won't slow down the loading time of your website, and they automatically adjust their height based on the size of the Gradio app.

To embed with Web Components:

1. Import the gradio JS library into your site by adding the script below (replace {GRADIO_VERSION} in the URL with the library version of Gradio you are using).

```html
<script type="module"
src="https://gradio.s3-us-west-2.amazonaws.com/{GRADIO_VERSION}/gradio.js">
</script>
<script
type="module"
src="https://gradio.s3-us-west-2.amazonaws.com/{GRADIO_VERSION}/gradio.js"
></script>
```

2. Add

```html
<gradio-app src="https://$your_space_host.hf.space"></gradio-app>
```

element where you want to place the app. Set the `src=` attribute to your Space's embed URL, which you can find in the "Embed this Space" button. For example:

```html
<gradio-app src="https://abidlabs-pytorch-image-classifier.hf.space"></gradio-app>
<gradio-app
src="https://abidlabs-pytorch-image-classifier.hf.space"
></gradio-app>
```

<script>

@ -96,21 +99,24 @@ You can see examples of how web components look <a href="https://www.gradio.app"

You can also customize the appearance and behavior of your web component with attributes that you pass into the `<gradio-app>` tag:

* `src`: as we've seen, the `src` attribute links to the URL of the hosted Gradio demo that you would like to embed
* `space`: an optional shorthand if your Gradio demo is hosted on Hugging Face Spaces. Accepts a `username/space_name` instead of a full URL. Example: `gradio/Echocardiogram-Segmentation`. If this attribute is provided, then `src` does not need to be provided.
* `control_page_title`: a boolean designating whether the HTML title of the page should be set to the title of the Gradio app (by default `"false"`)
* `initial_height`: the initial height of the web component while it is loading the Gradio app (by default `"300px"`). Note that the final height is set based on the size of the Gradio app.
* `container`: whether to show the border frame and information about where the Space is hosted (by default `"true"`)
* `info`: whether to show just the information about where the Space is hosted underneath the embedded app (by default `"true"`)
* `autoscroll`: whether to autoscroll to the output when prediction has finished (by default `"false"`)
* `eager`: whether to load the Gradio app as soon as the page loads (by default `"false"`)
* `theme_mode`: whether to use the `dark`, `light`, or default `system` theme mode (by default `"system"`)
- `src`: as we've seen, the `src` attribute links to the URL of the hosted Gradio demo that you would like to embed
- `space`: an optional shorthand if your Gradio demo is hosted on Hugging Face Spaces. Accepts a `username/space_name` instead of a full URL. Example: `gradio/Echocardiogram-Segmentation`. If this attribute is provided, then `src` does not need to be provided.
- `control_page_title`: a boolean designating whether the HTML title of the page should be set to the title of the Gradio app (by default `"false"`)
- `initial_height`: the initial height of the web component while it is loading the Gradio app (by default `"300px"`). Note that the final height is set based on the size of the Gradio app.
- `container`: whether to show the border frame and information about where the Space is hosted (by default `"true"`)
- `info`: whether to show just the information about where the Space is hosted underneath the embedded app (by default `"true"`)
- `autoscroll`: whether to autoscroll to the output when prediction has finished (by default `"false"`)
- `eager`: whether to load the Gradio app as soon as the page loads (by default `"false"`)
- `theme_mode`: whether to use the `dark`, `light`, or default `system` theme mode (by default `"system"`)