mirror of https://github.com/gradio-app/gradio.git
synced 2025-04-24 13:01:18 +08:00

Document python client in gradio.app/docs (#3764)

* document py client
* more changes
* update docs
* changes
* fix tests
* formatting
* stash
* fix tests
* documentation
* more docs
* gradio client
* changes
* rework documentation
* add to docs
* modify dockerfile
* gradio client
* address feedback
* formatting
* gradio client version
* fix tests
* tweaks to website

---------

Co-authored-by: aliabd <ali.si3luwa@gmail.com>

parent 88a487c07c
commit 5f8186218c

client/python

gradio

blocks.py, components.py, events.py, exceptions.py, external.py, flagging.py, helpers.py, interface.py, layouts.py, mix.py, routes.py

requirements.txt, themes

scripts

test

website/homepage

@ -1,22 +1,26 @@

|

||||

# `gradio_client`: Use any Gradio app as an API -- in 3 lines of Python

|

||||

# `gradio_client`: Use a Gradio app as an API -- in 3 lines of Python

|

||||

|

||||

This directory contains the source code for `gradio_client`, a lightweight Python library that makes it very easy to use any Gradio app as an API. Warning: This library is **currently in alpha, and APIs may change**.

|

||||

This directory contains the source code for `gradio_client`, a lightweight Python library that makes it very easy to use any Gradio app as an API.

|

||||

|

||||

As an example, consider the Stable Diffusion Gradio app, which is hosted on Hugging Face Spaces, and which generates images given a text prompt. Using the `gradio_client` library, we can easily use the Gradio as an API to generates images programmatically.

|

||||

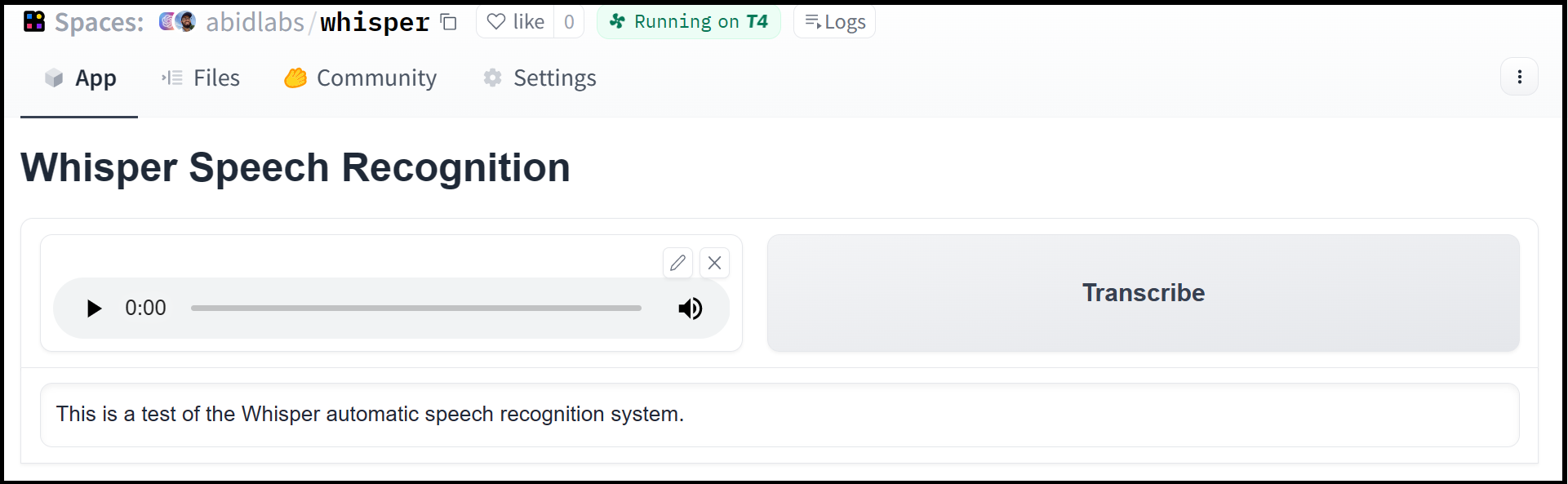

As an example, consider this [Hugging Face Space that transcribes audio files](https://huggingface.co/spaces/abidlabs/whisper) that are recorded from the microphone.

|

||||

|

||||

|

||||

|

||||

Using the `gradio_client` library, we can easily use the Gradio as an API to transcribe audio files programmatically.

|

||||

|

||||

Here's the entire code to do it:

|

||||

|

||||

```python

|

||||

import gradio_client as grc

|

||||

from gradio_client import Client

|

||||

|

||||

client = grc.Client("stabilityai/stable-diffusion")

|

||||

job = client.predict("a hyperrealistic portrait of a cat wearing cyberpunk armor", "", fn_index=1)

|

||||

job.result()

|

||||

|

||||

>> /Users/usersname/b8c26657-df87-4508-aa75-eb37cd38735f # Path to generatoed gallery of images

|

||||

client = Client("abidlabs/whisper")

|

||||

client.predict("audio_sample.wav")

|

||||

|

||||

>> "This is a test of the whisper speech recognition model."

|

||||

```

|

||||

|

||||

The Gradio client works with any Gradio Space, whether it be an image generator, a stateful chatbot, or a tax calculator.

|

||||

|

||||

## Installation

|

||||

|

||||

If you already have a recent version of `gradio`, then the `gradio_client` is included as a dependency.

|

||||

@ -31,73 +35,178 @@ $ pip install gradio_client

### Connecting to a Space or a Gradio app

Start by instantiating a `Client` object and connecting it to a Gradio app
that is running on Spaces (or anywhere else)!

Start by instantiating a `Client` object and connecting it to a Gradio app that is running on Spaces (or anywhere else)!

**Connecting to a Space**

```python
import gradio_client as grc
from gradio_client import Client

client = grc.Client("abidlabs/en2fr")
client = Client("abidlabs/en2fr")  # a Space that translates from English to French
```

You can also connect to private Spaces by passing in your HF token with the `hf_token` parameter. You can get your HF token here: https://huggingface.co/settings/tokens

```python
from gradio_client import Client

client = Client("abidlabs/my-private-space", hf_token="...")
```

**Connecting to a general Gradio app**

If your app is running somewhere else, pass the full URL to the `src` argument instead. Here's an example of connecting to a Gradio app that is running on a share URL:

If your app is running somewhere else, just provide the full URL instead, including the "http://" or "https://". Here's an example of connecting to a Gradio app that is running on a share URL:

```python
import gradio_client as grc
from gradio_client import Client

client = grc.Client(src="btd372-js72hd.gradio.app")
client = Client("https://bec81a83-5b5c-471e.gradio.live")
```
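
The `src` argument takes either a Space name or a full URL. The sketch below shows one way to tell those two forms apart. `classify_src` is a hypothetical helper and is not part of `gradio_client`. The `owner/space-name` pattern it checks is an assumption about what Space IDs look like.

```python
import re

# Hypothetical helper (not part of gradio_client): decide whether a `src`
# string looks like a full URL or a Hugging Face Space ID of the form
# "owner/space-name". The regex is an illustrative assumption.
SPACE_ID = re.compile(r"^[A-Za-z0-9][\w.-]*/[\w.-]+$")


def classify_src(src: str) -> str:
    """Return "url", "space", or "invalid" for a candidate `src` string."""
    if src.startswith(("http://", "https://")):
        return "url"
    if SPACE_ID.match(src):
        return "space"
    # e.g. a bare host such as "btd372-js72hd.gradio.app" has no scheme and no slash
    return "invalid"


for candidate in [
    "abidlabs/en2fr",
    "https://bec81a83-5b5c-471e.gradio.live",
    "btd372-js72hd.gradio.app",
]:
    print(candidate, "->", classify_src(candidate))
```

In this sketch, a scheme-less string such as `mydomain.com/app` would be misclassified as a Space ID. That shows one practical benefit of always including `http://` or `https://` in a URL.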

### Inspecting the API endpoints

Once you have connected to a Gradio app, you can view the APIs that are available to you by calling the `Client.view_api()` method. For the Whisper Space, we see the following:

```
Client.predict() Usage Info
---------------------------
Named API endpoints: 1

 - predict(input_audio, api_name="/predict") -> value_0
    Parameters:
     - [Audio] input_audio: str (filepath or URL)
    Returns:
     - [Textbox] value_0: str (value)
```

This shows us that there is 1 API endpoint in this Space, and how to use it to make a prediction: we should call the `.predict()` method, providing a parameter `input_audio` of type `str`, which is a filepath or URL.
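
With `return_format="dict"`, the `view_api` docstring documents a dictionary. It maps each parameter and return value to `[python type, description, component type]`. Assuming that layout, the sketch below rebuilds usage lines similar to the printed summary above, using the calculator example from the same docstring. `format_usage` is a hypothetical helper, and its output only approximates the real printout.

```python
from typing import Dict, List

# Example dictionary in the shape documented for view_api(return_format="dict"),
# trimmed to the named "/predict" endpoint of the calculator app.
info: Dict[str, Dict[str, Dict[str, Dict[str, List[str]]]]] = {
    "named_endpoints": {
        "/predict": {
            "parameters": {
                "num1": ["int | float", "value", "Number"],
                "operation": ["str", "value", "Radio"],
                "num2": ["int | float", "value", "Number"],
            },
            "returns": {"output": ["int | float", "value", "Number"]},
        }
    },
    "unnamed_endpoints": {},
}


def format_usage(info: dict) -> List[str]:
    """Hypothetical helper: build usage lines for each named endpoint."""
    lines = []
    for api_name, spec in info["named_endpoints"].items():
        params = ", ".join(spec["parameters"])  # dict keys keep insertion order
        returns = ", ".join(spec["returns"])
        lines.append(f'predict({params}, api_name="{api_name}") -> {returns}')
        for name, (py_type, desc, component) in spec["parameters"].items():
            lines.append(f"  - [{component}] {name}: {py_type} ({desc})")
    return lines


for line in format_usage(info):
    print(line)
```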

We should also provide the `api_name='/predict'` argument. Although this isn't necessary if a Gradio app has a single named endpoint, it does allow us to call different endpoints in a single app if they are available. If an app has unnamed API endpoints, these can also be displayed by running `.view_api(all_endpoints=True)`.
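
The `predict()` and `submit()` docstrings state two rules. First, `api_name` may be omitted when the app has only one named endpoint. Second, `api_name` takes precedence over `fn_index` if both are given and conflict. The sketch below implements that resolution logic. `resolve_endpoint` and its `named_endpoints` mapping (API name to function index) are assumptions for illustration; this is not the client's internal `_infer_fn_index`.

```python
from typing import Dict, Optional


def resolve_endpoint(
    named_endpoints: Dict[str, int],
    api_name: Optional[str] = None,
    fn_index: Optional[int] = None,
) -> int:
    """Hypothetical sketch: pick the function index to call.

    named_endpoints maps API names such as "/predict" to function indices.
    """
    if api_name is not None:
        # api_name takes precedence over fn_index when both are given
        if api_name not in named_endpoints:
            raise ValueError(f"Cannot find a named endpoint called {api_name!r}")
        return named_endpoints[api_name]
    if fn_index is not None:
        return fn_index
    if len(named_endpoints) == 1:
        # a single named endpoint needs no api_name
        return next(iter(named_endpoints.values()))
    raise ValueError("Provide api_name or fn_index: this app has several endpoints")


endpoints = {"/predict": 0, "/count": 3}
print(resolve_endpoint(endpoints, api_name="/count", fn_index=0))  # api_name wins
print(resolve_endpoint({"/predict": 0}))  # only one named endpoint
```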

### Making a prediction

The simplest way to make a prediction is to call the `.predict()` function with the appropriate arguments and then immediately call `.result()`, like this:

The simplest way to make a prediction is to call the `.predict()` function with the appropriate arguments:

```python
import gradio_client as grc
from gradio_client import Client

client = grc.Client(space="abidlabs/en2fr")
client.predict("Hello").result()
client = Client("abidlabs/en2fr")
client.predict("Hello")

>> Bonjour
```

**Running jobs asynchronously**

If there are multiple parameters, then you should pass them as separate arguments to `.predict()`, like this:

One should note that `.result()` is a *blocking* operation, as it waits for the operation to complete before returning the prediction.

In many cases, you may be better off letting the job run asynchronously and waiting to call `.result()` when you need the results of the prediction. For example:

```python
import gradio_client as grc
from gradio_client import Client

client = grc.Client(space="abidlabs/en2fr")
client = Client("gradio/calculator")
client.predict(4, "add", 5)

job = client.predict("Hello")
>> 9.0
```
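
The `predict()` docstring notes that positional arguments are matched to the app's inputs by position: "The order of the arguments must match the order of the inputs in the Gradio app". The sketch below makes that mapping explicit for the calculator inputs listed in the `view_api` example. `bind_args` is a hypothetical helper for illustration and is not part of `gradio_client`.

```python
from typing import Any, Dict, List


def bind_args(parameter_names: List[str], *args: Any) -> Dict[str, Any]:
    """Hypothetical helper: map positional arguments onto input names by position."""
    if len(args) != len(parameter_names):
        raise TypeError(
            f"Expected {len(parameter_names)} arguments "
            f"({', '.join(parameter_names)}), got {len(args)}"
        )
    return dict(zip(parameter_names, args))


# Input order for the calculator app, as listed by view_api(return_format="dict")
calculator_inputs = ["num1", "operation", "num2"]
print(bind_args(calculator_inputs, 4, "add", 5))
```

Only the argument count is checked here. Passing `"add", 4, 5` would bind without complaint and only fail later, so the order shown by `view_api()` is worth double-checking.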

For certain inputs, such as images, you should pass in the filepath or URL to the file. Likewise, for the corresponding output types, you will get a filepath or URL returned.

```python
from gradio_client import Client

client = Client("abidlabs/whisper")
client.predict("https://audio-samples.github.io/samples/mp3/blizzard_unconditional/sample-0.mp3")

>> "My thought I have nobody by a beauty and will as you poured. Mr. Rochester is serve in that so don't find simpus, and devoted abode, to at might in a r—"
```

**Running jobs asynchronously**

One should note that `.predict()` is a *blocking* operation, as it waits for the operation to complete before returning the prediction.

In many cases, you may be better off letting the job run in the background until you need the results of the prediction. You can do this by creating a `Job` instance using the `.submit()` method, and then later calling `.result()` on the job to get the result. For example:

```python
from gradio_client import Client

client = Client("abidlabs/en2fr")
job = client.submit("Hello", api_name="/predict")  # This is not blocking

# Do something else

job.result()
job.result()  # This is blocking

>> Bonjour
```
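
In the client source changed by this commit, `predict()` returns `self.submit(...).result()`, so the blocking and non-blocking paths share one code path. The toy class below mimics that relationship with `concurrent.futures` from the standard library. `ToyClient` and its local `translate` function stand in for a remote Space and are assumptions made for illustration only.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeoutError
from typing import Any, Callable


class ToyClient:
    """Illustrative stand-in for a client: runs a local function in a thread pool."""

    def __init__(self, fn: Callable[..., Any], max_workers: int = 4):
        self.fn = fn
        self.executor = ThreadPoolExecutor(max_workers=max_workers)

    def submit(self, *args: Any) -> Future:
        # Non-blocking: schedule the call and return a Future immediately
        return self.executor.submit(self.fn, *args)

    def predict(self, *args: Any) -> Any:
        # Blocking: the same path as submit(), followed by waiting for the result
        return self.submit(*args).result()


def translate(text: str) -> str:
    time.sleep(0.2)  # simulate network and model latency
    return {"Hello": "Bonjour"}.get(text, text)


client = ToyClient(translate)

job = client.submit("Hello")  # returns at once
# ... do something else here ...
print(job.result())  # waits until the value is ready

print(client.predict("Hello"))  # submit + result in one blocking call

try:
    client.submit("Hello").result(timeout=0.01)  # too short for the 0.2 s task
except FutureTimeoutError:
    print("timed out")
```

In this sketch the task keeps running after a timeout; only the wait is abandoned.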

**Adding callbacks**

Alternatively, one can add callbacks to perform actions after the job has completed running, like this:

Alternatively, one can add one or more callbacks to perform actions after the job has completed running, like this:

```python
import gradio_client as grc

from gradio_client import Client

def print_result(x):
    print(f"The translated result is: {x}")

client = grc.Client(space="abidlabs/en2fr")
client = Client("abidlabs/en2fr")

job = client.submit("Hello", api_name="/predict", result_callbacks=[print_result])

# Do something else

>> The translated result is: Bonjour

job = client.predict("Hello", callbacks=[print_result])
```
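
The `submit()` docstring makes two promises about callbacks. They run in order once the result is ready, and the remote API's return values are passed to them as separate parameters. Assuming a tuple result stands for multiple outputs, the sketch below reproduces that behavior with the standard library's `Future.add_done_callback`. `submit_with_callbacks` is hypothetical and is not taken from the `gradio_client` implementation.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable, List, Optional, Union

Callback = Callable[..., Any]


def submit_with_callbacks(
    executor: ThreadPoolExecutor,
    fn: Callable[..., Any],
    *args: Any,
    result_callbacks: Optional[Union[Callback, List[Callback]]] = None,
) -> Future:
    """Hypothetical sketch: run fn in the background, then call each callback in order."""
    if result_callbacks is None:
        callbacks: List[Callback] = []
    elif callable(result_callbacks):
        callbacks = [result_callbacks]
    else:
        callbacks = list(result_callbacks)

    def run_callbacks(future: Future) -> None:
        if future.cancelled() or future.exception() is not None:
            return  # no result to hand to the callbacks
        result = future.result()
        # A tuple is treated as multiple outputs and spread into separate parameters
        values = result if isinstance(result, tuple) else (result,)
        for callback in callbacks:
            callback(*values)

    future = executor.submit(fn, *args)
    future.add_done_callback(run_callbacks)
    return future


received: List[Any] = []


def print_result(x: str) -> None:
    print(f"The translated result is: {x}")
    received.append(x)


def translate(text: str) -> str:
    return "Bonjour" if text == "Hello" else text


def translate_with_length(text: str):
    return translate(text), len(text)  # two outputs


def log_both(translation: str, length: int) -> None:
    received.append((translation, length))


with ThreadPoolExecutor(max_workers=2) as pool:
    submit_with_callbacks(pool, translate, "Hello", result_callbacks=print_result)
    submit_with_callbacks(pool, translate_with_length, "Hello", result_callbacks=[log_both])
# Leaving the with-block waits for both jobs, and therefore for their callbacks
```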

**Status**

The `Job` object also allows you to get the status of the running job by calling the `.status()` method. This returns a `StatusUpdate` object with the following attributes: `code` (the status code, one of a set of defined strings representing the status; see the `utils.Status` class), `rank` (the current position of this job in the queue), `queue_size` (the total queue size), `eta` (the estimated time for this job to complete), `success` (a boolean representing whether the job completed successfully), and `time` (the time that the status was generated).

```py
from gradio_client import Client

client = Client(src="gradio/calculator")
job = client.submit(5, "add", 4, api_name="/predict")
job.status().code

>> <Status.STARTING: 'STARTING'>
```

The `Job` object also has a `done()` instance method, which returns a boolean indicating whether the job has completed.
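
Because `status()` returns a snapshot and `done()` returns a boolean, a caller can poll both while waiting. The sketch below models that loop with a simplified `Status` enum and `StatusUpdate` dataclass that borrow the attribute names listed above. Both are illustrative assumptions rather than the definitions in `gradio_client.utils`. The job is a local `Future`, so `rank`, `queue_size`, and `eta` are left as `None`.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from dataclasses import dataclass
from datetime import datetime
from enum import Enum
from typing import List, Optional


class Status(Enum):
    # Illustrative subset of status codes for this sketch
    STARTING = "STARTING"
    PROCESSING = "PROCESSING"
    FINISHED = "FINISHED"


@dataclass
class StatusUpdate:
    code: Status
    rank: Optional[int]
    queue_size: Optional[int]
    eta: Optional[float]
    success: Optional[bool]
    time: datetime


def snapshot(future: Future) -> StatusUpdate:
    """Hypothetical helper: derive a status snapshot from a local Future (no queue info)."""
    if future.done():
        succeeded = not future.cancelled() and future.exception() is None
        return StatusUpdate(Status.FINISHED, None, None, None, succeeded, datetime.now())
    code = Status.PROCESSING if future.running() else Status.STARTING
    return StatusUpdate(code, None, None, None, None, datetime.now())


def slow_add(a: float, b: float) -> float:
    time.sleep(0.1)  # stand-in for a remote calculator call
    return a + b


seen: List[Status] = []
with ThreadPoolExecutor(max_workers=1) as pool:
    job = pool.submit(slow_add, 5, 4)
    while not job.done():
        seen.append(snapshot(job).code)  # poll without blocking on the result
        time.sleep(0.01)
    final = snapshot(job)

print(final.code, final.success, job.result())
```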

### Generator Endpoints

Some Gradio API endpoints do not return a single value; rather, they return a series of values. You can get the series of values returned so far by such a generator endpoint at any time by running `job.outputs()`:

```py
import time

from gradio_client import Client

client = Client(src="gradio/count_generator")
job = client.submit(3, api_name="/count")
while not job.done():
    time.sleep(0.1)
job.outputs()

>> ['0', '1', '2']
```

Note that running `job.result()` on a generator endpoint only gives you the *first* value returned by the endpoint.

The `Job` object is also iterable, which means you can use it to display the results of a generator function as they are returned from the endpoint. Here's the equivalent example using the `Job` as a generator:

```py
from gradio_client import Client

client = Client(src="gradio/count_generator")
job = client.submit(3, api_name="/count")

for o in job:
    print(o)

>> 0
>> 1
>> 2
```
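
The sketch below shows how one object can offer both a growing `outputs()` list and iteration, using a background thread and a condition variable. `GeneratorJob` is hypothetical. It only assumes the behavior described above: outputs accumulate as they arrive, and `result()` returns the first value. The real `Job` instead reads outputs from a `Communicator` under its lock, which the client updates from a websocket connection.

```python
import threading
import time
from typing import Any, Callable, Iterator, List


class GeneratorJob:
    """Hypothetical sketch: collect values from a generator in a background thread."""

    def __init__(self, gen_fn: Callable[..., Iterator[Any]], *args: Any):
        self._outputs: List[Any] = []
        self._finished = False
        self._cond = threading.Condition()
        self._thread = threading.Thread(target=self._run, args=(gen_fn, *args), daemon=True)
        self._thread.start()

    def _run(self, gen_fn: Callable[..., Iterator[Any]], *args: Any) -> None:
        try:
            for value in gen_fn(*args):
                with self._cond:
                    self._outputs.append(value)
                    self._cond.notify_all()  # wake any waiting iterator or result() call
        finally:
            with self._cond:
                self._finished = True
                self._cond.notify_all()

    def done(self) -> bool:
        with self._cond:
            return self._finished

    def outputs(self) -> List[Any]:
        # Return a copy so callers never see the list change under them
        with self._cond:
            return list(self._outputs)

    def result(self) -> Any:
        # Mirrors the README note: only the first value is returned
        with self._cond:
            self._cond.wait_for(lambda: self._outputs or self._finished)
            return self._outputs[0] if self._outputs else None

    def __iter__(self) -> Iterator[Any]:
        index = 0
        while True:
            with self._cond:
                self._cond.wait_for(lambda: index < len(self._outputs) or self._finished)
                if index >= len(self._outputs):
                    return  # generator finished and every value was yielded
                value = self._outputs[index]
            index += 1
            yield value  # yield outside the lock so the producer is never blocked


def count(n: int) -> Iterator[str]:
    for i in range(n):
        time.sleep(0.05)
        yield str(i)


job = GeneratorJob(count, 3)
for o in job:
    print(o)
print(job.outputs())
print(job.result())
```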

@ -20,11 +20,32 @@ from packaging import version
from typing_extensions import Literal

from gradio_client import serializing, utils
from gradio_client.documentation import document, set_documentation_group
from gradio_client.serializing import Serializable
from gradio_client.utils import Communicator, JobStatus, Status, StatusUpdate

set_documentation_group("py-client")


@document("predict", "submit", "view_api")
class Client:
    """
    The main Client class for the Python client. This class is used to connect to a remote Gradio app and call its API endpoints.

    Example:
        from gradio_client import Client

        client = Client("abidlabs/whisper-large-v2")  # connecting to a Hugging Face Space
        client.predict("test.mp4", api_name="/predict")
        >> What a nice recording! # returns the result of the remote API call

        client = Client("https://bec81a83-5b5c-471e.gradio.live")  # connecting to a temporary Gradio share URL
        job = client.submit("hello", api_name="/predict")  # runs the prediction in a background thread
        job.result()
        >> 49 # returns the result of the remote API call (blocking call)

    """

    def __init__(
        self,
        src: str,

@ -33,7 +54,7 @@ class Client:
    ):
        """
        Parameters:
            src: Either the name of the Hugging Face Space to load (e.g. "abidlabs/pictionary") or the full URL (including "http" or "https") of the hosted Gradio app to load (e.g. "http://mydomain.com/app" or "https://bec81a83-5b5c-471e.gradio.live/").
            src: Either the name of the Hugging Face Space to load (e.g. "abidlabs/whisper-large-v2") or the full URL (including "http" or "https") of the hosted Gradio app to load (e.g. "http://mydomain.com/app" or "https://bec81a83-5b5c-471e.gradio.live/").
            hf_token: The Hugging Face token to use to access private Spaces. Automatically fetched if you are logged in via the Hugging Face Hub CLI.
            max_workers: The maximum number of thread workers that can be used to make requests to the remote Gradio app simultaneously.
        """

@ -76,16 +97,49 @@ class Client:
        *args,
        api_name: str | None = None,
        fn_index: int | None = None,
    ) -> Any:
        """
        Calls the Gradio API and returns the result (this is a blocking call).

        Parameters:
            args: The arguments to pass to the remote API. The order of the arguments must match the order of the inputs in the Gradio app.
            api_name: The name of the API endpoint to call starting with a leading slash, e.g. "/predict". Does not need to be provided if the Gradio app has only one named API endpoint.
            fn_index: As an alternative to api_name, this parameter takes the index of the API endpoint to call, e.g. 0. Both api_name and fn_index can be provided, but if they conflict, api_name will take precedence.
        Returns:
            The result of the API call. Will be a Tuple if the API has multiple outputs.
        Example:
            from gradio_client import Client
            client = Client(src="gradio/calculator")
            client.predict(5, "add", 4, api_name="/predict")
            >> 9.0
        """
        return self.submit(*args, api_name=api_name, fn_index=fn_index).result()

    def submit(
        self,
        *args,
        api_name: str | None = None,
        fn_index: int | None = None,
        result_callbacks: Callable | List[Callable] | None = None,
    ) -> Job:
        """
        Creates and returns a Job object which calls the Gradio API in a background thread. The job can be used to retrieve the status and result of the remote API call.

        Parameters:
            *args: The arguments to pass to the remote API. The order of the arguments must match the order of the inputs in the Gradio app.
            args: The arguments to pass to the remote API. The order of the arguments must match the order of the inputs in the Gradio app.
            api_name: The name of the API endpoint to call starting with a leading slash, e.g. "/predict". Does not need to be provided if the Gradio app has only one named API endpoint.
            fn_index: The index of the API endpoint to call, e.g. 0. Both api_name and fn_index can be provided, but if they conflict, api_name will take precedence.
            fn_index: As an alternative to api_name, this parameter takes the index of the API endpoint to call, e.g. 0. Both api_name and fn_index can be provided, but if they conflict, api_name will take precedence.
            result_callbacks: A callback function, or list of callback functions, to be called when the result is ready. If a list of functions is provided, they will be called in order. The return values from the remote API are provided as separate parameters into the callback. If None, no callback will be called.
        Returns:
            A Job object that can be used to retrieve the status and result of the remote API call.
        Example:
            from gradio_client import Client
            client = Client(src="gradio/calculator")
            job = client.submit(5, "add", 4, api_name="/predict")
            job.status()
            >> <Status.STARTING: 'STARTING'>
            job.result()  # blocking call
            >> 9.0
        """
        inferred_fn_index = self._infer_fn_index(api_name, fn_index)

@ -124,29 +178,45 @@ class Client:
        return_format: Literal["dict", "str"] | None = None,
    ) -> Dict | str | None:
        """
        Prints the usage info for the API. If the Gradio app has multiple API endpoints, the usage info for each endpoint will be printed separately.
        Prints the usage info for the API. If the Gradio app has multiple API endpoints, the usage info for each endpoint will be printed separately. If return_format="dict" the info is returned in dictionary format, as shown in the example below.

        Parameters:
            all_endpoints: If True, prints information for both named and unnamed endpoints in the Gradio app. If False, will only print info about named endpoints. If None (default), will only print info about unnamed endpoints if there are no named endpoints.
            print_info: If True, prints the usage info to the console. If False, does not print the usage info.
            return_format: If None, nothing is returned. If "str", returns the same string that would be printed to the console. If "dict", returns the usage info as a dictionary that can be programmatically parsed, and *all endpoints are returned in the dictionary* regardless of the value of `all_endpoints`. The format of the dictionary is in the docstring of this method.
            Dictionary format:
            {
                "named_endpoints": {
                    "endpoint_1_name": {
                        "parameters": {
                            "parameter_1_name": ["python type", "description", "component_type"],
                            "parameter_2_name": ["python type", "description", "component_type"],
                        },
                        "returns": {
                            "value_1_name": ["python type", "description", "component_type"],
        Example:
            from gradio_client import Client
            client = Client(src="gradio/calculator")
            client.view_api(return_format="dict")
            >> {
                'named_endpoints': {
                    '/predict': {
                        'parameters': {
                            'num1': ['int | float', 'value', 'Number'],
                            'operation': ['str', 'value', 'Radio'],
                            'num2': ['int | float', 'value', 'Number']
                        },
                        'returns': {
                            'output': ['int | float', 'value', 'Number']
                        }
                    }
                },
                'unnamed_endpoints': {
                    2: {
                        'parameters': {
                            'parameter_0': ['str', 'value', 'Dataset']
                        },
                        'returns': {
                            'num1': ['int | float', 'value', 'Number'],
                            'operation': ['str', 'value', 'Radio'],
                            'num2': ['int | float', 'value', 'Number'],
                            'output': ['int | float', 'value', 'Number']
                        }
                    }
                    ...
                "unnamed_endpoints": {
                    "fn_index_1": {
                        ...
                    }
                    ...
                }
            }

        """
        info: Dict[str, Dict[str | int, Dict[str, Dict[str, List[str]]]]] = {
            "named_endpoints": {},

@ -549,14 +619,28 @@ class Endpoint:
            return await utils.get_pred_from_ws(websocket, data, hash_data, helper)


@document("result", "outputs", "status")
class Job(Future):
    """A Job is a thin wrapper over the Future class that can be cancelled."""
    """
    A Job is a wrapper over the Future class that represents a prediction call that has been
    submitted by the Gradio client. This class is not meant to be instantiated directly, but rather
    is created by the Client.submit() method.

    A Job object includes methods to get the status of the prediction call, as well as to get the outputs of
    the prediction call. Job objects are also iterable, and can be used in a loop to get the outputs
    of prediction calls as they become available for generator endpoints.
    """

    def __init__(
        self,
        future: Future,
        communicator: Communicator | None = None,
    ):
        """
        Parameters:
            future: The future object that represents the prediction call, created by the Client.submit() method
            communicator: The communicator object that is used to communicate between the client and the background thread running the job
        """
        self.future = future
        self.communicator = communicator
        self._counter = 0

@ -581,37 +665,20 @@ class Job(Future):
                if self.communicator.job.latest_status.code == Status.FINISHED:
                    raise StopIteration()

    def outputs(self) -> List[Tuple | Any]:
        """Returns a list containing the latest outputs from the Job.

        If the endpoint has multiple output components, the list will contain
        a tuple of results. Otherwise, it will contain the results without storing them
        in tuples.

        For endpoints that are queued, this list will contain the final job output even
        if that endpoint does not use a generator function.
    def result(self, timeout=None) -> Any:
        """
        if not self.communicator:
            return []
        else:
            with self.communicator.lock:
                return self.communicator.job.outputs

    def result(self, timeout=None):
        """Return the result of the call that the future represents.

        Args:
            timeout: The number of seconds to wait for the result if the future
                isn't done. If None, then there is no limit on the wait time.
        Return the result of the call that the future represents. Raises CancelledError if the future was cancelled, TimeoutError if the future didn't finish executing before the given timeout, and re-raises any exception raised by the call itself.

        Parameters:
            timeout: The number of seconds to wait for the result if the future isn't done. If None, then there is no limit on the wait time.
        Returns:
            The result of the call that the future represents.

        Raises:
            CancelledError: If the future was cancelled.
            TimeoutError: If the future didn't finish executing before the given
                timeout.
            Exception: If the call raised then that exception will be raised.
        Example:
            from gradio_client import Client
            calculator = Client(src="gradio/calculator")
            job = calculator.submit(5, "add", 4, fn_index=0)
            job.result(timeout=5)
            >> 9
        """
        if self.communicator:
            timeout = timeout or float("inf")

@ -633,7 +700,46 @@ class Job(Future):
        else:
            return super().result(timeout=timeout)

    def outputs(self) -> List[Tuple | Any]:
        """
        Returns a list containing the latest outputs from the Job.

        If the endpoint has multiple output components, the list will contain
        a tuple of results. Otherwise, it will contain the results without storing them
        in tuples.

        For endpoints that are queued, this list will contain the final job output even
        if that endpoint does not use a generator function.

        Example:
            from gradio_client import Client
            client = Client(src="gradio/count_generator")
            job = client.submit(3, api_name="/count")
            while not job.done():
                time.sleep(0.1)
            job.outputs()
            >> ['0', '1', '2']
        """
        if not self.communicator:
            return []
        else:
            with self.communicator.lock:
                return self.communicator.job.outputs

    def status(self) -> StatusUpdate:
        """
        Returns the latest status update from the Job in the form of a StatusUpdate
        object, which contains the following fields: code, rank, queue_size, success, time, eta.

        Example:
            from gradio_client import Client
            client = Client(src="gradio/calculator")
            job = client.submit(5, "add", 4, api_name="/predict")
            job.status()
            >> <Status.STARTING: 'STARTING'>
            job.status().eta
            >> 43.241 # seconds
        """
        time = datetime.now()
        if self.done():
            if not self.future._exception:  # type: ignore

@ -671,14 +777,3 @@ class Job(Future):
    def __getattr__(self, name):
        """Forwards any properties to the Future class."""
        return getattr(self.future, name)

    def cancel(self) -> bool:
        """Cancels the job."""
        if self.future.cancelled() or self.future.done():
            pass
            return False
        elif self.future.running():
            pass  # TODO: Handle this case
            return True
        else:
            return self.future.cancel()

@ -93,6 +93,8 @@ def document_fn(fn: Callable, cls) -> Tuple[str, List[Dict], Dict, str | None]:
            if mode == "description":
                description.append(line if line.strip() else "<br>")
                continue
            if not (line.startswith(" ") or line.strip() == ""):
                print(line)
            assert (
                line.startswith(" ") or line.strip() == ""
            ), f"Documentation format for {fn.__name__} has format error in line: {line}"
@ -114,7 +116,7 @@ def document_fn(fn: Callable, cls) -> Tuple[str, List[Dict], Dict, str | None]:
    for param_name, param in signature.parameters.items():
        if param_name.startswith("_"):
            continue
        if param_name == "kwargs" and param_name not in parameters:
        if param_name in ["kwargs", "args"] and param_name not in parameters:
            continue
        parameter_doc = {
            "name": param_name,
@ -130,8 +132,11 @@ def document_fn(fn: Callable, cls) -> Tuple[str, List[Dict], Dict, str | None]:
            if default.__class__.__module__ != "builtins":
                default = f"{default.__class__.__name__}()"
            parameter_doc["default"] = default
        elif parameter_doc["doc"] is not None and "kwargs" in parameter_doc["doc"]:
            parameter_doc["kwargs"] = True
        elif parameter_doc["doc"] is not None:
            if "kwargs" in parameter_doc["doc"]:
                parameter_doc["kwargs"] = True
            if "args" in parameter_doc["doc"]:
                parameter_doc["args"] = True
        parameter_docs.append(parameter_doc)
    assert (
        len(parameters) == 0

@ -1 +1 @@
0.0.6b10
0.0.8

@ -21,7 +21,7 @@ class TestPredictionsFromSpaces:
    @pytest.mark.flaky
    def test_numerical_to_label_space(self):
        client = Client("gradio-tests/titanic-survival")
        output = client.predict("male", 77, 10, api_name="/predict").result()
        output = client.predict("male", 77, 10, api_name="/predict")
        assert json.load(open(output))["label"] == "Perishes"
        with pytest.raises(
            ValueError,
@ -37,34 +37,34 @@ class TestPredictionsFromSpaces:
    @pytest.mark.flaky
    def test_private_space(self):
        client = Client("gradio-tests/not-actually-private-space", hf_token=HF_TOKEN)
        output = client.predict("abc", api_name="/predict").result()
        output = client.predict("abc", api_name="/predict")
        assert output == "abc"

    @pytest.mark.flaky
    def test_state(self):
        client = Client("gradio-tests/increment")
        output = client.predict(api_name="/increment_without_queue").result()
        output = client.predict(api_name="/increment_without_queue")
        assert output == 1
        output = client.predict(api_name="/increment_without_queue").result()
        output = client.predict(api_name="/increment_without_queue")
        assert output == 2
        output = client.predict(api_name="/increment_without_queue").result()
        output = client.predict(api_name="/increment_without_queue")
        assert output == 3
        client.reset_session()
        output = client.predict(api_name="/increment_without_queue").result()
        output = client.predict(api_name="/increment_without_queue")
        assert output == 1
        output = client.predict(api_name="/increment_with_queue").result()
        output = client.predict(api_name="/increment_with_queue")
        assert output == 2
        client.reset_session()
        output = client.predict(api_name="/increment_with_queue").result()
        output = client.predict(api_name="/increment_with_queue")
        assert output == 1
        output = client.predict(api_name="/increment_with_queue").result()
        output = client.predict(api_name="/increment_with_queue")
        assert output == 2

    @pytest.mark.flaky
    def test_job_status(self):
        statuses = []
        client = Client(src="gradio/calculator")
        job = client.predict(5, "add", 4)
        job = client.submit(5, "add", 4)
        while not job.done():
            time.sleep(0.1)
            statuses.append(job.status())

@ -82,7 +82,7 @@ class TestPredictionsFromSpaces:

|

||||

def test_job_status_queue_disabled(self):

|

||||

statuses = []

|

||||

client = Client(src="freddyaboulton/sentiment-classification")

|

||||

job = client.predict("I love the gradio python client", api_name="/classify")

|

||||

job = client.submit("I love the gradio python client", api_name="/classify")

|

||||

while not job.done():

|

||||

time.sleep(0.02)

|

||||

statuses.append(job.status())

|

||||

@ -94,7 +94,7 @@ class TestPredictionsFromSpaces:

|

||||

self,

|

||||

):

|

||||

client = Client(src="gradio/count_generator")

|

||||

job = client.predict(3, api_name="/count")

|

||||

job = client.submit(3, api_name="/count")

|

||||

|

||||

while not job.done():

|

||||

time.sleep(0.1)

|

||||

@@ -102,14 +102,14 @@ class TestPredictionsFromSpaces:
         assert job.outputs() == [str(i) for i in range(3)]

         outputs = []
-        for o in client.predict(3, api_name="/count"):
+        for o in client.submit(3, api_name="/count"):
             outputs.append(o)
         assert outputs == [str(i) for i in range(3)]

     @pytest.mark.flaky
     def test_break_in_loop_if_error(self):
         calculator = Client(src="gradio/calculator")
-        job = calculator.predict("foo", "add", 4, fn_index=0)
+        job = calculator.submit("foo", "add", 4, fn_index=0)
         output = [o for o in job]
         assert output == []

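The `count_generator` tests cover a job whose function yields several values. Iterating over the job yields each output as it arrives, and `job.outputs()` returns everything collected so far. `test_break_in_loop_if_error` shows that iterating over a job that failed simply ends. The sketch below reproduces that contract with a background thread and a `threading.Condition`. `StreamingJob`, `count` and `broken` are hypothetical, and this is not how `gradio_client`'s job class is implemented.

```python
import threading
from typing import Callable, Iterator, List, Optional


class StreamingJob:
    """Hypothetical job that runs a generator function in a background thread."""

    def __init__(self, gen_fn: Callable[[], Iterator[str]]) -> None:
        self._outputs: List[str] = []
        self._finished = False
        self.error: Optional[BaseException] = None
        self._cond = threading.Condition()
        threading.Thread(target=self._run, args=(gen_fn,), daemon=True).start()

    def _run(self, gen_fn: Callable[[], Iterator[str]]) -> None:
        try:
            for value in gen_fn():
                with self._cond:
                    self._outputs.append(value)
                    self._cond.notify_all()
        except Exception as exc:  # record the failure instead of crashing the thread
            self.error = exc
        finally:
            with self._cond:
                self._finished = True
                self._cond.notify_all()

    def done(self) -> bool:
        with self._cond:
            return self._finished

    def outputs(self) -> List[str]:
        with self._cond:
            return list(self._outputs)

    def __iter__(self) -> Iterator[str]:
        index = 0
        while True:
            with self._cond:
                # Wait until there is a new output or the generator has stopped.
                while index >= len(self._outputs) and not self._finished:
                    self._cond.wait()
                if index >= len(self._outputs):
                    return  # finished (successfully or not) and nothing left to yield
                value = self._outputs[index]
                index += 1
            yield value  # yield outside the lock so the producer is never blocked


def count(n: int) -> Iterator[str]:
    for i in range(n):
        yield str(i)


def broken() -> Iterator[str]:
    raise ValueError("bad input")
    yield  # unreachable; only makes this a generator function
```

For example, `list(StreamingJob(lambda: count(3)))` gives `["0", "1", "2"]`, and iterating over `StreamingJob(broken)` yields nothing, which mirrors `assert output == []` in the test.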
@@ -117,33 +117,33 @@ class TestPredictionsFromSpaces:
     def test_timeout(self):
         with pytest.raises(TimeoutError):
             client = Client(src="gradio/count_generator")
-            job = client.predict(api_name="/sleep")
+            job = client.submit(api_name="/sleep")
             job.result(timeout=0.05)

     @pytest.mark.flaky
     def test_timeout_no_queue(self):
         with pytest.raises(TimeoutError):
             client = Client(src="freddyaboulton/sentiment-classification")
-            job = client.predict(api_name="/sleep")
+            job = client.submit(api_name="/sleep")
             job.result(timeout=0.1)

     @pytest.mark.flaky
     def test_raises_exception(self):
         with pytest.raises(Exception):
             client = Client(src="freddyaboulton/calculator")
-            job = client.predict("foo", "add", 9, fn_index=0)
+            job = client.submit("foo", "add", 9, fn_index=0)
             job.result()

     @pytest.mark.flaky
     def test_raises_exception_no_queue(self):
         with pytest.raises(Exception):
             client = Client(src="freddyaboulton/sentiment-classification")
-            job = client.predict([5], api_name="/sleep")
+            job = client.submit([5], api_name="/sleep")
             job.result()

     def test_job_output_video(self):
         client = Client(src="gradio/video_component")
-        job = client.predict(
+        job = client.submit(
             "https://huggingface.co/spaces/gradio/video_component/resolve/main/files/a.mp4",
             fn_index=0,
         )

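These tests depend on two properties of the job returned by `submit`. First, `result(timeout=...)` raises a timeout error instead of waiting indefinitely. Second, an exception raised by the remote function surfaces when `result()` is called. In the rewritten increment test, `predict` returns the value directly. The sketch below reproduces those semantics with `concurrent.futures`. The local `submit`/`predict` helpers are hypothetical and only mirror the shape of the client methods. They are not the library's code.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeoutError
from typing import Any, Callable

_pool = ThreadPoolExecutor(max_workers=4)


def submit(fn: Callable[..., Any], *args: Any) -> Future:
    """Hypothetical non-blocking call: return a future straight away."""
    return _pool.submit(fn, *args)


def predict(fn: Callable[..., Any], *args: Any) -> Any:
    """Hypothetical blocking call: submit, then wait for the result."""
    return submit(fn, *args).result()


def sleepy() -> str:
    time.sleep(0.5)
    return "awake"


def add(a: Any, b: Any) -> Any:
    return a + b


# A short timeout on a slow job raises instead of waiting.
# The job itself keeps running in the background; only the wait is abandoned.
try:
    submit(sleepy).result(timeout=0.05)
    timed_out = False
except FutureTimeoutError:
    timed_out = True

# An exception raised inside the job is re-raised in the caller by .result().
try:
    predict(add, "foo", 9)
    raised = False
except TypeError:
    raised = True
```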
@@ -220,7 +220,7 @@ class TestStatusUpdates:
         mock_make_end_to_end_fn.side_effect = MockEndToEndFunction

         client = Client(src="gradio/calculator")
-        job = client.predict(5, "add", 6)
+        job = client.submit(5, "add", 6)

         statuses = []
         while not job.done():

@@ -292,8 +292,8 @@ class TestStatusUpdates:
         mock_make_end_to_end_fn.side_effect = MockEndToEndFunction

         client = Client(src="gradio/calculator")
-        job_1 = client.predict(5, "add", 6)
-        job_2 = client.predict(11, "subtract", 1)
+        job_1 = client.submit(5, "add", 6)
+        job_2 = client.submit(11, "subtract", 1)

         statuses_1 = []
         statuses_2 = []

11

client/python/test/test_documentation.py

Normal file

@@ -0,0 +1,11 @@
+import os
+
+from gradio_client import documentation
+
+os.environ["GRADIO_ANALYTICS_ENABLED"] = "False"
+
+
+class TestDocumentation:
+    def test_website_documentation(self):
+        docs = documentation.generate_documentation()
+        assert len(docs) > 0

@@ -19,6 +19,7 @@ import requests
 from anyio import CapacityLimiter
 from gradio_client import serializing
 from gradio_client import utils as client_utils
+from gradio_client.documentation import document, set_documentation_group
 from typing_extensions import Literal

 from gradio import (

@@ -33,7 +34,6 @@ from gradio import (
 )
 from gradio.context import Context
 from gradio.deprecation import check_deprecated_parameters
-from gradio.documentation import document, set_documentation_group
 from gradio.exceptions import DuplicateBlockError, InvalidApiName
 from gradio.helpers import EventData, create_tracker, skip, special_args
 from gradio.themes import Default as DefaultTheme

@@ -32,6 +32,7 @@ import requests
 from fastapi import UploadFile
 from ffmpy import FFmpeg
 from gradio_client import utils as client_utils
+from gradio_client.documentation import document, set_documentation_group
 from gradio_client.serializing import (
     BooleanSerializable,
     FileSerializable,

@@ -50,7 +51,6 @@ from typing_extensions import Literal

 from gradio import media_data, processing_utils, utils
 from gradio.blocks import Block, BlockContext
-from gradio.documentation import document, set_documentation_group
 from gradio.events import (
     Blurrable,
     Changeable,

@@ -6,8 +6,9 @@ from __future__ import annotations
 import warnings
 from typing import TYPE_CHECKING, Any, Callable, Dict, List, Set, Tuple

+from gradio_client.documentation import document, set_documentation_group
+
 from gradio.blocks import Block
-from gradio.documentation import document, set_documentation_group
 from gradio.helpers import EventData
 from gradio.utils import get_cancel_function


@@ -1,4 +1,4 @@
-from gradio.documentation import document, set_documentation_group
+from gradio_client.documentation import document, set_documentation_group

 set_documentation_group("helpers")


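Across these files the commit keeps the same two names, `document` and `set_documentation_group`, and only changes their import source from `gradio.documentation` to `gradio_client.documentation`. This is presumably so the lightweight client package, which can be installed without `gradio`, can document its own classes. The usage pattern is a group-scoped registry: first set a group, then decorate objects, and later call `generate_documentation()` to collect them. The sketch below is a hypothetical minimal registry that shows this pattern. Its signatures and output shape are assumptions and do not describe the real module.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional, TypeVar

T = TypeVar("T")

_current_group: Optional[str] = None
_registry: Dict[str, List[object]] = defaultdict(list)


def set_documentation_group(group: str) -> None:
    """Hypothetical: later @document() uses are filed under this group."""
    global _current_group
    _current_group = group


def document() -> Callable[[T], T]:
    """Hypothetical decorator that records an object in the current group."""
    def wrap(obj: T) -> T:
        _registry[_current_group or "misc"].append(obj)
        return obj  # the decorated object is returned unchanged
    return wrap


def generate_documentation() -> Dict[str, List[dict]]:
    """Hypothetical: build {group: [{"name", "description"}, ...]} from the registry."""
    return {
        group: [
            {
                "name": getattr(obj, "__name__", repr(obj)),
                "description": (getattr(obj, "__doc__", None) or "").strip(),
            }
            for obj in objs
        ]
        for group, objs in _registry.items()
    }


set_documentation_group("helpers")


@document()
class Error(Exception):
    """Raised to show a custom error message in the UI."""


docs = generate_documentation()
```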
@@ -10,11 +10,11 @@ from typing import TYPE_CHECKING, Callable, Dict

 import requests
 from gradio_client import Client
+from gradio_client.documentation import document, set_documentation_group

 import gradio
 from gradio import components, utils
 from gradio.context import Context
-from gradio.documentation import document, set_documentation_group
 from gradio.exceptions import Error, TooManyRequestsError
 from gradio.external_utils import (
     cols_to_rows,

@@ -13,10 +13,10 @@ from typing import TYPE_CHECKING, Any, List

 import pkg_resources
 from gradio_client import utils as client_utils
+from gradio_client.documentation import document, set_documentation_group

 import gradio as gr
 from gradio import utils
-from gradio.documentation import document, set_documentation_group

 if TYPE_CHECKING:
     from gradio.components import IOComponent

@@ -20,10 +20,10 @@ import numpy as np
 import PIL
 import PIL.Image
 from gradio_client import utils as client_utils
+from gradio_client.documentation import document, set_documentation_group

 from gradio import processing_utils, routes, utils
 from gradio.context import Context
-from gradio.documentation import document, set_documentation_group
 from gradio.flagging import CSVLogger

 if TYPE_CHECKING: # Only import for type checking (to avoid circular imports).

@@ -13,6 +13,8 @@ import warnings
 import weakref
 from typing import TYPE_CHECKING, Any, Callable, List, Tuple

+from gradio_client.documentation import document, set_documentation_group
+
 from gradio import Examples, external, interpretation, utils
 from gradio.blocks import Blocks
 from gradio.components import (

@@ -24,7 +26,6 @@ from gradio.components import (
     get_component_instance,
 )
 from gradio.data_classes import InterfaceTypes
-from gradio.documentation import document, set_documentation_group
 from gradio.events import Changeable, Streamable
 from gradio.flagging import CSVLogger, FlaggingCallback, FlagMethod
 from gradio.layouts import Column, Row, Tab, Tabs

@@ -3,8 +3,9 @@ from __future__ import annotations
 import warnings
 from typing import Type

+from gradio_client.documentation import document, set_documentation_group
+
 from gradio.blocks import BlockContext
-from gradio.documentation import document, set_documentation_group
 from gradio.events import Changeable, Selectable

 set_documentation_group("layout")

@@ -4,8 +4,9 @@ Ways to transform interfaces to produce new interfaces
 import asyncio
 import warnings

+from gradio_client.documentation import document, set_documentation_group
+
 import gradio
-from gradio.documentation import document, set_documentation_group

 set_documentation_group("mix_interface")


@@ -33,6 +33,7 @@ from fastapi.responses import (
 )
 from fastapi.security import OAuth2PasswordRequestForm
 from fastapi.templating import Jinja2Templates
+from gradio_client.documentation import document, set_documentation_group
 from jinja2.exceptions import TemplateNotFound
 from starlette.background import BackgroundTask
 from starlette.responses import RedirectResponse, StreamingResponse

@@ -43,7 +44,6 @@ import gradio.ranged_response as ranged_response
 from gradio import utils
 from gradio.context import Context
 from gradio.data_classes import PredictBody, ResetBody
-from gradio.documentation import document, set_documentation_group
 from gradio.exceptions import Error
 from gradio.helpers import EventData
 from gradio.queueing import Estimation, Event

@@ -10,9 +10,9 @@ from typing import Dict, Iterable
 import huggingface_hub
 import requests
 import semantic_version as semver
+from gradio_client.documentation import document, set_documentation_group
 from huggingface_hub import CommitOperationAdd

-from gradio.documentation import document, set_documentation_group
 from gradio.themes.utils import (
     colors,
     fonts,

@@ -3,7 +3,7 @@ aiohttp
 altair>=4.2.0
 fastapi
 ffmpy
-gradio_client>=0.0.5
+gradio_client>=0.0.8
 httpx
 huggingface_hub>=0.13.0
 Jinja2

@@ -3,7 +3,7 @@
 cd "$(dirname ${0})/.."

 echo "Formatting the backend... Our style follows the Black code style."
-ruff gradio test
+ruff --fix gradio test
 black gradio test

 bash client/python/scripts/format.sh # Call the client library's formatting script

@@ -1,18 +0,0 @@
-import os
-import sys
-
-import pytest
-
-import gradio as gr
-
-os.environ["GRADIO_ANALYTICS_ENABLED"] = "False"
-
-
-class TestDocumentation:
-    @pytest.mark.skipif(
-        sys.version_info < (3, 8),
-        reason="Docs use features in inspect module not available in py 3.7",
-    )
-    def test_website_documentation(self):
-        documentation = gr.documentation.generate_documentation()
-        assert len(documentation) > 0

@@ -15,6 +15,9 @@ RUN pnpm build
 RUN pnpm build:website
 RUN pip install build awscli requests
 RUN pip install .
+WORKDIR /gradio/client/python
+RUN pip install .
+WORKDIR /gradio
 RUN python -m build -w
 ARG AWS_ACCESS_KEY_ID
 ENV AWS_ACCESS_KEY_ID $AWS_ACCESS_KEY_ID

@@ -1,6 +1,6 @@
 import os

-from gradio.documentation import document_cls, generate_documentation
+from gradio_client.documentation import document_cls, generate_documentation
 from gradio.events import EventListener

 from ..guides import guides

@@ -1,5 +1,9 @@
 {% if parent != "gradio" %}
-<div class="obj" id="{{ parent[7:].lower() + '-' + obj['name'].lower() }}">
+{% if parent == "gradio_client" %}
+<div class="obj" id="{{ parent.lower() + '-' + obj['name'].lower() }}">
+{% else %}
+<div class="obj" id="{{ parent[7:].lower() + '-' + obj['name'].lower() }}">
+{% endif %}
 {% else %}
 <div class="obj" id="{{ obj['name'].lower() }}">
 {% endif %}

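The new branch matters because the template's `parent[7:]` slice assumes a 7-character `gradio.` prefix. Applied to `gradio_client`, it would drop `gradio_` and produce ids such as `client-client`, while the sidebar links added in this commit point to `#gradio_client-<name>`. The function below restates the template's id logic in Python so the two cases can be compared. The function name is hypothetical, and parent values such as `gradio.Blocks` are an assumed example.

```python
def anchor_id(parent: str, name: str) -> str:
    """Illustrative re-statement of the template's anchor-id branches."""
    if parent != "gradio":
        if parent == "gradio_client":
            # Keep the full package name so ids match "#gradio_client-<name>" links.
            return parent.lower() + "-" + name.lower()
        # Other parents look like "gradio.<Something>"; [7:] drops "gradio.".
        return parent[7:].lower() + "-" + name.lower()
    return name.lower()


client_id = anchor_id("gradio_client", "Client")   # "gradio_client-client"
blocks_id = anchor_id("gradio.Blocks", "launch")   # "blocks-launch"
old_slice = "gradio_client"[7:] + "-client"        # what the old rule produced
```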
@@ -68,7 +72,7 @@
 {% else %}
 <div class="codeblock"><pre><code class="lang-python">{{ parent }}{% if obj["name"] != "__call__" %}.<span>{{ obj["name"] }}{% endif %}(</span><!--
 -->{% for param in obj["parameters"] %}<!--
--->{% if "kwargs" not in param and "default" not in param and param["name"] != "self" %}<!--
+-->{% if "kwargs" not in param and "args" not in param and "default" not in param and param["name"] != "self" %}<!--
 -->{{ param["name"] }}, <!--
 -->{% endif %}<!--
 -->{% endfor %}<!--

@@ -149,7 +153,7 @@
 <p class="text-gray-500 italic">{{ param["annotation"] }}</p>
 {% if "default" in param %}
 <p class="text-gray-500 font-semibold">default: {{ param["default"] }}</p>
-{% elif "kwargs" not in param %}
+{% elif "kwargs" not in param and "args" not in param %}
 <p class="text-orange-600 font-semibold italic">required</p>
 {% endif %}
 </td>

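The two template hunks above make one change: parameters carrying an `"args"` key (a `*args` catch-all) are no longer listed in the signature preview or labelled "required", the same way `**kwargs` was already excluded. The sketch below restates the template condition in Python. It assumes parameter records are dicts in which the keys `"args"`/`"kwargs"` mark variadic parameters, but that record shape and the `skip_varargs` flag are hypothetical.

```python
from typing import Dict, List

# Hypothetical parameter records: the keys "args"/"kwargs" mark *args/**kwargs.
params: List[Dict[str, object]] = [
    {"name": "self"},
    {"name": "src"},
    {"name": "args", "args": True},
    {"name": "api_name", "default": "None"},
    {"name": "kwargs", "kwargs": True},
]


def required_params(params: List[Dict[str, object]], skip_varargs: bool = True) -> List[str]:
    """Re-state the template's 'required' test; skip_varargs=False mimics the old rule."""
    return [
        str(p["name"])
        for p in params
        if "kwargs" not in p
        and not (skip_varargs and "args" in p)
        and "default" not in p
        and p["name"] != "self"
    ]


new_rule = required_params(params)                      # ["src"]
old_rule = required_params(params, skip_varargs=False)  # ["src", "args"]
```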
@@ -156,6 +156,13 @@
 {% for component in docs["routes"] %}
 <a class="px-4 block thin-link" href="#{{ component['name'].lower() }}">{{ component['name'] }}</a>
 {% endfor %}
+<a class="link px-4 my-2 block" href="#clients">Clients
+<a class="thin-link px-4 block" href="#python-client">Python</a>
+<div class="sub-links hidden" hash="#python-client">
+{% for component in docs["py-client"] %}
+<a class="thinner-link px-4 pl-8 block" href="#{{'gradio_client-' + component['name'].lower() }}">{{ component['name'] }}</a>
+{% endfor %}
+</div>
 </div>
 <div class="flex flex-col w-full min-w-full lg:w-10/12 lg:min-w-0">
 <div>

@@ -369,6 +376,40 @@
 {% endwith %}
 {% endfor %}
 </section>
+
+<section id="clients" class="pt-2 flex flex-col gap-10 mb-8">
+<section id="python-client" class="pt-2 flex flex-col gap-10 mb-8">
+<div>
+<h2 id="clients-header"
+class="text-4xl font-light mb-2 pt-2 text-orange-500">
+Client libraries
+</h2>
+<p class="mt-8 text-lg">
+The lightweight Gradio client libraries make it easy to use any Gradio app as an API.
+We currently support a Python client libraries and are developing client
+libraries in other languages.
+</p>
+</div>
+<div>
+<h3 class="text-4xl font-light my-4" id="python-client">
+Python client library
+</h3>
+<p class="mt-8 text-lg">
+The Python client library is `gradio_client`. It is included in the latest
+versions of the `gradio` package, but for a more lightweight experience, you
+can install it using `pip` without having to install `gradio`:
+</p>
+<div class="codeblock"><pre><code class="lang-bash">pip install gradio_client</code></pre></div>
+Here are the key classes and methods in the Python client library:
+</div>
+{% for component in docs["py-client"] %}
+{% with obj=component, parent="gradio_client" %}
+{% include "docs/obj_doc_template.html" %}
+{% endwith %}
+{% endfor %}
+</section>
+</section>
+
 </div>
 </main>
 <script>
